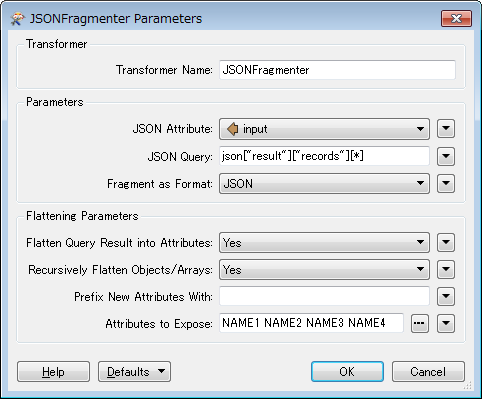

I'm extracting data nightly from JSON services that have no validation. These datasets have over 100 columns. Is there a way to import a schema into the AttributeValidator so that it tests the data type and max length of every column? Setting up tests for data type, structure and max length by hand for every column in every dataset would take forever, but my FME processes keep failing.
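To illustrate the kind of check I mean, here is a rough sketch in plain Python of validating one record against a schema. The schema layout, the column names, and the `validate_record` helper are all made up for the example; this is not an existing FME feature, just the behaviour I'm hoping to get without configuring each column by hand.

```python
# Hypothetical schema: column name -> (expected Python type, max length or None).
# The columns here are invented; a real schema would list all 100+ columns.
SCHEMA = {
    "id": (int, None),
    "name": (str, 50),
    "postcode": (str, 8),
}


def validate_record(record, schema):
    """Return a list of problems found in one decoded JSON record (a dict)."""
    problems = []
    for column, (expected_type, max_length) in schema.items():
        # Structure check: the column must be present at all.
        if column not in record:
            problems.append(f"{column}: missing")
            continue
        value = record[column]
        # Data type check.
        if not isinstance(value, expected_type):
            problems.append(
                f"{column}: expected {expected_type.__name__}, "
                f"got {type(value).__name__}"
            )
            continue
        # Max length check, measured on the value's string form.
        if max_length is not None and len(str(value)) > max_length:
            problems.append(f"{column}: longer than {max_length} characters")
    return problems


good = {"id": 1, "name": "Ann", "postcode": "AB1 2CD"}
bad = {"id": "1", "name": "x" * 51}
print(validate_record(good, SCHEMA))
print(validate_record(bad, SCHEMA))
```

Ideally the records that fail would be routed out and logged instead of failing the whole nightly run.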
