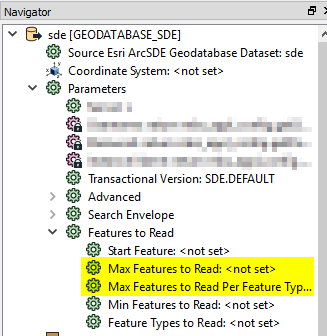

I have a workbench that reads features from a large database to find features that match a certain set of criteria. I only need 2000 features in the output. At the moment I use a counter to count the matching features and only route features to the writer while the count is less than 2000. However, the workbench continues to read features from the database until every feature has been read. What I would like is to stop the reader as soon as 2000 suitable features have been found.
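The difference between counting matches and actually stopping the read can be sketched in plain Python. This is not FME code: `read_features` is a hypothetical stand-in for a database reader that logs every feature it reads, and the `% 7` test is a hypothetical match criterion. `counter_filter` mirrors the counter set-up described above and still pulls every feature from the source; `first_matches` uses `itertools.islice`, which stops asking the source for features once it has produced 2000 matches.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def read_features(total: int, log: List[int]) -> Iterator[dict]:
    """Hypothetical stand-in for a database reader.

    Yields features one at a time and appends each id to `log`,
    so we can see how many features were actually read.
    """
    for i in range(total):
        log.append(i)
        yield {"id": i}


def counter_filter(features: Iterable[dict],
                   matches: Callable[[dict], bool],
                   limit: int) -> List[dict]:
    """Counter approach: keeps only the first `limit` matches,
    but still consumes the whole input."""
    kept = []
    count = 0
    for feature in features:
        if matches(feature):
            count += 1
            if count <= limit:
                kept.append(feature)
    return kept


def first_matches(features: Iterable[dict],
                  matches: Callable[[dict], bool],
                  limit: int) -> List[dict]:
    """Early-stop approach: islice stops pulling from the (lazy) filter
    after `limit` matches, so the reader is never asked for the rest."""
    return list(islice((f for f in features if matches(f)), limit))


def is_match(feature: dict) -> bool:
    # Hypothetical criterion: roughly one feature in seven qualifies.
    return feature["id"] % 7 == 0


log_a: List[int] = []
kept_a = counter_filter(read_features(100_000, log_a), is_match, 2000)

log_b: List[int] = []
kept_b = first_matches(read_features(100_000, log_b), is_match, 2000)

print(len(kept_a), len(log_a))  # 2000 100000
print(len(kept_b), len(log_b))  # 2000 13994
```

Both return the same 2000 features; only the early-stop version avoids reading the remaining input.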

The workaround I have at the moment is to guess how many features I will need to check and set that as the maximum number of features to read. It can take several hours to find 2000 suitable features, and several more hours to read the remaining features from the database that are not required. I already use a Where clause on the reader to do as much of the filtering in the database as possible.
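If (and only if) every matching criterion can be expressed in the reader's Where clause, the row limit can be pushed into the database as well, so the database stops scanning once enough rows qualify. A minimal sketch using Python's built-in `sqlite3` with an in-memory table; the `features` table, its `category` column and the `'wanted'` value are hypothetical. SQLite, PostgreSQL and MySQL use `LIMIT`; other databases use equivalents such as `FETCH FIRST n ROWS ONLY` or `TOP n`.

```python
import sqlite3

# Hypothetical table standing in for the real feature table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE features (id INTEGER PRIMARY KEY, category TEXT)")
conn.executemany(
    "INSERT INTO features (id, category) VALUES (?, ?)",
    ((i, "wanted" if i % 7 == 0 else "other") for i in range(100_000)),
)

# Filter and cap in the same query: without ORDER BY, the database can
# stop as soon as 2000 rows satisfy the WHERE clause.
rows = conn.execute(
    "SELECT id FROM features WHERE category = ? LIMIT ?",
    ("wanted", 2000),
).fetchall()

print(len(rows))  # 2000
conn.close()
```

This does not help when some of the criteria can only be evaluated after the features have been read, which is the case the counter approach covers.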