Hi all,

I’m working with a Feature Service containing approximately 1,000,000 sensors stored in a spatiotemporal big data store. I need to update 10,000 of these sensors based on their unique string ID, like sensors-0x21222.

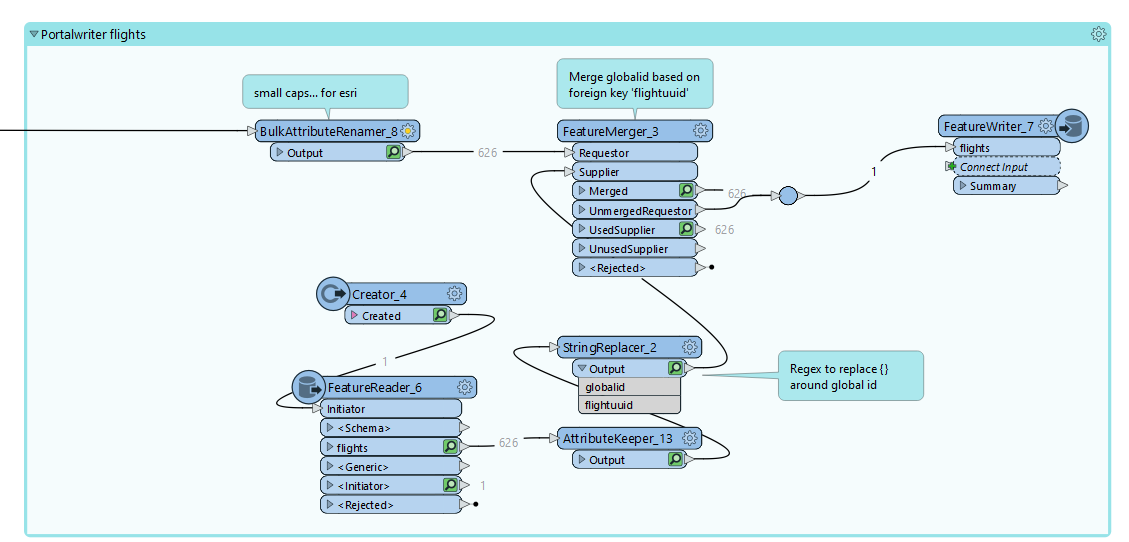

Currently, I'm using the FeatureWriter for the 'Esri ArcGIS Portal Feature Service' with the 'Use Global IDs' option enabled and 'Upsert' set as the 'Feature Operation' in the Feature Type. However, 'Upsert' requires a global ID.

This approach feels very constrained, as it requires importing all 1 million records, merging them, and finding the global ID for each update.

Is there a way to update a Feature Service using a primary key or unique field (such as upserting with a where clause) rather than relying on global IDs? This current method doesn’t seem ETL-friendly, as it requires importing parts of the dataset just to make updates.
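To illustrate what I mean by a where clause on the unique field, here is a minimal sketch in plain Python. It only builds batched `IN (...)` clauses for the IDs I want to update, so that a lookup would touch just those features instead of all 1 million. The field name `sensor_id`, the helper name `batched_where_clauses`, and the batch size are assumptions for illustration, not part of the actual service or of any FME or Esri API.

```python
from typing import Iterable, Iterator, List


def batched_where_clauses(
    ids: Iterable[str],
    field: str = "sensor_id",   # hypothetical name of the unique string field
    batch_size: int = 500,      # assumed batch size; tune to the service's limits
) -> Iterator[str]:
    """Yield SQL-style where clauses, each covering at most batch_size IDs."""
    batch: List[str] = []
    for sensor_id in ids:
        # Double any single quote so the value stays a valid SQL string literal.
        batch.append("'" + sensor_id.replace("'", "''") + "'")
        if len(batch) == batch_size:
            yield f"{field} IN ({', '.join(batch)})"
            batch = []
    if batch:
        # Emit the final, partially filled batch.
        yield f"{field} IN ({', '.join(batch)})"


clauses = list(
    batched_where_clauses(
        ["sensors-0x21222", "sensors-0x21223", "sensors-0x21224"],
        batch_size=2,
    )
)
for clause in clauses:
    print(clause)
```

Each clause could then be used to query only the matching features and their global IDs, but that still means one extra lookup per layer, which is exactly the step I would like to avoid.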

Thanks in advance for any guidance!

Edit:

Maybe I'm misunderstanding something, but it seems that for every feature service, layer, or feature type I want to write to, I first need to fetch the global IDs before I can do an upsert or update.

If you need to update, let's say, 10 feature services with large numbers of features, this just feels wrong.