Hi,

I am new to FME. I currently have 286 old gdb that consist of 15 feature classes, they needs to be updated to a new gdb. Some of the updates includes: changing a point to polygon, merging feature classes, changing fields name, and also mapping value for subtype. I have already figure out how to update an individual gdb.

Right now, I am playing around with Workspace runner and Directory and File pathnames reader. I am uncertain about some of the parameters.

In my workspace, for my reader and writer, does it matter if I load any old gdb as the reader? and any new gdb as the writer?

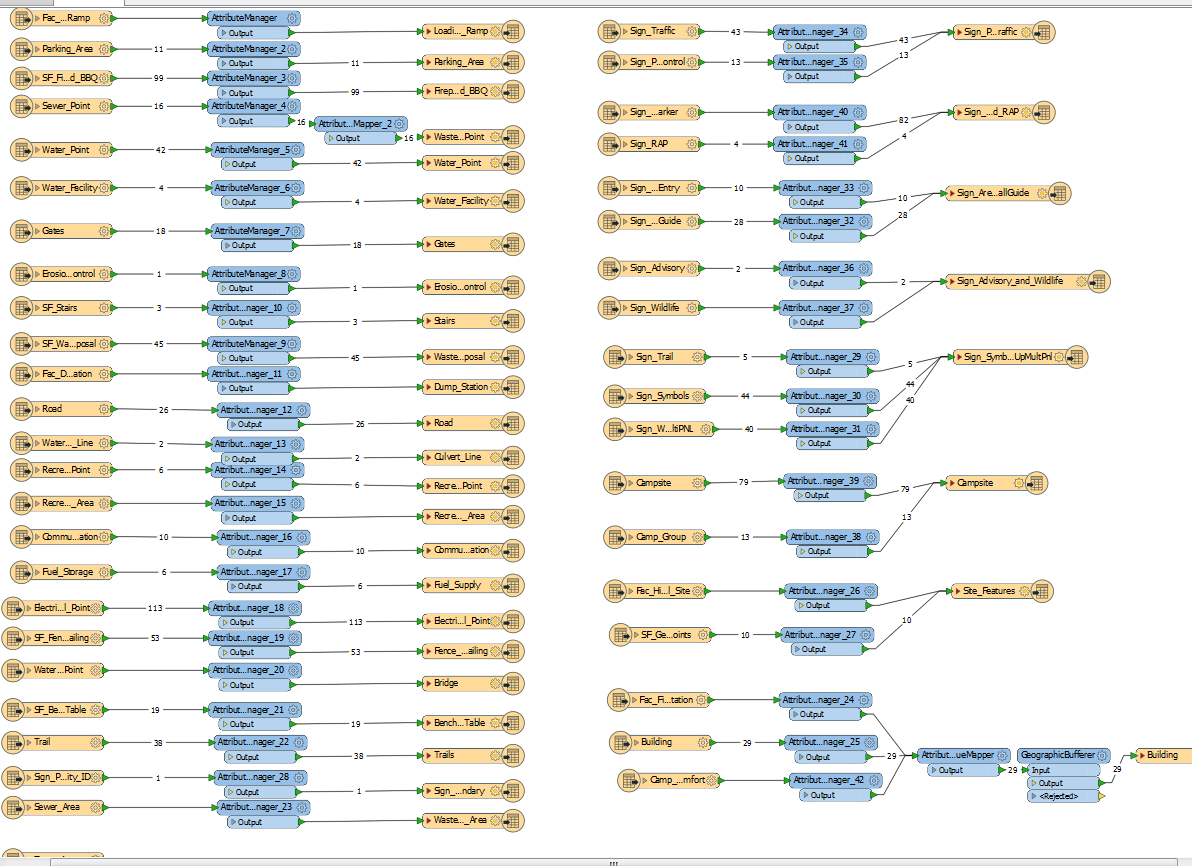

I have attached below what my current workspace looks like.

Can you please help me out? It would be very much appreciated :-)

Thanks,

Vincent