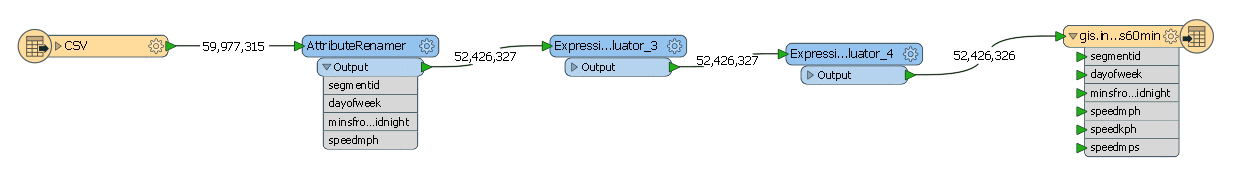

I'm using FME Desktop 64-bit (v2017.1.2.1) and trying to read a large CSV file (approx. 1 GB, about 60 million records) into a PostgreSQL database table; no geometry is involved. The only transformers I'm using are an AttributeRenamer to map the fields and two ExpressionEvaluators to calculate km/h and metres/second from miles/hour (see the screenshot above).
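For reference, the two ExpressionEvaluators only need a constant factor each. The sketch below is plain Python, not the actual expressions in the workbench (which aren't shown). It assumes the international mile of exactly 1609.344 m, which gives 1 mph = 1.609344 km/h = 0.44704 m/s.

```python
# Conversion factors derived from the international mile (exactly 1609.344 m).
METRES_PER_MILE = 1609.344
SECONDS_PER_HOUR = 3600


def mph_to_kmh(mph: float) -> float:
    """Miles per hour -> kilometres per hour (factor 1.609344)."""
    return mph * METRES_PER_MILE / 1000


def mph_to_ms(mph: float) -> float:
    """Miles per hour -> metres per second (factor 0.44704)."""
    return mph * METRES_PER_MILE / SECONDS_PER_HOUR


if __name__ == "__main__":
    for speed in (30.0, 60.0, 70.0):
        print(f"{speed} mph = {mph_to_kmh(speed):.2f} km/h = {mph_to_ms(speed):.2f} m/s")
```

Each conversion is a single multiplication per record, so neither step should need more than the current feature in memory.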

My issue is that running the workbench consumes most of the 8 GB of RAM available on the VM, and this appears to be because the CSV reader reads in all the records before passing any of them to the first transformer (the AttributeRenamer). I ran the workbench overnight and it failed with an FME application crash after approx. 52 million records had been processed. I'm assuming this was memory-related, as the last few lines of the log are:

2018-03-03 01:20:55|17816.4| 1.0|STATRP|AttributeRenamer(AttrSetFactory): Processed 52421236 of 59977315 features

2018-03-03 01:20:56|17818.1| 2.8|STATRP|ResourceManager: Optimizing Memory Usage. Please wait...

2018-03-03 01:20:56|17818.1| 2.8|INFORM|ResourceManager: Optimizing Memory Usage. Please wait...

2018-03-05 12:11:39|17818.2| 0.1|WARN |Warning: not all FMESessions that were created were destroyed before shutdown. This may cause instability

2018-03-05 12:11:39|17818.2| 0.0|WARN |Warning: not all Stashed Objects that were registered were dropped before shutdown. This may cause instability
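If every record really is buffered before the AttributeRenamer, the whole dataset has to fit in RAM at once. A rough back-of-envelope check follows. It treats "8GB" and "approx 1gb" as 8 GiB and 1 GiB (assumptions) and uses the 59,977,315 feature count from the log. On that basis each feature gets only about 143 bytes of RAM, roughly eight times the ~18 bytes an average CSV row occupies on disk. Whether FME's per-feature overhead exceeds that is not confirmed here, but a budget that tight makes a memory-related failure plausible.

```python
# Back-of-envelope: RAM available per feature if the whole file were held
# in memory at once. Inputs are assumptions based on the post and the log.
TOTAL_FEATURES = 59_977_315   # "Processed ... of 59977315 features" in the log
RAM_BYTES = 8 * 1024**3       # treating "8GB" as 8 GiB; the OS and FME also need part of this
FILE_BYTES = 1 * 1024**3      # treating "approx 1gb" as 1 GiB


def bytes_per_feature(total_bytes: int, features: int) -> float:
    """Average number of bytes per feature for a given byte total."""
    return total_bytes / features


if __name__ == "__main__":
    on_disk = bytes_per_feature(FILE_BYTES, TOTAL_FEATURES)
    ram_budget = bytes_per_feature(RAM_BYTES, TOTAL_FEATURES)
    print(f"average CSV row on disk:        {on_disk:.1f} bytes")
    print(f"RAM available per feature:      {ram_budget:.1f} bytes")
    print(f"ratio RAM budget / row on disk: {ram_budget / on_disk:.1f}x")
```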

My question is: why is FME using so much memory to read the file?

I'm not using any group-based transformers that would normally require all the features to be held in memory, so surely the records can be read from the CSV, processed and disposed of in sequence, using very little memory?
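The per-record behaviour expected here can be illustrated outside FME. The following is a plain-Python sketch, not a description of FME's internals. Generators read, rename, compute and write one record at a time, so memory is bounded by the batch size rather than the file size. The column names, the batch size and the `sink` callable (a stand-in for whatever performs the PostgreSQL insert) are hypothetical.

```python
import csv
import io
from typing import Callable, Iterable, Iterator

# Hypothetical column names: the real CSV schema is not shown in the post.
RENAMES = {"SPEED_MPH": "speed_mph", "REC_ID": "record_id"}
METRES_PER_MILE = 1609.344


def read_rows(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one record at a time; csv.DictReader does not load the whole file."""
    yield from csv.DictReader(lines)


def rename(rows: Iterator[dict]) -> Iterator[dict]:
    """Attribute-renamer step: map source field names to target names."""
    for row in rows:
        yield {RENAMES.get(k, k): v for k, v in row.items()}


def add_speeds(rows: Iterator[dict]) -> Iterator[dict]:
    """Expression-evaluator steps: derive km/h and m/s from mph."""
    for row in rows:
        mph = float(row["speed_mph"])
        row["speed_kmh"] = mph * METRES_PER_MILE / 1000
        row["speed_ms"] = mph * METRES_PER_MILE / 3600
        yield row


def write_in_batches(rows: Iterator[dict], sink: Callable[[list], None],
                     batch_size: int = 10_000) -> int:
    """Pass fixed-size batches to `sink`; at most `batch_size` rows are held at once."""
    batch, total = [], 0
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            sink(batch)
            total += len(batch)
            batch = []  # start a fresh list so the sink keeps its own copy
    if batch:
        sink(batch)
        total += len(batch)
    return total


if __name__ == "__main__":
    sample = io.StringIO("REC_ID,SPEED_MPH\n1,30\n2,60\n3,70\n")
    received = []
    n = write_in_batches(add_speeds(rename(read_rows(sample))), received.append, batch_size=2)
    print(n, [len(b) for b in received])
```

For a real file, `read_rows` would be given an open file handle (opened with `newline=""`), and `sink` would hand each batch to a database driver's bulk-insert call. Peak memory then depends on `batch_size`, not on the 60 million rows in the file.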

Despite the large file size, I was expecting FME to handle this much better.

Cheers

John