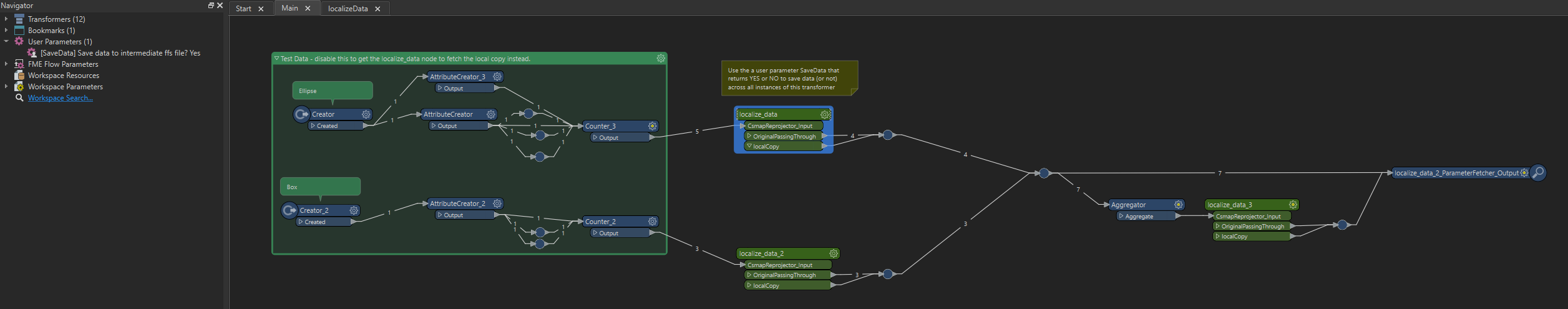

This is meant to be a quality-of-life improvement when working with unreliable data providers or an unreliable internet connection. I hope this functionality already exists in FME, but I could not find it.

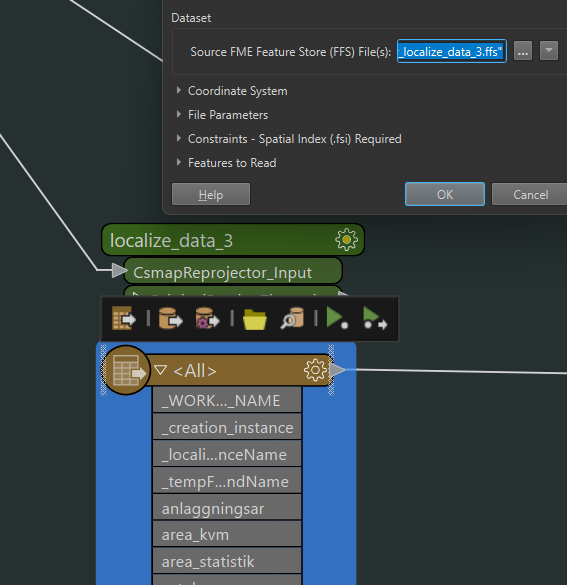

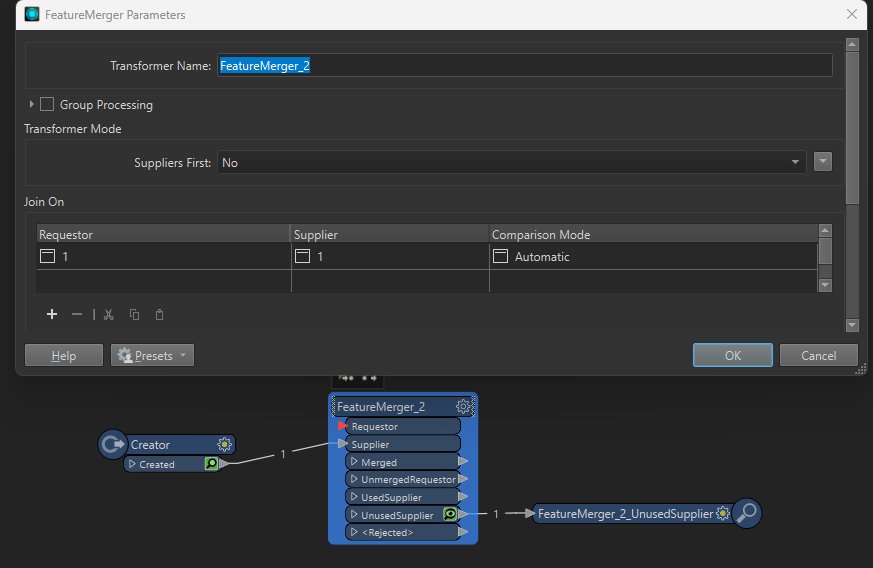

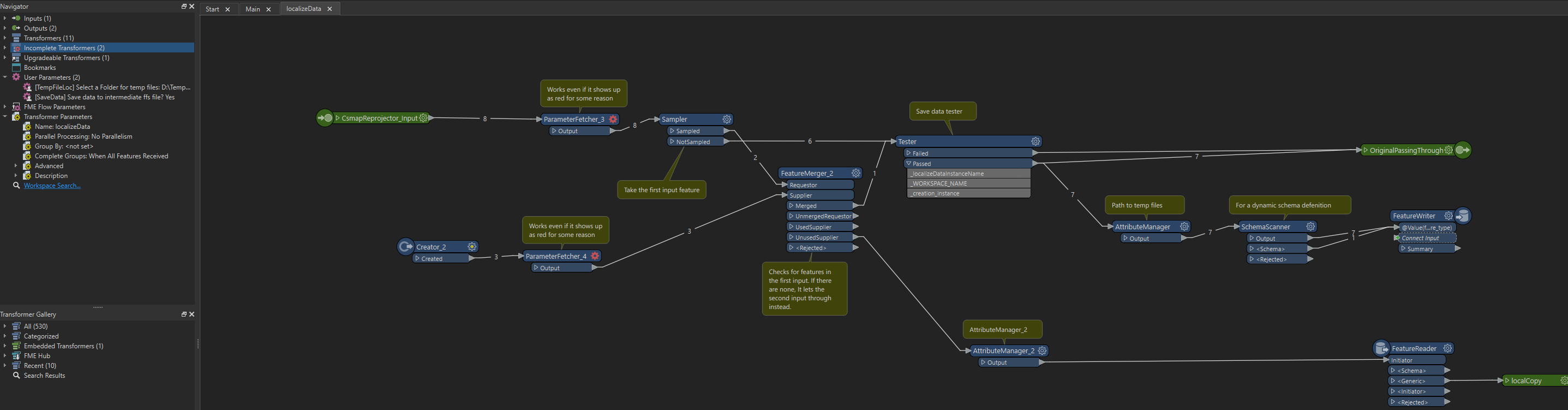

I am trying to make a custom node that saves the data stream it intercepts locally, and reads that saved data instead if the original source doesn't come through. There are two problems I have been unable to solve. The first is that I have to run the script with feature caching enabled to get it to save the files at all, and even then there is an error on saving. It does not seem to understand the grouping for some reason:

localize_data_2_FeatureWriter (WriterFactory): Group 2 / 2: MULTI_WRITER: No dataset was specified for MULTI_WRITER_DATASET or localize_data_2_FeatureWriter_0_DATASET or FFS_DATASET
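For clarity, this is roughly the behaviour I am after, sketched in plain Python outside FME. The function and file names are hypothetical and this is not an FME API: try the live source, refresh the local copy when it succeeds, and read the local copy when it fails.

```python
from __future__ import annotations

import json
from pathlib import Path
from typing import Callable


def read_with_local_fallback(fetch: Callable[[], list[dict]], cache_file: Path) -> list[dict]:
    """Hypothetical helper: prefer the live source, fall back to the last saved copy."""
    try:
        records = fetch()
    except OSError:
        # Network/IO failure (ConnectionError, URLError, ... are OSError subclasses):
        # use the last saved copy if there is one, otherwise re-raise the error.
        if cache_file.exists():
            return json.loads(cache_file.read_text(encoding="utf-8"))
        raise
    # The live source worked: overwrite the local copy so it stays current.
    cache_file.write_text(json.dumps(records), encoding="utf-8")
    return records
```

Other exception types from `fetch` are deliberately not caught, so real bugs are not hidden behind stale cached data.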

The second problem is reading the files dynamically and restoring the exposed attributes, for which I have not found any solution yet. I just want to apply the same attributes again. Maybe I could somehow use a saved schema file connected to the reader? I have uploaded the script in case anyone has ideas (or even knows whether this function already exists in FME).
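To make the "saved schema" idea concrete, here is a minimal plain-Python sketch, again with hypothetical helper names and not an FME API: store the attribute names seen when the live data comes through, then reapply that list to the cached records so every attribute is present again, with missing values set to `None`.

```python
from __future__ import annotations

import json
from pathlib import Path


def save_schema(records: list[dict], schema_file: Path) -> list[str]:
    """Hypothetical helper: record every attribute name seen, in first-seen order."""
    # dict.fromkeys keeps insertion order and drops duplicates.
    names = list(dict.fromkeys(key for rec in records for key in rec))
    schema_file.write_text(json.dumps({"attributes": names}), encoding="utf-8")
    return names


def apply_schema(records: list[dict], schema_file: Path) -> list[dict]:
    """Hypothetical helper: give each record every saved attribute, None if absent."""
    names = json.loads(schema_file.read_text(encoding="utf-8"))["attributes"]
    return [{name: rec.get(name) for name in names} for rec in records]
```

Saving only names (not types) keeps the sketch small; a real schema file would probably also need attribute types.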

Sincerely,

Robin