Hi,

I appear to have an issue with a FeatureWriter failing when writing a large number of features.

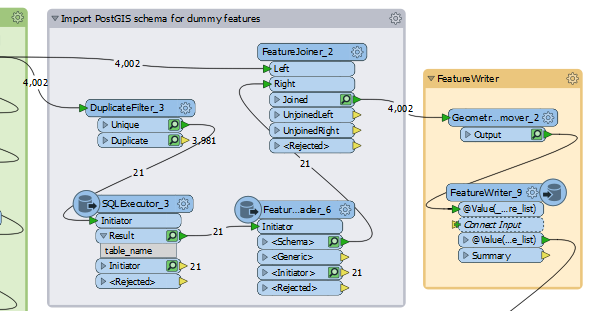

I have a list of features for which I want to write some dummy data using a FeatureWriter. For each entry in the list, I fetch one object (using a Sampler) and the relevant schema from PostGIS tables, then use a FeatureJoiner to match the list feature to the object and schema features. After that, I remove the geometry and the feature values, so each feature in the list has just one empty object. That's a lot of features, but very little actual data.

I then write everything to the FeatureWriter. This works fine when the list is only about 500 items long, so there doesn't appear to be anything wrong with the process itself. However, when I test lists of about 4000 features, the writer seems to time out and fails on a random feature.

The error messages I'm getting are along the lines of:

Geodatabase Error (-2147220987): The user does not have permission to execute the operation.

FileGDB Writer: A feature could not be written

Feature Type: `J_080M_GDE_MINEX'

*Then it lists all of the attributes*

FeatureJoiner(FeatureJoinerFactory): A fatal error has occurred. Check the logfile above for details

Is this a timeout issue, and if so, can it be resolved?

Regards