I have an FME Cloud Starter instance (2019.1.3.1) with 2 engines. At the moment it mainly runs one workspace, which takes 20-30 seconds on average and runs on a 1-minute schedule, plus a few other jobs (10 at most) that are either scheduled or run ad hoc. No job queues are in use, so I would expect each of the two engines to be used "about 50%" of the time.

However, it recently started to show a preference for running many jobs in a row on the same engine. This began when I added that workspace on the 1-minute schedule, replacing an ad-hoc workspace with similar load figures.

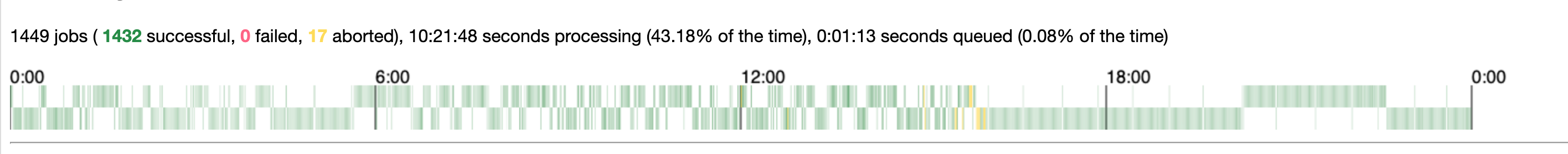

This load graph from yesterday shows it clearly. I'm especially surprised by the sudden switch to running almost all jobs in a given hour on the same engine.

Of the 500 jobs currently on the Completed page, 347 ran on Engine2. That includes a whole stretch this morning where only about 1 in every 30 jobs ran on Engine1.
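For scale, here is a quick check of how unlikely 347 out of 500 would be if engine assignment were a pure coin flip. This is a minimal sketch under a simplifying assumption of mine (each job independently lands on Engine2 with probability 0.5), not a description of how FME Server actually assigns jobs; `tail_probability` is just a hypothetical helper name.

```python
from math import comb

def tail_probability(n: int, k: int) -> float:
    """Hypothetical helper: P(X >= k) for X ~ Binomial(n, 0.5), summed exactly with integers."""
    favourable = sum(comb(n, i) for i in range(k, n + 1))
    # Exact integer counts, converted to a float only in this final division.
    return favourable / 2**n

# Assumption: each of the 500 jobs independently goes to Engine2 with p = 0.5.
p = tail_probability(500, 347)
print(f"P(>= 347 of 500 jobs on Engine2) = {p:.2e}")
```

The result is far below any plausible chance level, which suggests the engine choice is systematic rather than a random 50/50 split.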

Is there a logical explanation for this?