Hi,

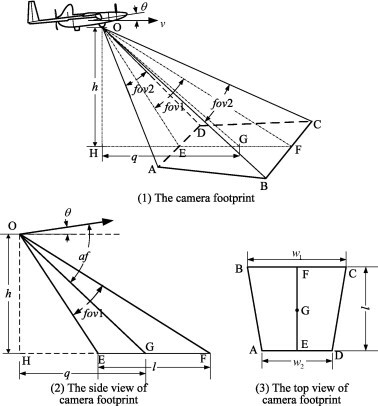

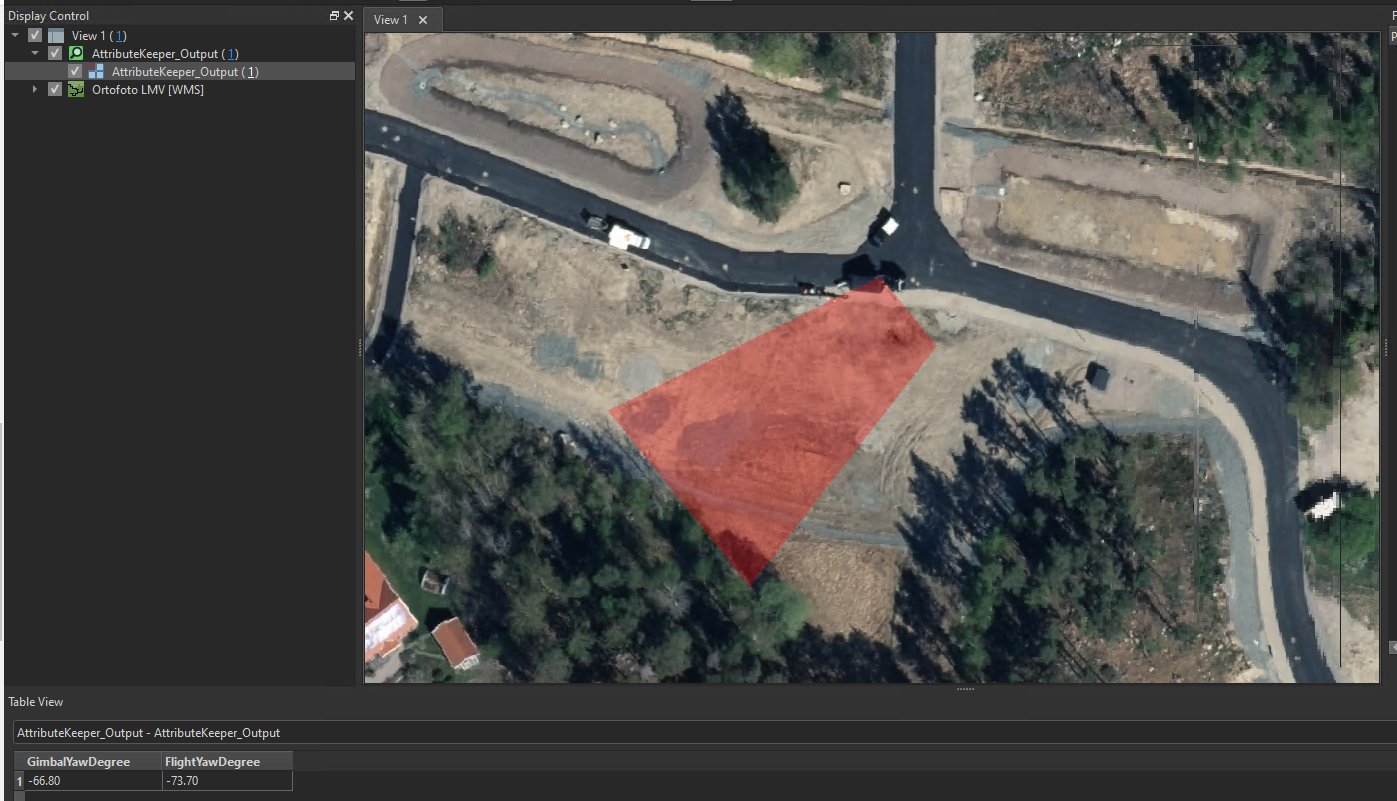

We would like to calculate the real-world footprint of drone images and output it as a trapezoid polygon. As far as I can tell, this should be possible using only the EXIF data we get from the custom transformer "exifReader".

I found a Python version (linked below), but the script requires installing the additional vector3d package. Does anyone have a solution for calculating drone image footprints?

https://gist.github.com/luipir/dc33864b53cf6634f9cdd2bce712d3d9
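For reference, a minimal sketch of the geometry using only the Python standard library (no vector3d) might look like the following. It is not the method used in the gist above, just one way to project the four image corners onto the ground. The assumptions are: flat terrain, no gimbal roll, altitude given as height above ground, pitch following the DJI convention (-90 = straight down, 0 = horizon), and yaw in degrees clockwise from north. The function names are hypothetical, and the metres-to-degrees conversion is a rough small-distance approximation.

```python
import math


def fov_deg(sensor_mm, focal_mm):
    """Field of view in degrees from sensor dimension and focal length (pinhole model)."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))


def footprint_offsets(altitude_m, hfov_deg, vfov_deg, pitch_deg, yaw_deg):
    """Return the four ground corners as (east, north) offsets in metres
    from the point directly below the camera.

    Order: top-left, top-right, bottom-right, bottom-left of the image.
    Assumes flat ground, no roll, pitch -90 = nadir, yaw clockwise from north.
    """
    tilt = math.radians(90.0 + pitch_deg)  # tilt away from nadir
    yaw = math.radians(yaw_deg)
    tan_h = math.tan(math.radians(hfov_deg) / 2)
    tan_v = math.tan(math.radians(vfov_deg) / 2)

    corners = []
    for sv, sh in ((1, -1), (1, 1), (-1, 1), (-1, -1)):
        fx, fy = sv * tan_v, sh * tan_h
        # Downward component of the corner ray after tilting the camera.
        down = math.cos(tilt) - fx * math.sin(tilt)
        if down <= 1e-9:
            raise ValueError("corner ray does not hit the ground (at or above horizon)")
        scale = altitude_m / down
        fwd = scale * (fx * math.cos(tilt) + math.sin(tilt))
        right = scale * fy
        # Rotate from camera heading into east/north.
        east = fwd * math.sin(yaw) + right * math.cos(yaw)
        north = fwd * math.cos(yaw) - right * math.sin(yaw)
        corners.append((east, north))
    return corners


def offsets_to_lonlat(lon, lat, corners):
    """Approximate conversion of metre offsets to lon/lat (small areas only)."""
    m_per_deg = 111320.0  # rough metres per degree of latitude
    cos_lat = math.cos(math.radians(lat))
    return [(lon + e / (m_per_deg * cos_lat), lat + n / m_per_deg)
            for e, n in corners]
```

With a nadir shot the result is a rectangle; as the gimbal pitches up toward the horizon, the far edge widens and the polygon becomes a trapezoid. In FME, something like this could presumably run inside a PythonCaller, with altitude, gimbal pitch and yaw, focal length, and sensor size taken from whatever attributes the EXIF reader exposes for your drone.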

The custom transformer FootprintCreator promises something similar, but it requires a CSV file from the camera. Does anyone have a suggestion or solution? It would be a neat transformer that I think a lot of people would benefit from. We have a couple of different DJI drones.

Any ideas? Could the problem lie with our drone readings or is there something else? Have you had the chance to test the workflow on any drone images you have?
