Hi all,

I have a workbench which takes a CSV, creates vertices and then publishes to ArcGIS Portal. This works fine and I can view the data in Portal, but it fails if I run the workbench again to overwrite the feature service, which is something I need to do every morning.

I get this error:

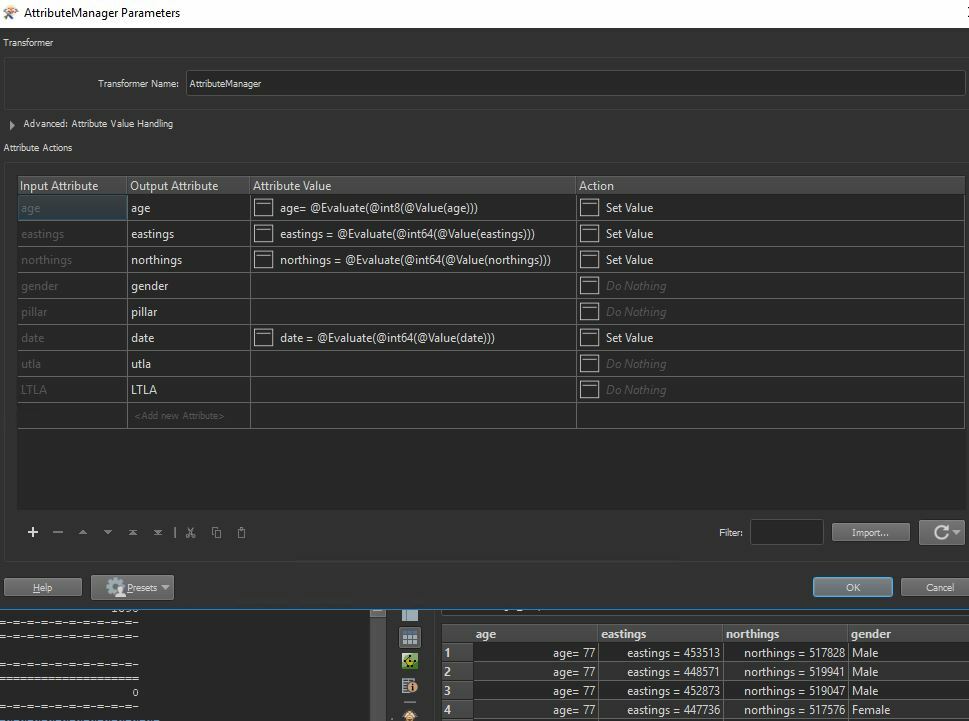

ArcGIS Portal Feature Service Writer: 'addResults' error for a feature in 'COVID_Joined_Tables'. The error code from the server was '10500' and the message was: 'Cannot convert a value of type 'java.lang.Double' to 'SMALLINT'.'

I have the Portal writer set to INSERT with Truncate First set to Yes, which I think is the right setting to be using?

I guess it is something to do with Portal converting a field type when the layer gets created, and then finding a mismatch when the workbench tries to overwrite it.

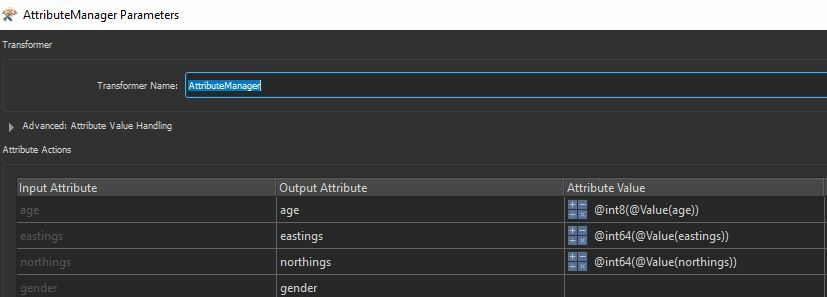

I have checked the field attributes and only one field is listed as SmallInteger, so I tried changing that one, thinking it might be the field causing the problem, but no luck. I have attached a picture of this and also of the overall workbench.
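In case it helps anyone narrow this down, here is a rough sketch of how the CSV could be checked outside the workbench for values that would not fit a SMALLINT (whole numbers from -32768 to 32767). The function name `find_smallint_problems` and the sample columns `cases` and `rate` are made up for illustration and are not my real field names. It also assumes the bad values come from the CSV itself rather than from the attribute type assigned in the workbench, which may not be the case.

```python
import csv
import io

# SMALLINT is a 16-bit signed integer.
SMALLINT_MIN, SMALLINT_MAX = -32768, 32767


def find_smallint_problems(rows, fields):
    """Return {field: [raw values]} for values that are not whole
    numbers inside the SMALLINT range. Empty cells are skipped."""
    problems = {}
    for row in rows:
        for field in fields:
            raw = (row.get(field) or "").strip()
            if raw == "":
                continue
            try:
                value = float(raw)
            except ValueError:
                # Not numeric at all.
                problems.setdefault(field, []).append(raw)
                continue
            if not value.is_integer() or not (SMALLINT_MIN <= value <= SMALLINT_MAX):
                problems.setdefault(field, []).append(raw)
    return problems


# Hypothetical sample data standing in for the real CSV.
sample = io.StringIO("area,cases,rate\nA,12,3.5\nB,40000,2\nC,7,1\n")
result = find_smallint_problems(csv.DictReader(sample), ["cases", "rate"])
print(result)
```

With the real file, `io.StringIO(...)` would be replaced by `open("your_file.csv", newline="")`, and the field list by whichever columns Portal shows as SmallInteger.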

Any ideas please?

Thanks, Dan
