Hi

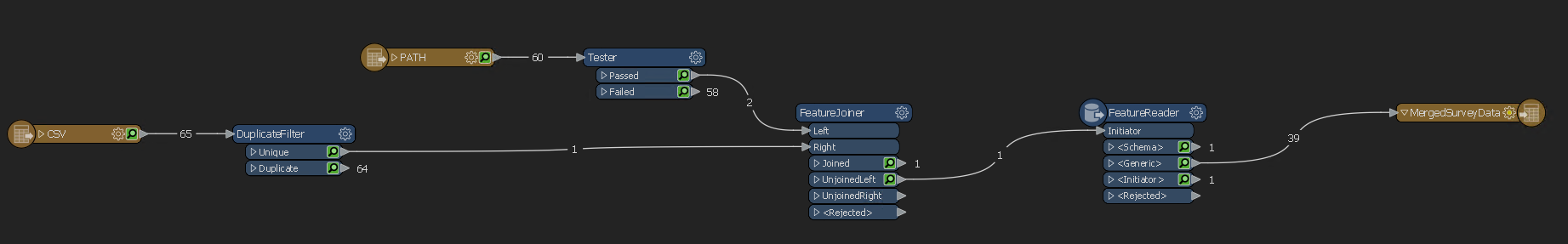

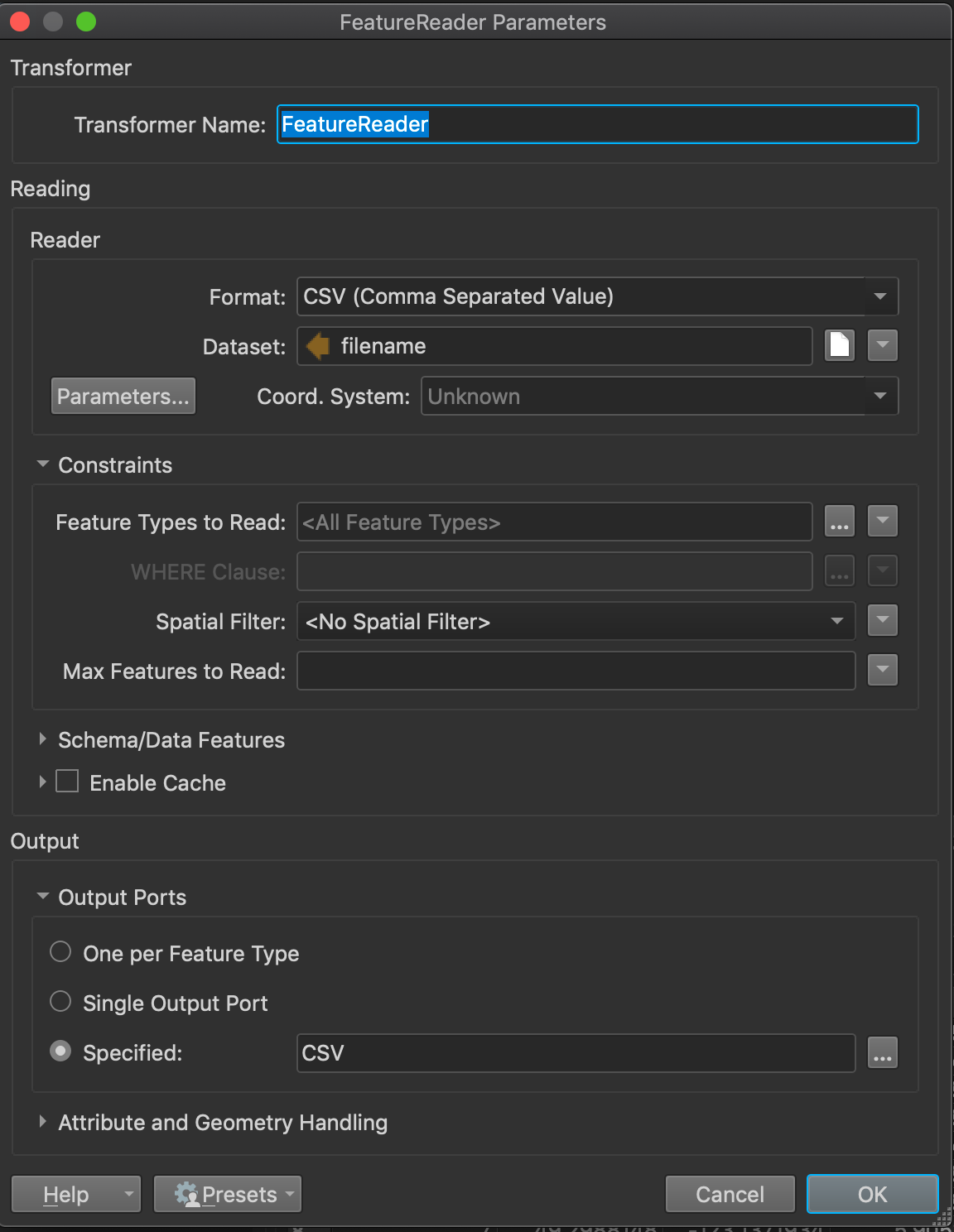

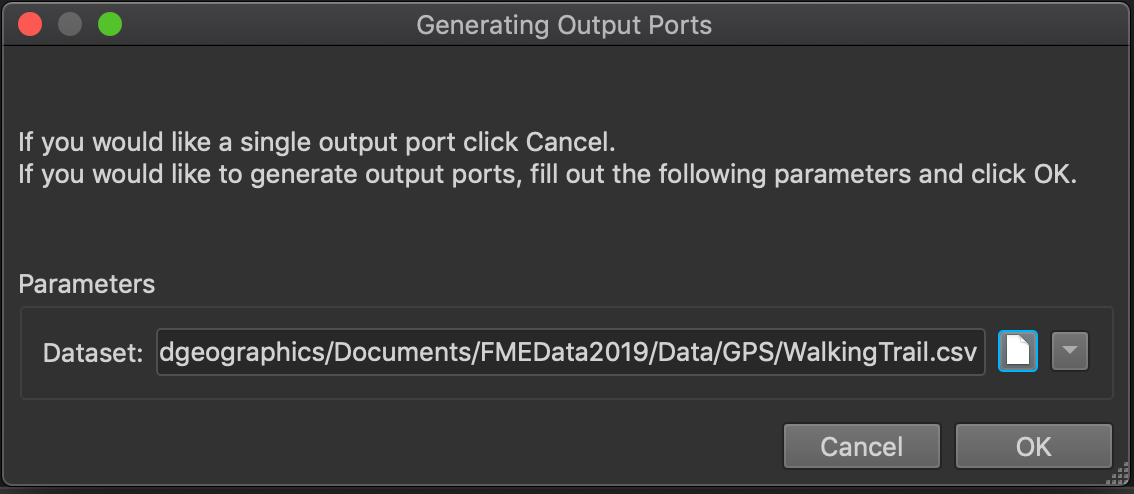

We are recieving a daily csv from a supplier and I would like to merge them all into one file (ideally write to a sql server database but could just be a csv). I have enabled the reader to read everything in the folder, which works fine. However, if I run this every day, it will become increasing inefficient to truncate the table being written and update it.

Is there a way that I can check if a file has already been written and then just append ones that haven't?

Thanks

Mark