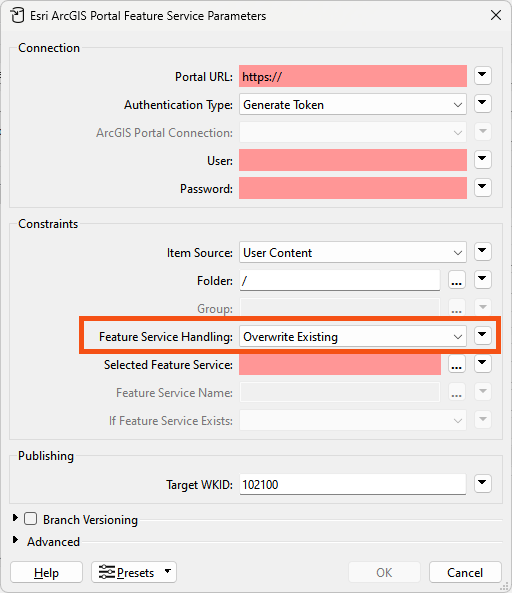

I am trying to update a feature layer in our ESRI Portal from a file geodatabase (FGDB). We can already insert, update, and delete features successfully. However, we would also like the schema to be dynamic, because we may add or remove fields in the FGDB. I have tried several times to get a dynamic writer working so that it accounts for a schema change, but without luck. I have also tried deleting the data in the feature layer and then inserting it again, but that produced errors.
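To make the goal concrete, here is a minimal sketch of the schema comparison a dynamic update would need before any features are written. It is plain Python with no ArcGIS or FME calls. The function name `diff_schema` and the field lists are hypothetical and only for illustration, and field names are assumed to be case-insensitive.

```python
def diff_schema(source_fields, target_fields):
    """Compare two {field_name: field_type} mappings.

    Returns (to_add, to_delete, type_changed):
      to_add       - fields in the source but missing from the target
      to_delete    - fields in the target that no longer exist in the source
      type_changed - fields present in both but with a different type
    """
    # Assumption: field names are matched case-insensitively.
    src = {name.lower(): (name, ftype) for name, ftype in source_fields.items()}
    tgt = {name.lower(): (name, ftype) for name, ftype in target_fields.items()}

    to_add = {src[k][0]: src[k][1] for k in src if k not in tgt}
    to_delete = sorted(tgt[k][0] for k in tgt if k not in src)
    type_changed = sorted(
        src[k][0] for k in src if k in tgt and src[k][1] != tgt[k][1]
    )
    return to_add, to_delete, type_changed


# Hypothetical field lists: the FGDB (source) versus the Portal layer (target).
fgdb_fields = {"OBJECTID": "OID", "Name": "String", "Status": "String", "Height": "Double"}
layer_fields = {"OBJECTID": "OID", "Name": "String", "Owner": "String", "Height": "Integer"}

to_add, to_delete, type_changed = diff_schema(fgdb_fields, layer_fields)
print("add:", to_add)
print("delete:", to_delete)
print("type changed:", type_changed)
```

In this made-up case the layer would need `Status` added and `Owner` removed, and `Height` would need attention because its type differs. What I have not been able to do is apply that kind of difference to the hosted layer itself.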

Does anyone have advice, or has anyone done this before?

The FGDB is only a testing stage; eventually we want to update the layer directly from SDE.
