I have created a storage class and persistent volume according to the Safe Software documentation (https://docs.safe.com/fme/html/FME-Flow/AdminGuide/Kubernetes/Kubernetes-Deploying-to-Amazon-EKS.htm).

This is my helm install command:

helm install fmeserver safesoftware/fmeserver-2023-0 --set fmeserver.image.tag=21821-20220728 --set deployment.ingress.general.ingressClassName=alb --set storage.fmeserver.class=efs-sc --set deployment.deployPostgresql=false --set storage.fmeserver.accessMode=ReadWriteMany --set fmeserver.database.host=mycluster.rds.amazonaws.com --set fmeserver.database.adminUser=user --set fmeserver.database.adminPasswordSecret=fmeserversecret --set fmeserver.database.adminPasswordSecretKey=password --set fmeserver.database.user=user --set fmeserver.database.name=fmeserver --set fmeserver.database.password=password --set fmeserver.database.adminDatabase=postgres --set deployment.startAsRoot=true -n gis-fmeserver

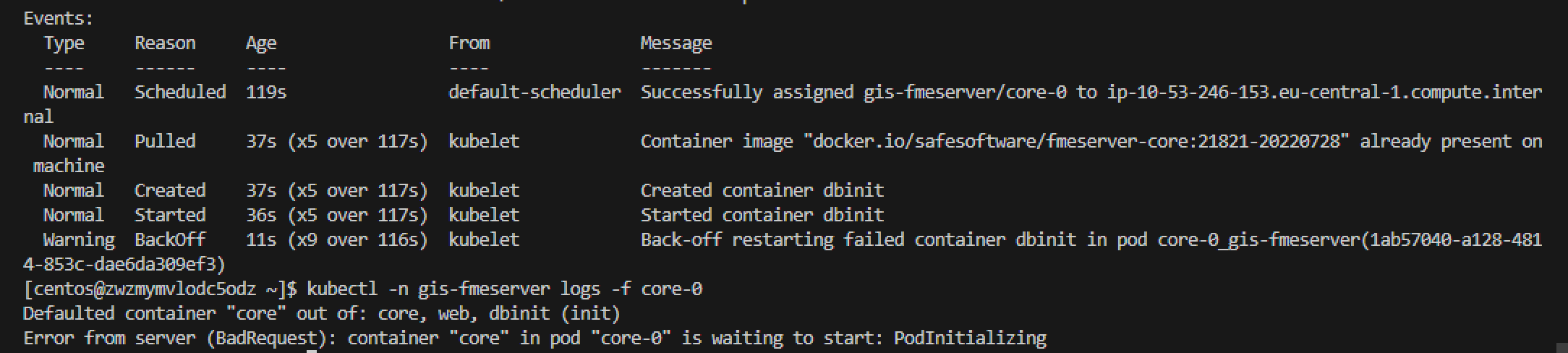

Now my core pod is failing with the following details:

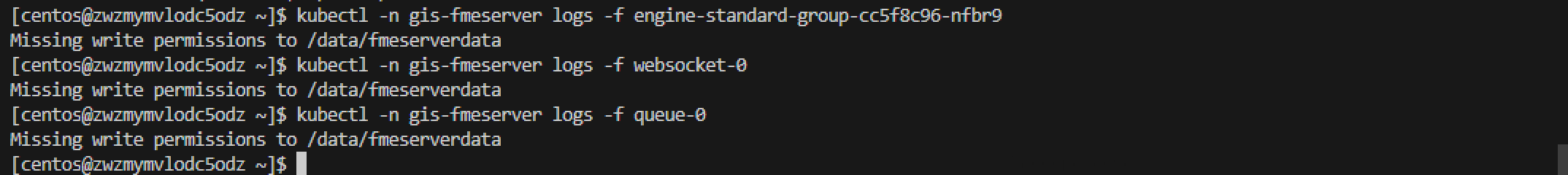

All the other pods are failing with the following errors:

Note that the EFS file system used here is also in use by other services. Please share any insights that would help me fix these issues.
