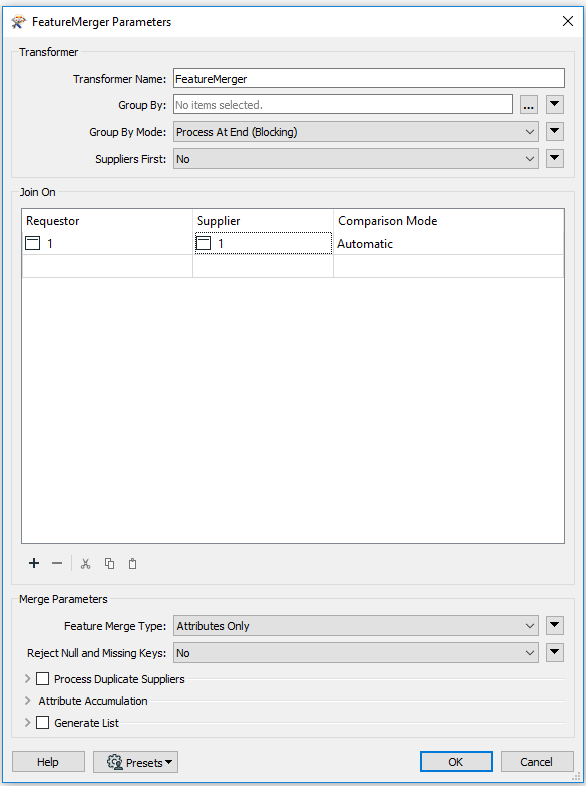

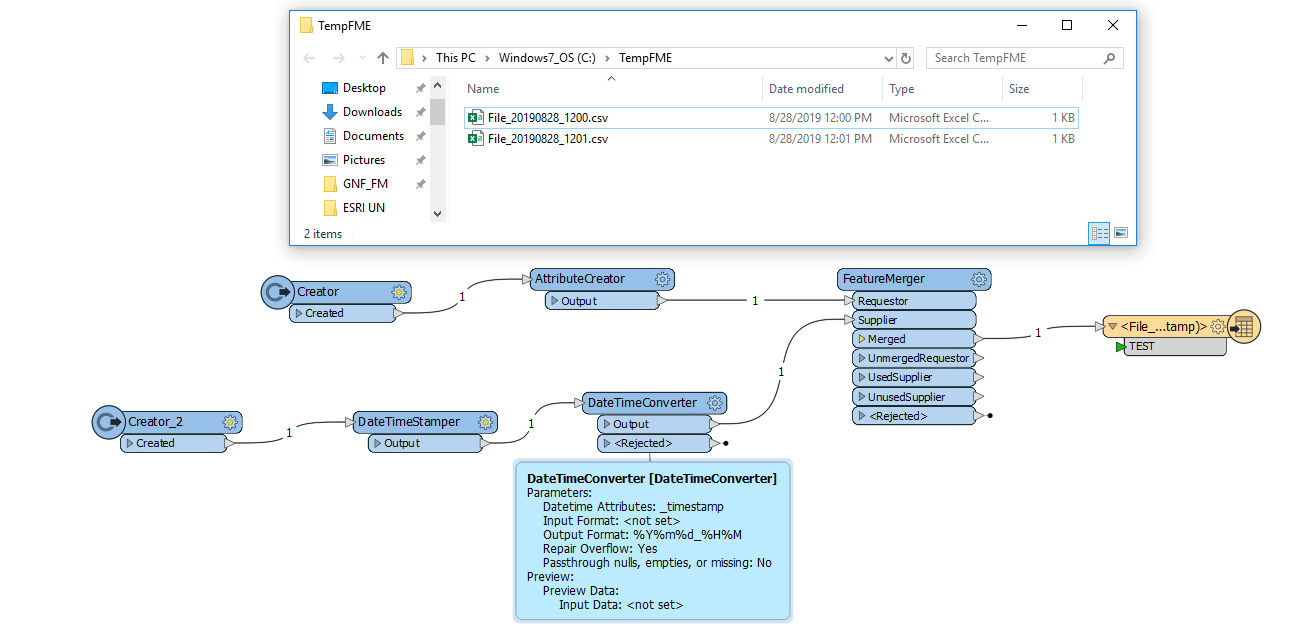

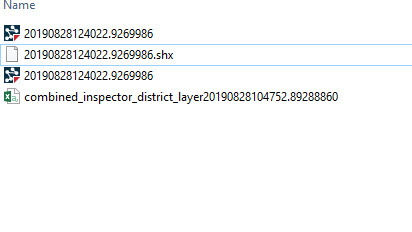

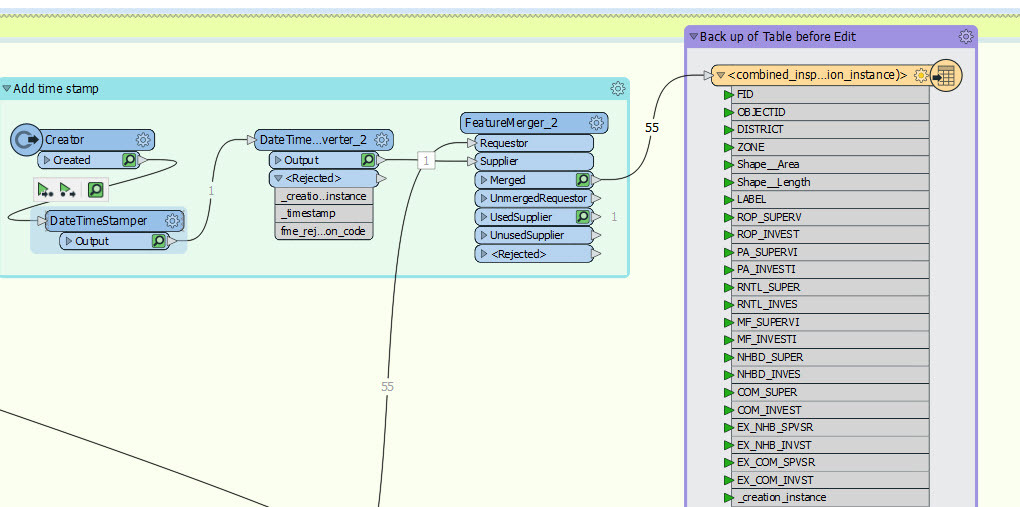

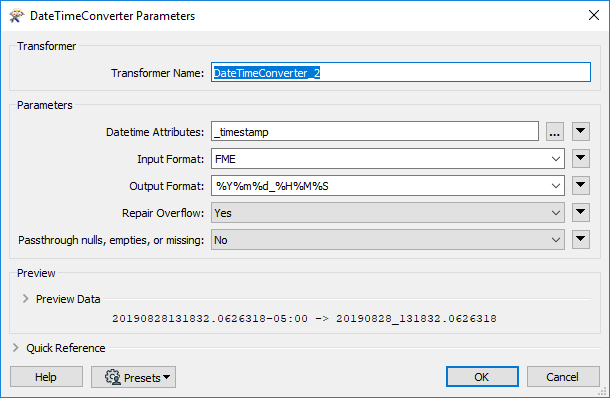

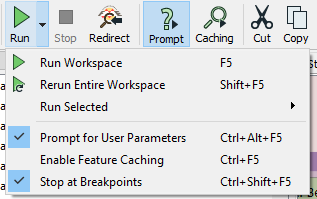

I am trying to write a CSV file whose filename includes a date/timestamp. My situation is the same as the question here: https://knowledge.safe.com/questions/35824/write-csv-file-where-filename-includes-datetimesta.html. I tried adding the timestamp to the name, but that produces a separate CSV for each row of the original table. I tried a parallel Creator > DateTimeStamper branch, but that generates two CSVs. I also tried a FeatureMerger, but I'm not sure what to join on: my only options are _timestamp and a column from the source data, and those values don't match. Should I ask a new question for this particular instance of the problem, or post on the aforementioned thread?
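To make the intended result concrete, here is a minimal sketch in plain Python (not FME's API) of what I am after: the timestamp is computed once and reused for the whole file, so every row lands in a single CSV. The function name `write_rows_with_timestamped_name`, the `export` prefix, and the `%Y%m%d_%H%M%S` format are hypothetical choices for illustration only.

```python
import csv
from datetime import datetime
from pathlib import Path


def write_rows_with_timestamped_name(rows, fieldnames, out_dir, prefix="export", now=None):
    """Write all rows to ONE CSV whose name carries a single timestamp.

    Hypothetical helper for illustration; names and format are assumptions.
    """
    # Compute the timestamp once, before any row is written. If it were
    # recomputed per row, each row could end up with a different filename.
    stamp = (now or datetime.now()).strftime("%Y%m%d_%H%M%S")
    out_path = Path(out_dir) / f"{prefix}_{stamp}.csv"

    # newline="" lets the csv module control line endings itself.
    with out_path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return out_path
```

In workspace terms, I assume the equivalent is to attach one shared timestamp value to every feature (for example by merging on a constant key rather than on a source column), but I have not confirmed that this is the recommended approach.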

This post is closed to further activity.

It may be an old question, an answered question, an implemented idea, or a notification-only post.

Please check post dates before relying on any information in a question or answer.

For follow-up or related questions, please post a new question or idea.

If there is a genuine update to be made, please contact us and request that the post is reopened.
