Is it possible to run the WorkspaceRunner with “Wait for Job to Complete” = Yes while also setting the number of concurrent processes? It should behave the same way as “Wait for Job to Complete” = No, the only difference being when the success or failure of each run is reported.

Question

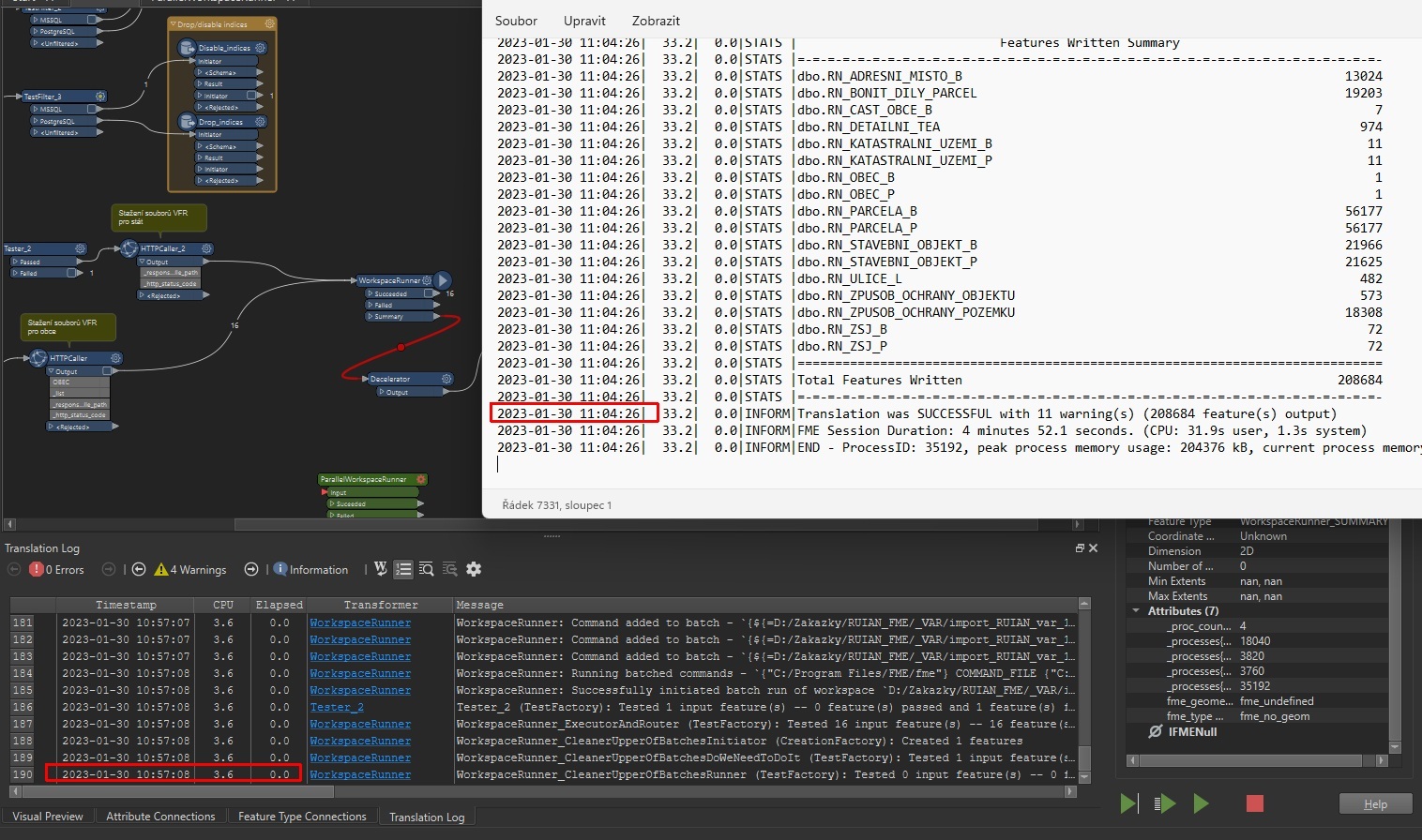

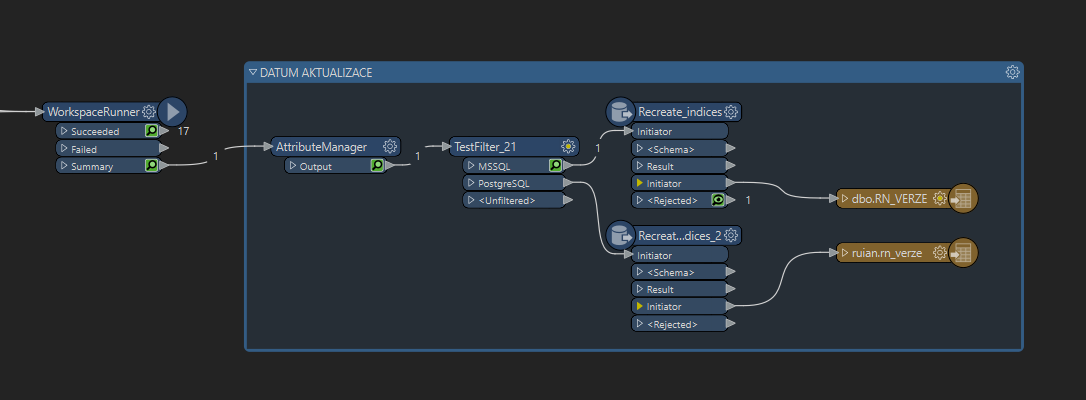

Hello! I have more or less the same request. I'm fetching a series of XMLs in parallel with the WorkspaceRunner, and after the import I need to recreate the indices on the DB. That workflow is connected to the Summary port of the WorkspaceRunner. The problem is that the Summary port fires as soon as the last job has been started; it does not wait for every job to finish. I need to continue only after all jobs are done.