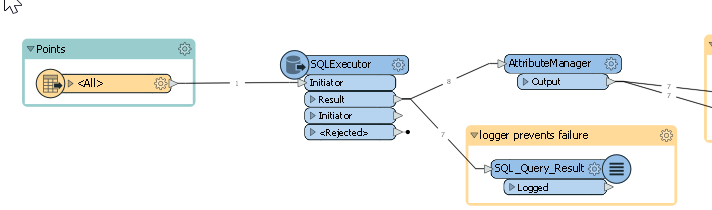

I have a SQLExecutor that uses an ODBC connection and a parameterized query. The parameters are fed by published parameters and by an inbound reader connected to the executor.

Only a few small columns are returned, typically fewer than 200 rows.
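As a rough illustration of this setup (not how FME implements the SQLExecutor), here is a Python sketch that uses the standard-library `sqlite3` module in place of the ODBC connection. The table, its columns, and the `run_executor` helper are hypothetical. Each inbound row triggers one parameterized query that combines a per-row value with a published parameter, and the collected rows stand in for the features leaving the 'Result' port.

```python
import sqlite3

def run_executor(conn, published_params, inbound_rows):
    """Hypothetical analogue of a SQLExecutor: one parameterized query per inbound row."""
    sql = "SELECT id, name FROM items WHERE category = ? AND region = ?"
    results = []
    for row in inbound_rows:
        # Per-row value from the inbound reader plus a published parameter fixed for the run
        cursor = conn.execute(sql, (row["category"], published_params["region"]))
        # Collected rows play the role of features leaving the 'Result' port
        results.extend(cursor.fetchall())
    return results

# Small in-memory table standing in for the ODBC data source (hypothetical data)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER, name TEXT, category TEXT, region TEXT)")
conn.executemany(
    "INSERT INTO items VALUES (?, ?, ?, ?)",
    [(1, "a", "x", "north"), (2, "b", "y", "north"), (3, "c", "x", "south")],
)
print(run_executor(conn, {"region": "north"}, [{"category": "x"}, {"category": "y"}]))
# → [(1, 'a'), (2, 'b')]
```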

- When I run the query with parameters, it fails roughly every other run. Verbose logging gives little detail, just 'Error running translation.', and then the translation terminates.

- When I attach a Logger to the 'Result' port of the SQLExecutor, the translation never fails.

- When I hard-code the query in the SQLExecutor (for testing only, with no parameters), it never fails, unless nothing is attached to the 'Result' port.

- In short: the translation works with a Logger attached to the 'Result' port of the SQLExecutor, with or without parameters; it fails with nothing attached to the 'Result' port, with or without parameters; and with parameters and no Logger attached, it only works occasionally.

I can run the translation in production with a Logger attached, but why should I need to? And why does it fail without one?