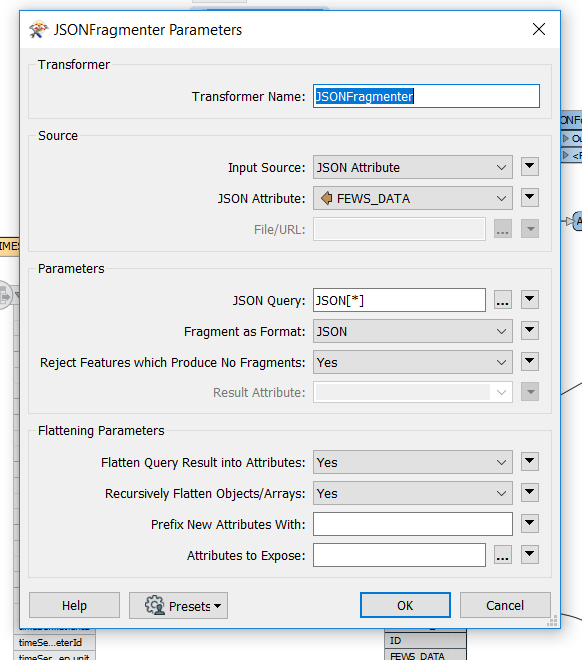

I have a workspace that reads a JSON file with the JSON reader. The reader automatically turns the data array in the JSON into an FME list, which I can then explode and process. I have not had to provide any map of the JSON structure to do this. I now need to read that same JSON text from a column in a database table instead. I have added a SQL Server reader for the table and experimented with passing the attribute into the JSONFlattener and the JSONFragmenter. Neither gives the required result, and I suspect that to use either I would need to describe the entire JSON structure in some way, which is not practical.
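To illustrate the behaviour I am after, here is a rough sketch in plain Python rather than FME. It only shows the idea: parse the JSON text held in a column and get one record per array element, with no schema supplied up front. The column name `json_text`, the array key `data`, and the sample payload are all made up for the example.

```python
import json

# Hypothetical rows as they might come back from the database table.
# The column name "json_text" and the payload are invented for illustration.
rows = [
    {
        "id": 1,
        "json_text": '{"data": [{"name": "a", "value": 1}, {"name": "b", "value": 2}]}',
    },
]


def explode_json_column(rows, column="json_text", array_key="data"):
    """Parse the JSON text in each row and yield one record per array element."""
    for row in rows:
        document = json.loads(row[column])
        # Keep the row's other columns so each element stays linked to its parent.
        parent = {k: v for k, v in row.items() if k != column}
        for index, element in enumerate(document.get(array_key, [])):
            yield {**parent, "element_index": index, **element}


records = list(explode_json_column(rows))
```

Each element of the array becomes its own record carrying the parent row's `id`, which is the equivalent of what the JSON reader followed by a list explode gives me today.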

I've tried a couple of the examples that others have posted, but so far none of them gives me what the JSON reader provides.

Help!

Rob