Hi FME Community,

We have a dynamic FME workbench which iterates through 89 datasets in an Oracle database and inserts all records into the corresponding datasets in a SQL database. Some of the datasets are large (over 4 million records), and the whole truncate-and-load process can take over 24 hours to run. We are looking for ways to speed it up.

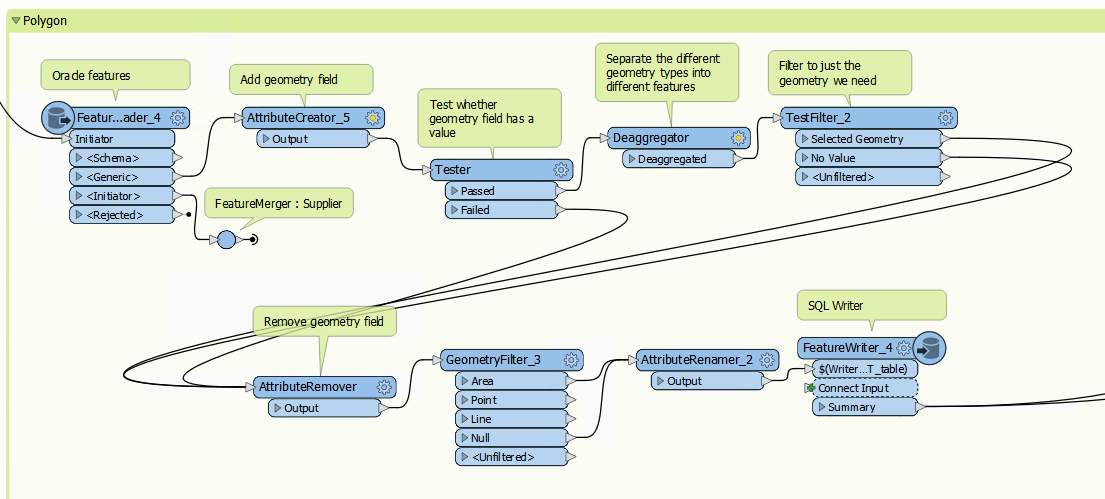

We are currently using a FeatureReader to get the Oracle features, then a few transformers to clean up the data (e.g. where a feature has multiple geometries), then a FeatureWriter to insert the records into the SQL database.
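To make the pattern concrete outside of FME, here is a minimal Python sketch of a truncate-and-load with batched inserts. It is only an illustration and not our actual workbench: in-memory SQLite stands in for both the Oracle source and the SQL target, and the `parcels` table, its columns, the `truncate_and_load` helper, and the batch size of 1000 are all hypothetical.

```python
import sqlite3


def truncate_and_load(src, dst, table, batch_size=1000):
    """Empty `table` in dst, then copy every row from src in batches."""
    # SQLite has no TRUNCATE statement, so DELETE stands in for it here.
    dst.execute(f"DELETE FROM {table}")
    cur = src.execute(f"SELECT id, name FROM {table}")
    total = 0
    while True:
        rows = cur.fetchmany(batch_size)
        if not rows:
            break
        dst.executemany(f"INSERT INTO {table} (id, name) VALUES (?, ?)", rows)
        dst.commit()  # one commit per batch rather than per row
        total += len(rows)
    return total


def make_db(rows):
    """Create an in-memory database with a hypothetical `parcels` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE parcels (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO parcels VALUES (?, ?)", rows)
    conn.commit()
    return conn


source = make_db([(i, f"feature {i}") for i in range(2500)])
target = make_db([(-1, "stale row")])  # leftover from a previous load

copied = truncate_and_load(source, target, "parcels")
remaining = target.execute("SELECT COUNT(*) FROM parcels").fetchone()[0]
print(copied, remaining)
```

The point of the sketch is that rows are written and committed in batches rather than one at a time. Our workbench does the equivalent steps per dataset, with the geometry clean-up in between.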

How can we speed up or otherwise optimize this process? Is there a way to use a SQLExecutor to improve it?