Hi All,

I'm trying to create a workflow that iterates through a folder which contains individual GDBs (e.g. 20 individual GDBs) and then merges the Feature Classes with the same name into a new blank GDB.

Any idea how to make that happen?

Thanks 🙂

Best answer by takashi

Hi @galigis ,

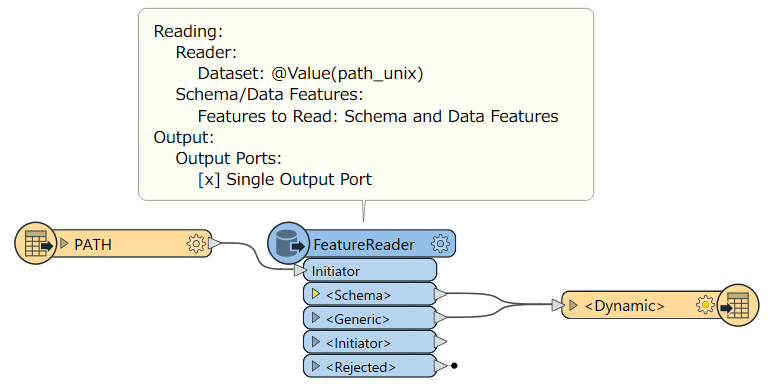

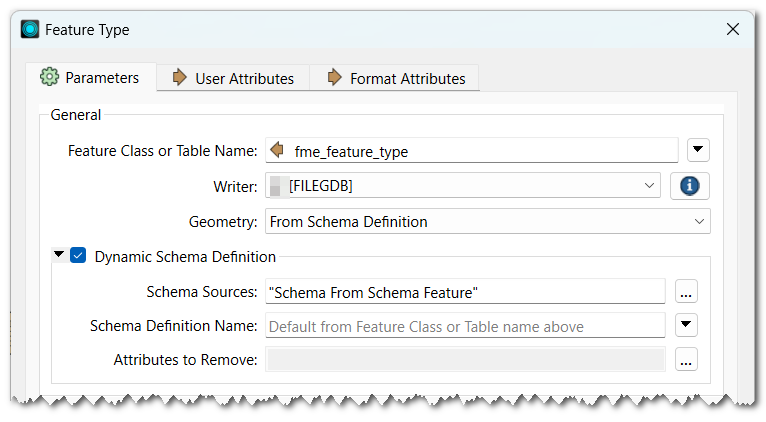

You can read all the *.gdb folder paths from the folder with the Directory and File Pathnames (PATH) reader, read all the features from each gdb dataset with a FeatureReader, and write them into a single gdb dataset with a gdb writer set to Dynamic Schema. Send the schema features output from the <Schema> port of the FeatureReader to the destination writer as schema definition features.

The workflow looks like the screenshots above.
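If it helps to see the grouping logic outside of FME, here is a minimal Python sketch of the same idea: find the *.gdb folders, then group them by feature class name so that same-named feature classes end up together. The function names `find_gdbs` and `group_by_feature_class` are made up for illustration, and `list_layers` is a placeholder for whatever you use to list feature class names in a GDB (for example `fiona.listlayers`, if your GDAL build can read File Geodatabases). It does not read or write any features; that part is what the FeatureReader and the dynamic writer do in the workspace.

```python
from collections import defaultdict
from pathlib import Path
from typing import Callable, Dict, Iterable, List


def find_gdbs(root: Path) -> List[Path]:
    """Return every *.gdb folder directly under root, sorted by name."""
    return sorted(p for p in root.glob("*.gdb") if p.is_dir())


def group_by_feature_class(
    gdbs: Iterable[Path],
    list_layers: Callable[[Path], Iterable[str]],
) -> Dict[str, List[Path]]:
    """Map each feature class name to the GDBs that contain it.

    list_layers is a hypothetical hook: any callable that takes a GDB
    path and returns the feature class names stored in it.
    """
    groups: Dict[str, List[Path]] = defaultdict(list)
    for gdb in gdbs:
        for name in list_layers(gdb):
            groups[name].append(gdb)
    return dict(groups)
```

Each key of the returned dictionary corresponds to one feature class in the merged output GDB, and the list under it names the source GDBs whose features would be written there.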