I have a csv file looking as below

ATT1 ATT2 ATT3 New Attribute Fri Jun 24 08:01:00 2016 1.46676E+12 A Sat Jun 25 08:01:00 2016 1.46684E+12 A Thu Jul 07 08:01:00 2016 1.46788E+12 A Fri Jul 08 08:01:00 2016 1.46796E+12 A Sat Jul 09 08:01:00 2016 1.46805E+12 A Sun Jul 10 08:01:00 2016 1.46814E+12 BMon Jul 11 08:01:00 2016 1.46822E+12 B

Sat Sep 24 08:01:00 2016 1.4747E+12 B

Sun Sep 25 08:01:00 2016 1.47479E+12 B

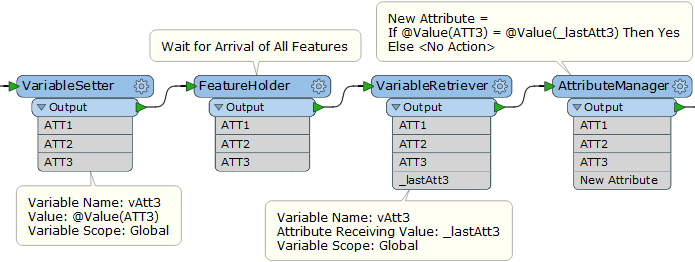

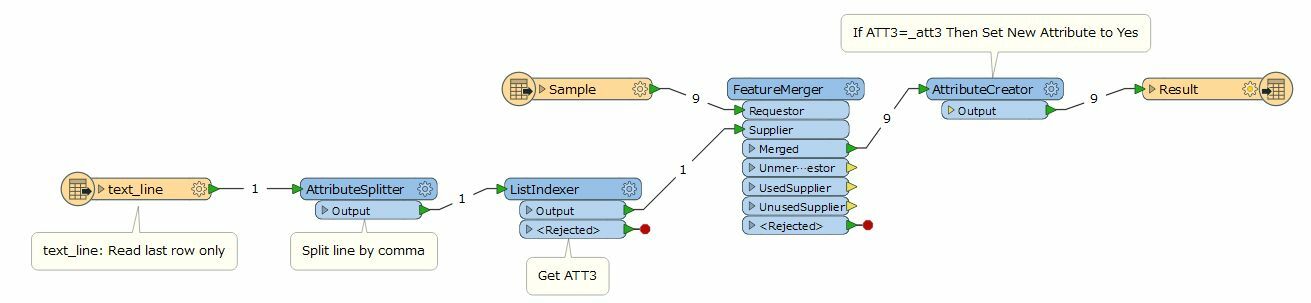

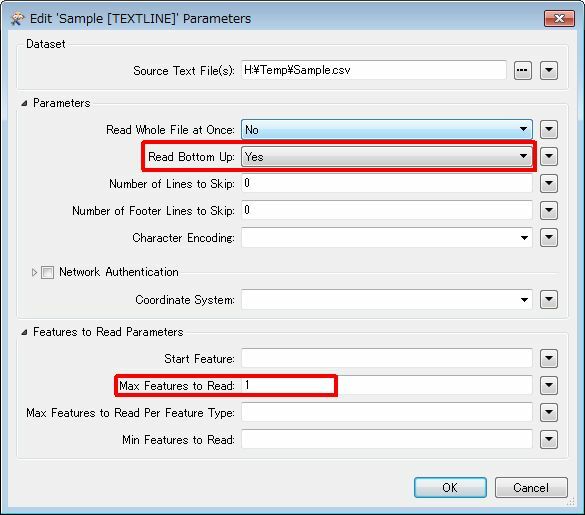

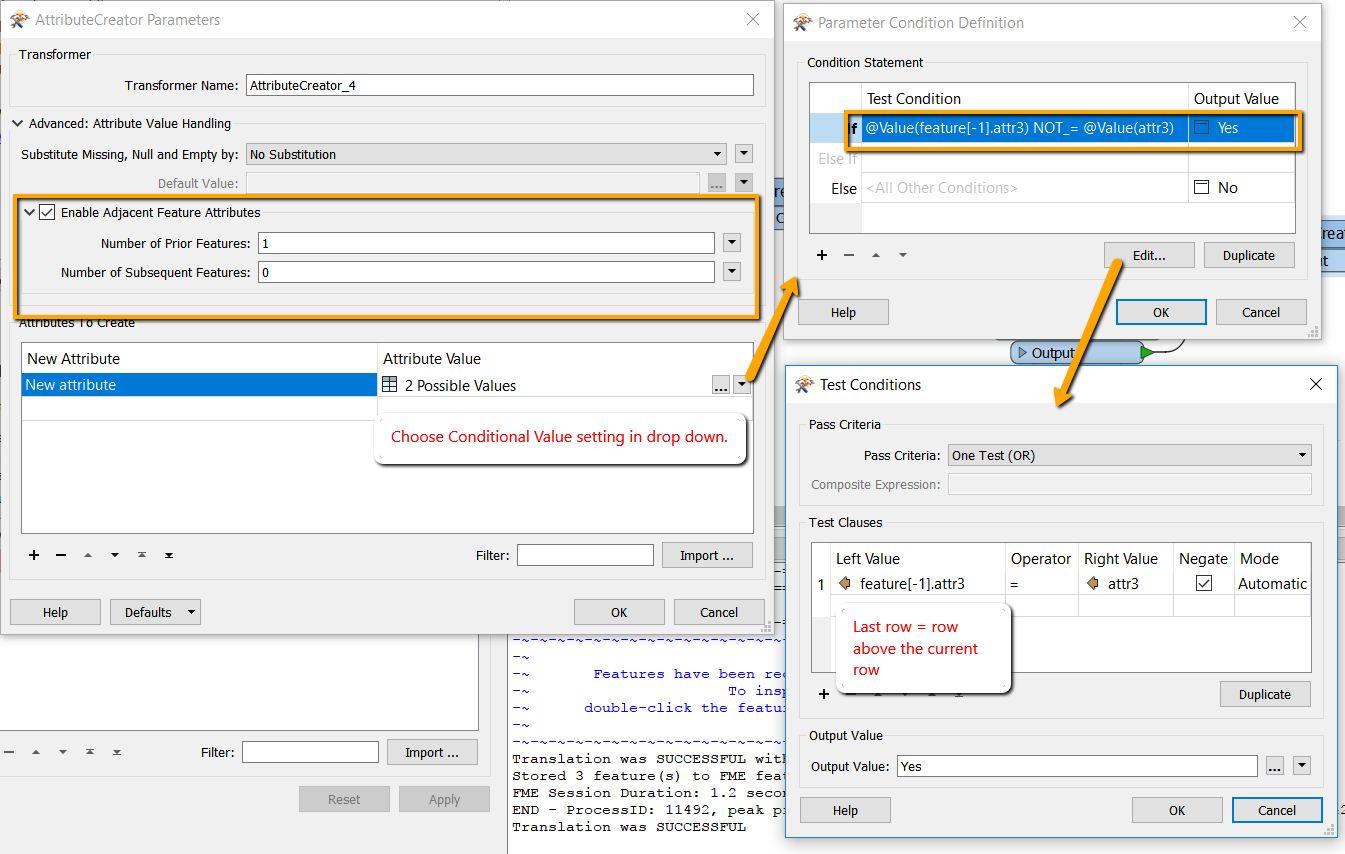

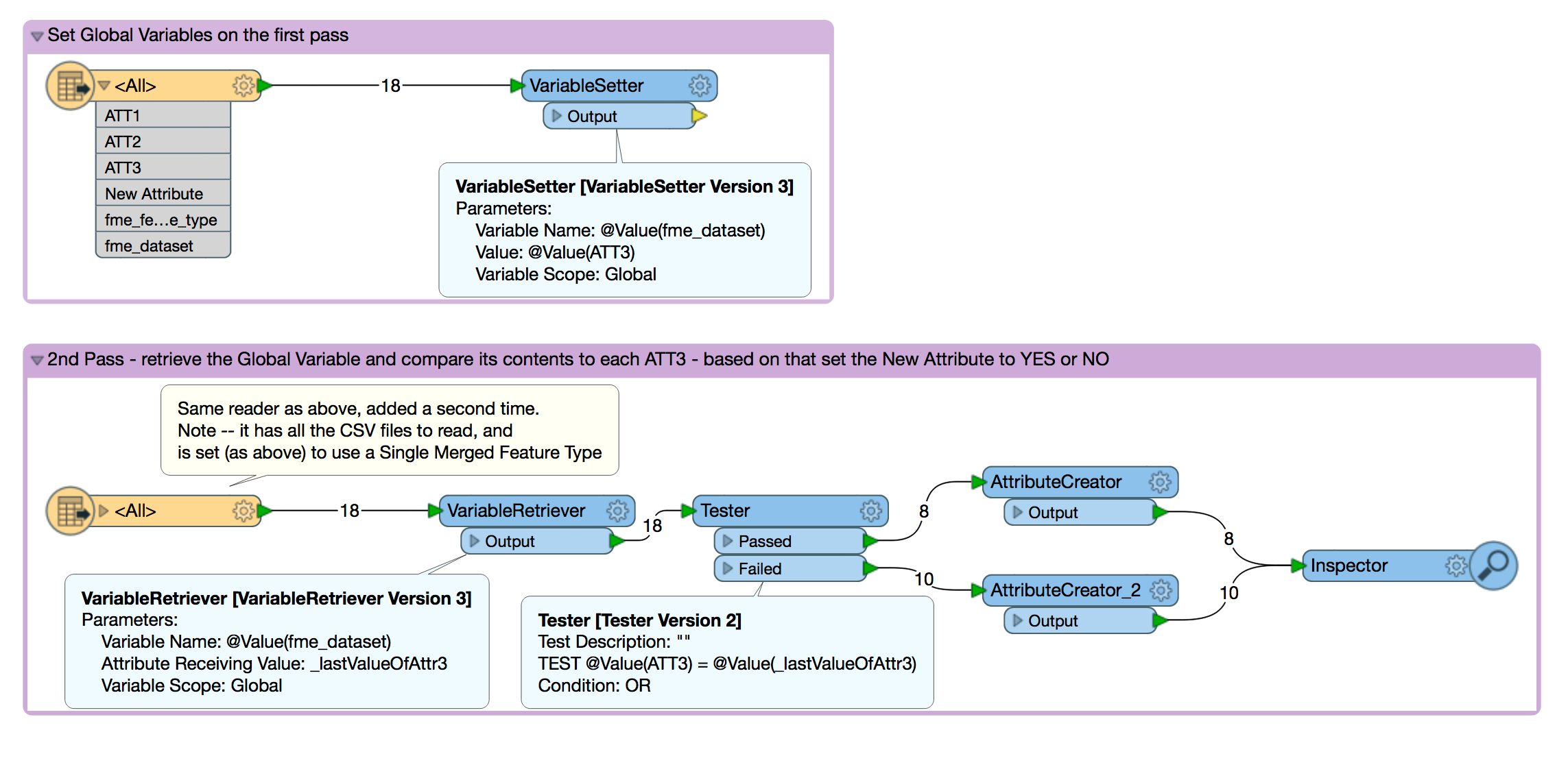

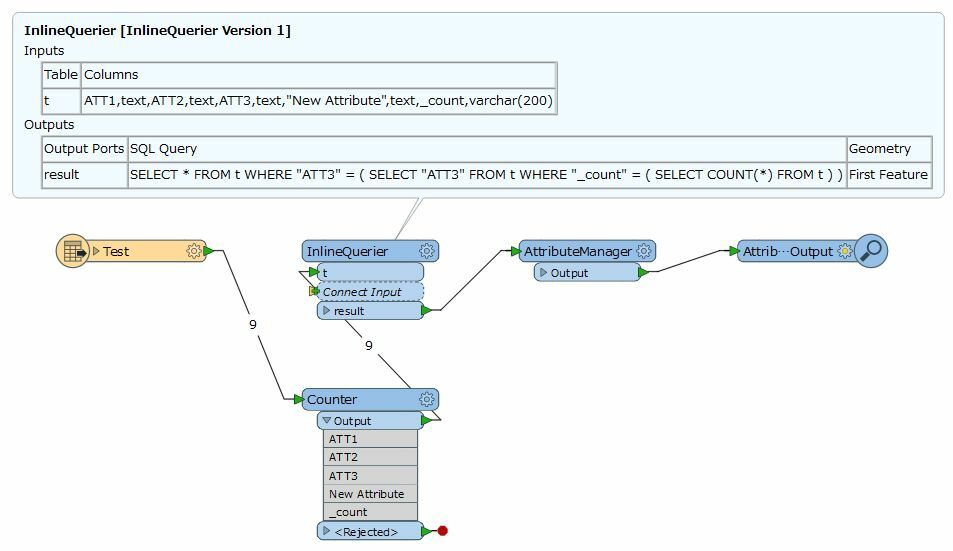

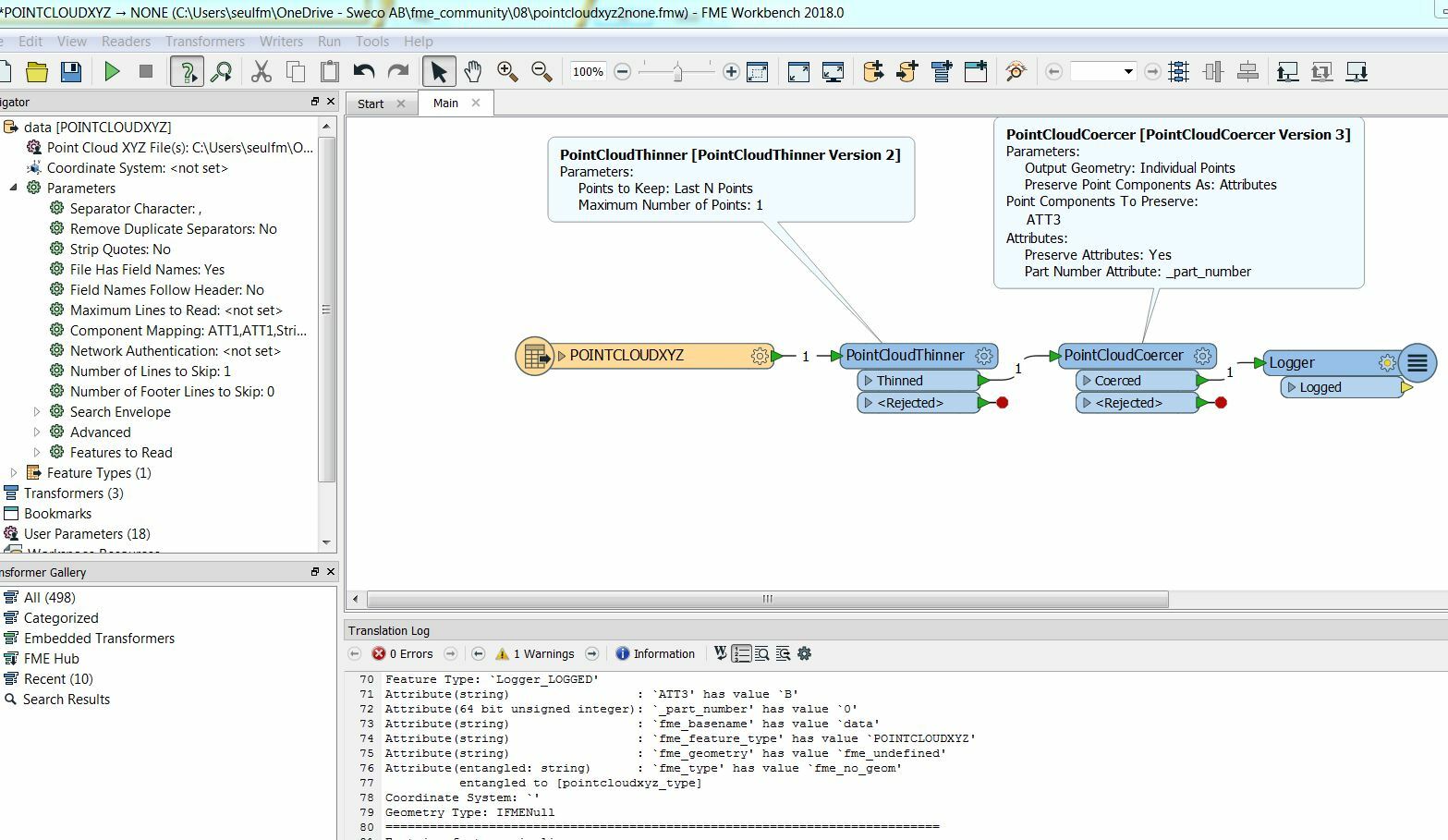

I want to set the "New Attribute" to 'Yes' based on the "ATT3" value of the last row.

Or i want to filter the records based on the ATT3 value of the last row.