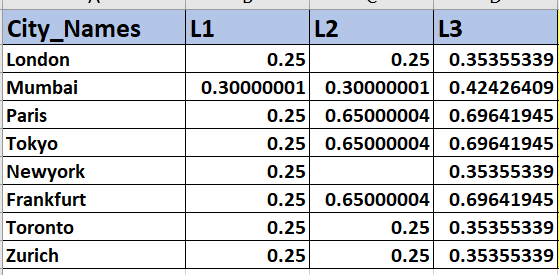

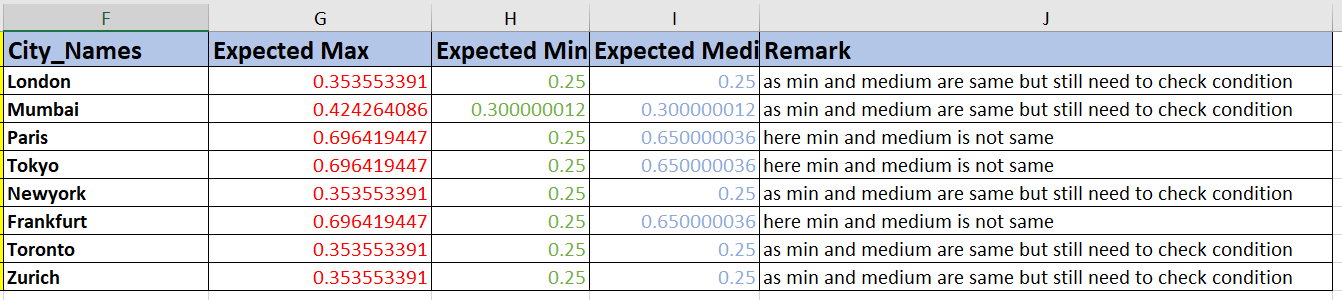

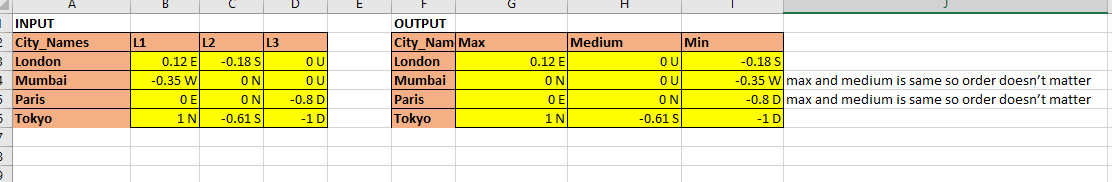

I have data like the following:

| AssetID | X | Y | Z |
|---------|---|---|---|
| A | 0 | 0 | 1 |
| B | 1 | 0 | 0 |
| C | 0 | 1 | 0 |
| D | 1 | 0 | 1 |
| E | 4 | 5 | 6 |
| F | 9 | 8 | 7 |
| G | 6 | 9 | 5 |

I am able to extract the max of each row and the min of each row.

How can I obtain the "medium" (middle) value of each row as simply as possible?

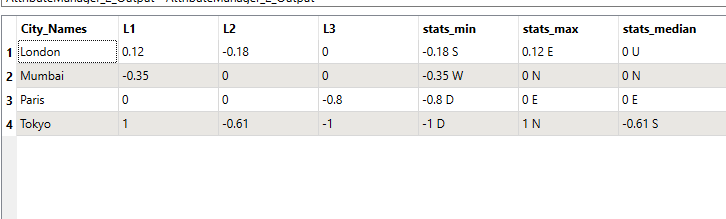

I am using:

`@max(value of x, value of y, value of z)`

`@min(value of x, value of y, value of z)`

What should I use for the medium? (Please note: it is not the average of the 3 values, nor a median of them. I need exactly the same value as it appears in the Excel file.)
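For three values, the middle one is whichever value is neither the largest nor the smallest, so it can be picked out directly. The Python sketch below is only an illustration, not the formula language used above; the function names `middle_by_sort` and `middle_by_arithmetic` are hypothetical. It shows two ways: sorting the three values and taking the one in the middle position, and taking the total minus the max minus the min. Assuming the tool behind `@max`/`@min` supports ordinary addition and subtraction, the second form would translate to something like `value of x + value of y + value of z - @max(...) - @min(...)`.

```python
# Row data from the table above: AssetID -> (X, Y, Z).
ROWS = {
    "A": (0, 0, 1),
    "B": (1, 0, 0),
    "C": (0, 1, 0),
    "D": (1, 0, 1),
    "E": (4, 5, 6),
    "F": (9, 8, 7),
    "G": (6, 9, 5),
}


def middle_by_sort(x, y, z):
    """Hypothetical helper: return the middle of three values.

    Sorting and taking index 1 always returns one of the inputs unchanged,
    so the result is exactly the stored value.
    """
    return sorted((x, y, z))[1]


def middle_by_arithmetic(x, y, z):
    """Hypothetical helper: middle value as total minus largest minus smallest.

    Exact for integers; with non-integer floating-point values the additions
    and subtractions can introduce rounding error in the last digits.
    """
    return x + y + z - max(x, y, z) - min(x, y, z)


if __name__ == "__main__":
    for asset_id, (x, y, z) in ROWS.items():
        print(asset_id, max(x, y, z), min(x, y, z), middle_by_sort(x, y, z))
```

The sorting version guarantees the result is one of the original cell values, which matters if the values are decimals and must match the Excel file exactly. The arithmetic version is the easiest to write with only `@max` and `@min`, and it gives the same answer for the whole-number data shown here.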