Hello.

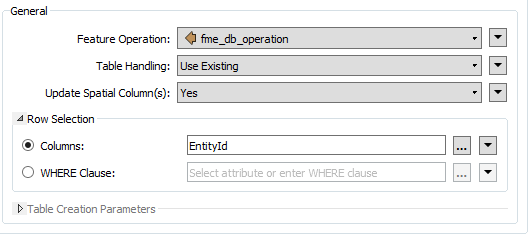

I'm currently using the FeatureWriter to update my file geodatabase. It's processing about 18 features per minute while comparing 13,998 incoming features against the 1.2 million features in the dataset being updated.

I'm testing this process on a group of features that is representative of a typical update in terms of feature count.

Is there any way to speed this up?

Thanks.