Hello everybody,

I hope this is the right place for an FME Realize question, as this is about mobile apps (or is there a Realize subforum I missed?). Otherwise, please don’t hesitate to move this thread.

I am just starting out with FME Realize and noticed some weird behaviour. I am wondering whether it is caused only by GPS position fluctuations or by something else.

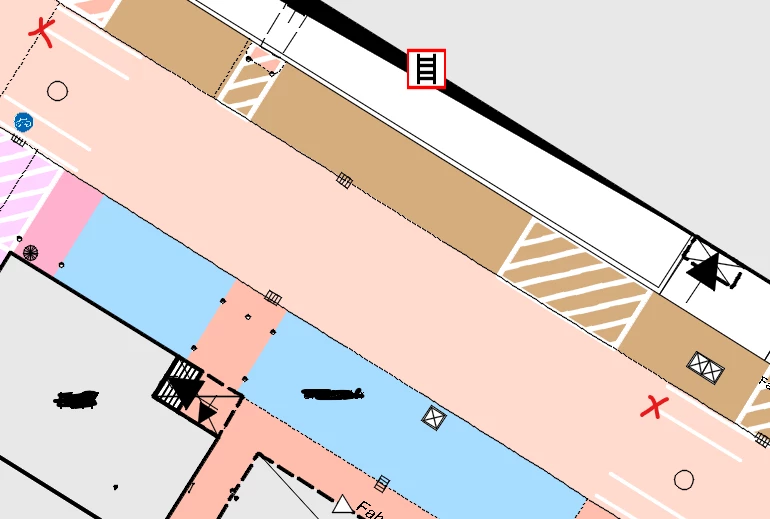

I placed spheres at the two points shown above.

When I align the model on one sphere by rotating/moving it and then lock the model, the other sphere is quite a bit offset.

I doubt the data is the problem, as it was measured by a trusted surveyor.

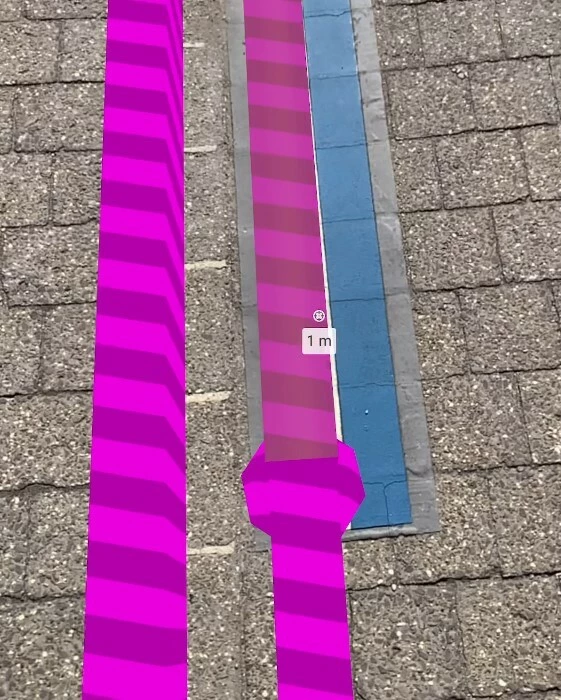

When I then walk over to the point where the other sphere is located, it looks like this:

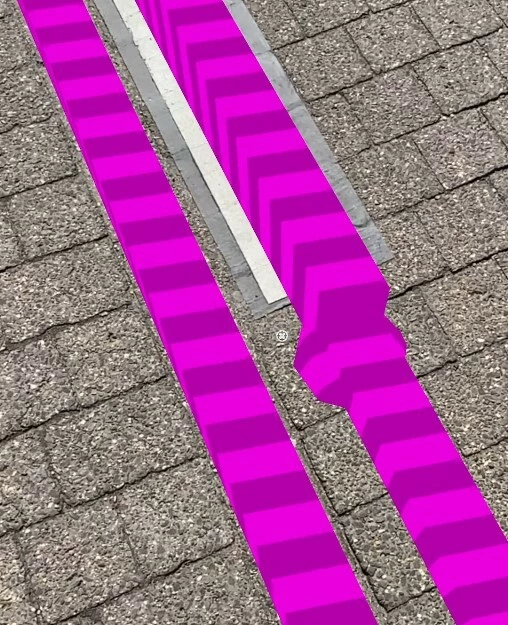

Weirdly, when I then go back to the first sphere, it is also offset.

I tried several coordinate systems and switched the anchor/georef. anchor on and off, but it is never correct. At the moment I am using scale-free UTM ETRS89 (EPSG:25832), though that shouldn't matter, as my area is only about 50 m across.
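A quick back-of-the-envelope check of why the projection choice shouldn't matter at this size, assuming the only difference between regular and scale-free UTM is the standard central-meridian scale factor k0 = 0.9996 (the function name is just for illustration):

```python
# Rough check: how much would a distance change if the UTM scale factor
# were applied (or not applied) by mistake?
# Assumption: the standard UTM central-meridian scale factor k0 = 0.9996.
K0_UTM = 0.9996


def scale_error_m(distance_m: float, k: float = K0_UTM) -> float:
    """Length difference (metres) between a distance and the same distance scaled by k."""
    return abs(distance_m - distance_m * k)


print(f"{scale_error_m(50.0) * 100:.1f} cm over 50 m")
```

That comes out at roughly 2 cm across the whole 50 m area, so a scale-factor mix-up alone shouldn't explain a clearly visible offset.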

Also, even though the model is locked, it makes weird jumps from time to time.

Has anyone else noticed something like this, or does anyone have suggestions for what I could test?

It feels like the offset starts once I move more than about 3 meters. It is odd, though, that the spheres are offset in just one direction rather than being completely off, as I would expect from GPS error.
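One hypothesis I could test for the "one direction" pattern: if the heading (rotation) is slightly wrong when I align the model on the first sphere, the whole model rotates about that sphere, so the second sphere shifts sideways by roughly distance × angle, always to the same side. This is only a sketch under that assumption; the points and the 2° error below are made-up values, not my survey data:

```python
import math


def offset_from_heading_error(anchor, target, heading_error_deg):
    """Offset of `target` if the model is rotated by heading_error_deg about `anchor`.

    Points are (east, north) tuples in metres; returns the (dE, dN) shift.
    Hypothetical helper, purely for illustration.
    """
    theta = math.radians(heading_error_deg)
    de, dn = target[0] - anchor[0], target[1] - anchor[1]
    # Rotate the anchor->target vector counter-clockwise by theta
    re = de * math.cos(theta) - dn * math.sin(theta)
    rn = de * math.sin(theta) + dn * math.cos(theta)
    return re - de, rn - dn


# Hypothetical: second sphere 20 m east of the first, 2 degree heading error
dE, dN = offset_from_heading_error((0.0, 0.0), (20.0, 0.0), 2.0)
print(f"offset: {math.hypot(dE, dN):.2f} m")
```

With these numbers the second sphere would be off by about 0.70 m, mostly sideways, and the shift grows linearly with distance from the anchor, which would fit an offset that appears once I walk a few meters away. Random GPS noise, by contrast, would not consistently push everything in one direction.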