I'm able to set up and use "OpenAICompletionsConnector" successfully with text-davinci-003, but since the Completions API models will be deprecated at the beginning of 2024, I wanted to try Chat Completions with gpt-3.5-turbo as described here.

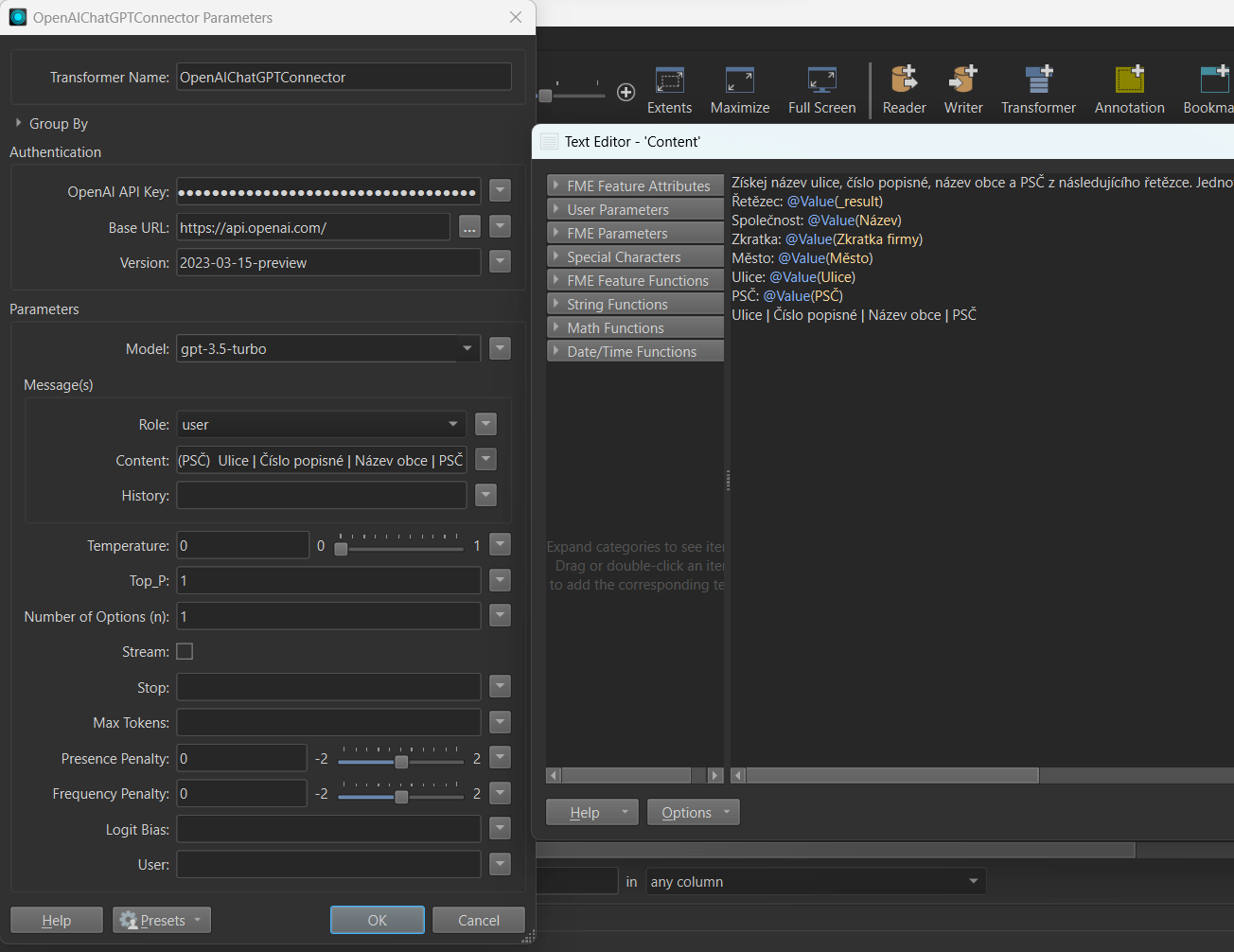

I understand that I should use another transformer called "OpenAIChatGPTConnector". I use the role "user", and as "content" I use the same prompt as for Completions, which resolves poorly structured addresses. With this setup the connector somehow aggregates all the features from the input port and sends them as one prompt, returning only one result. I need to resolve each row separately, i.e. send the whole prompt for every feature or, if the chat works with history, send the prompt once and then just the details for every row (feature). The important thing is that I need a result for every row (feature).
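To make the behaviour I'm after concrete, here is a minimal Python sketch written outside of FME. It is not how the transformer works internally, only an illustration of "one request per feature" against the Chat Completions endpoint. The `INSTRUCTION` text and the function names `build_payload`, `resolve_rows` and `send_to_openai` are placeholders I made up for this example.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

# Placeholder instruction; the real prompt is the one already used with Completions.
INSTRUCTION = "Resolve the following address into street, city and postal code:"


def build_payload(address, model="gpt-3.5-turbo"):
    """Build one chat request body for a single row (feature)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": f"{INSTRUCTION}\n{address}"}],
    }


def resolve_rows(addresses, send):
    """Call `send` once per row so that every row gets its own result."""
    return [send(build_payload(address)) for address in addresses]


def send_to_openai(payload, api_key):
    """POST one payload to the Chat Completions endpoint and return the reply text."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a valid key this would be called as `resolve_rows(rows, lambda p: send_to_openai(p, api_key))`, which returns a list with one answer per input row. That is the result I would like to get from the transformer.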

Any ideas for the setup?

Thanks