Here is my use case:

I have created a workflow that works well and can handle multiple files. However, when I publish this workflow to FME Flow, I have the following problem when I copy and paste multiple files at once:

- Copy and paste two files at once.

- The resource or network directory sees the change and triggers the same workflow twice (see https://community.safe.com/s/article/Do-the-Directory-modified-Automation-triggers-support-single-events-for-multiple-file-datasets)

- The first workflow runs fine and writes all my data to a database

- The second workflow (which is the same, just triggered a few milliseconds later) fails because of a "UNIQUE KEY constraint violation".

This is a bit annoying, and I am looking for another solution. One option might be to run the workflow file by file, but how is that possible with FME Flow?

Does anyone have a recommendation on how to solve this problem?

*****************

Update, 2023-10-26

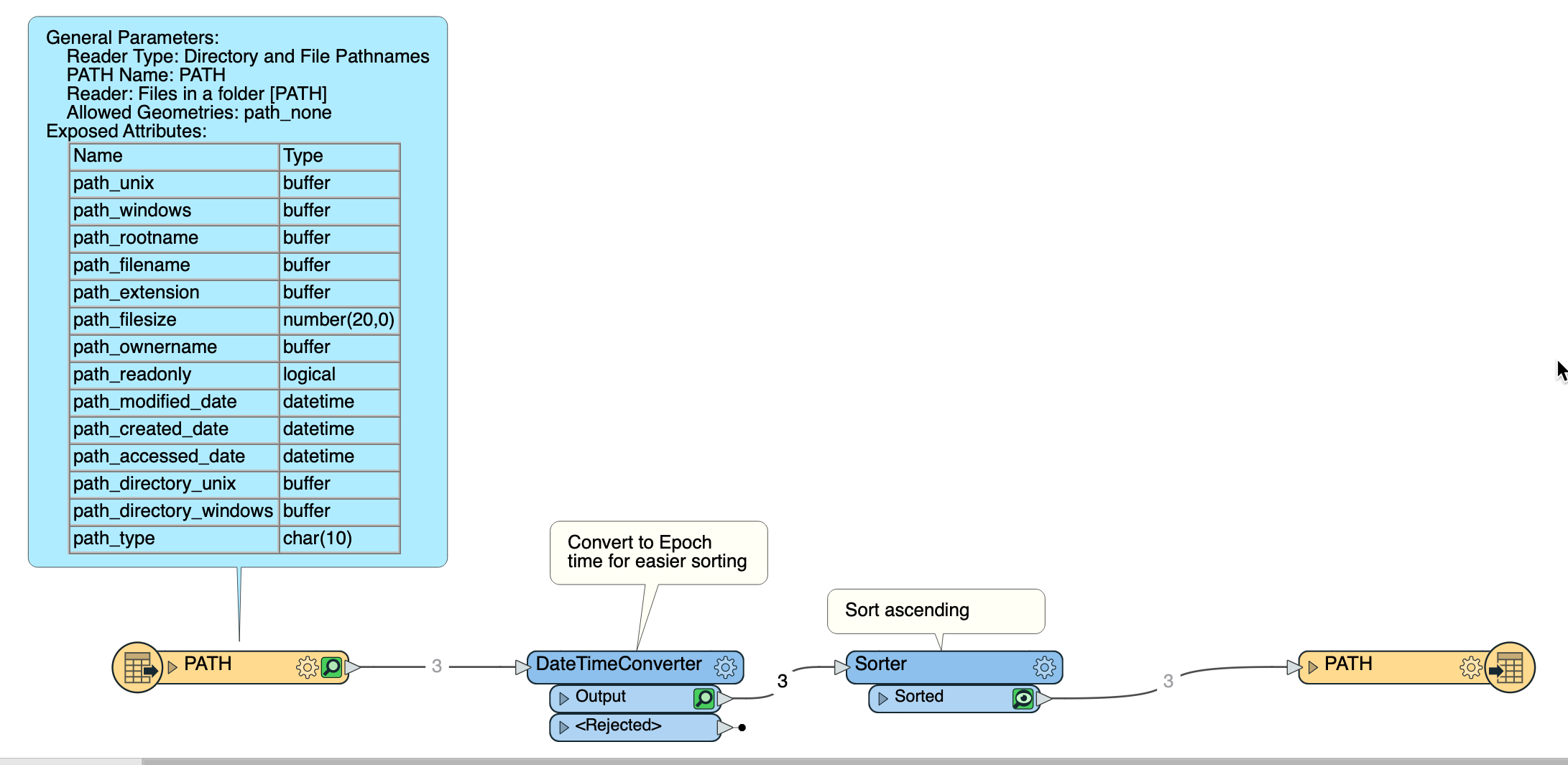

How should I proceed if FME Flow needs to process the files in a defined order? I would like FME to start with the oldest file, then the second oldest, and so on. For example, when I copy 4 files into a folder at the same time, FME currently picks them up in no particular order (neither newest to oldest nor the other way round).

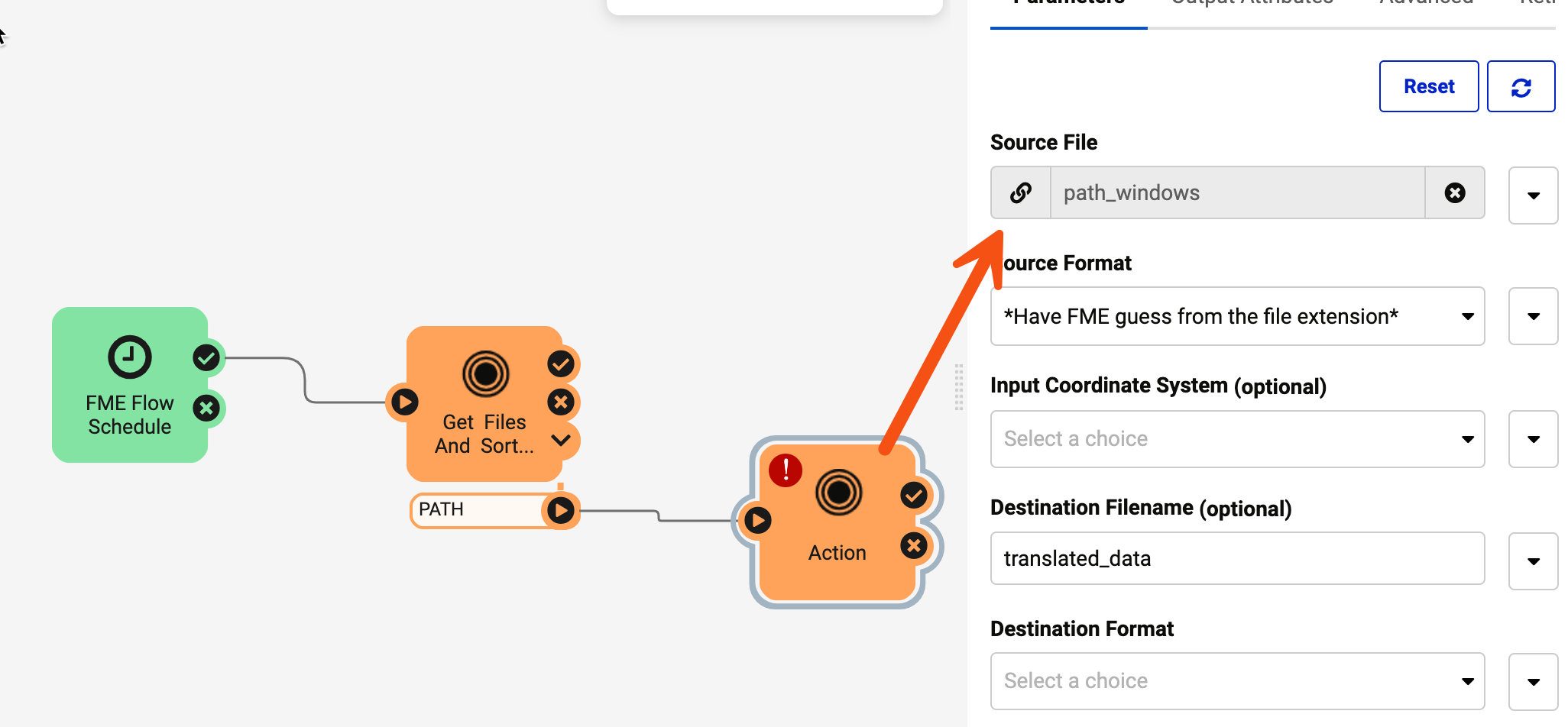

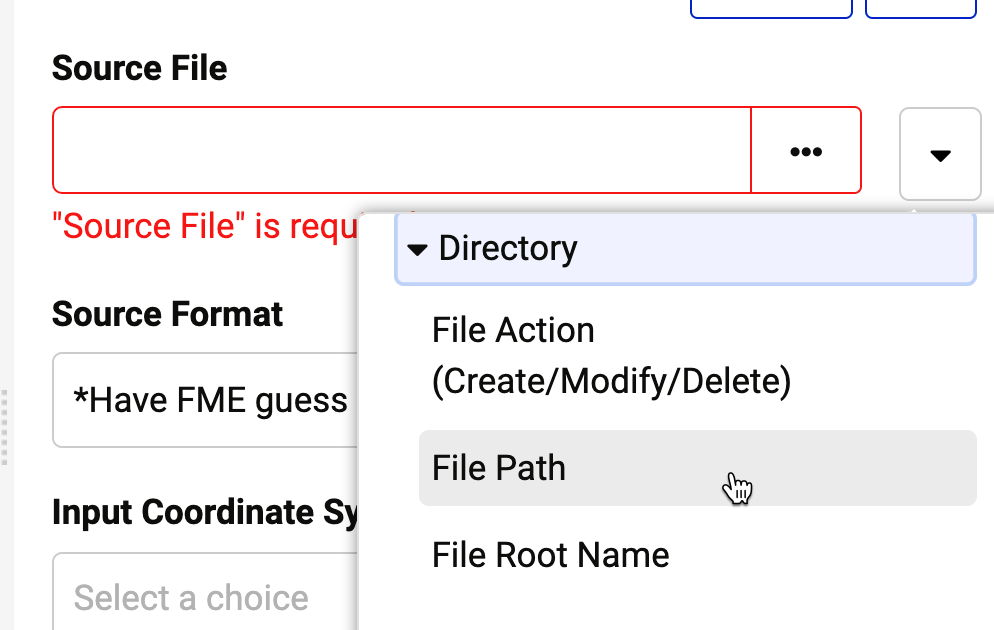

This will sort your files by the time they arrive, and the Automations writer can be used to pass the filenames into the following workspace through the automation.
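For anyone who prefers to see the ordering logic spelled out, here is a minimal sketch of "oldest first" in plain Python. It does not use any FME API. The function name `oldest_first` and the `pattern` parameter are made up for illustration, and it assumes that "oldest" means the earliest file modification time, which may not match the arrival time in every copy scenario.

```python
from pathlib import Path


def oldest_first(folder, pattern="*"):
    """Return the files in `folder` matching `pattern`, oldest first.

    Assumption: "oldest" is judged by the file's modification time.
    """
    files = [p for p in Path(folder).glob(pattern) if p.is_file()]
    # Sort by (modification time, name) so that files with identical
    # timestamps still come out in a repeatable order.
    return sorted(files, key=lambda p: (p.stat().st_mtime_ns, p.name))


if __name__ == "__main__":
    # Example: list the files of the current directory, oldest first.
    for path in oldest_first("."):
        print(path.name)
```

Something like this could probably be adapted inside a workspace (for example in a Python-based transformer), but that part is untested and only a suggestion.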
