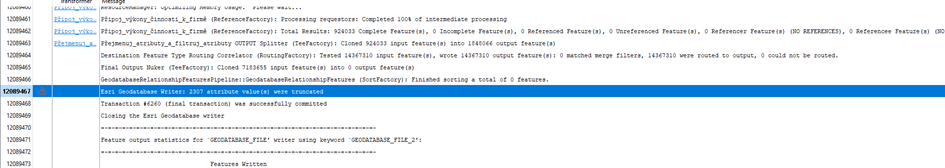

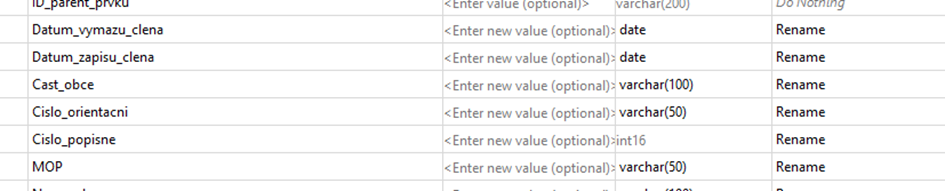

I want to write to an existing FGDB, and my translation log shows "Esri Geodatabase Writer: XXX attribute value(s) were truncated" (in my case, XXX is 500+). As far as I can tell, the attribute values being written and their data types are fine. How can I determine which attribute values are being truncated, and why? I can't find any further information on this.
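One possible way to narrow this down is to compare each outgoing text value against the declared length of its target field. The sketch below assumes that the truncation comes from text values longer than the field length in the existing FGDB schema. It does not cover other possible causes such as numeric precision or encoding. `FIELD_WIDTHS`, the sample rows, and `find_truncations` are hypothetical placeholders. In practice, the widths would be read from the target feature class schema and the rows would be the features being written.

```python
# Hypothetical sketch: flag text values longer than the target field's length.
# FIELD_WIDTHS and the sample rows are illustrative assumptions, not read from
# a real geodatabase; replace them with the actual target schema and features.
from typing import Dict, List, Tuple


def find_truncations(
    rows: List[Dict[str, object]], field_widths: Dict[str, int]
) -> List[Tuple[int, str, int, int]]:
    """Return (row index, field name, value length, field width) for each
    string value that would not fit into its target text field."""
    hits = []
    for i, row in enumerate(rows):
        for field, width in field_widths.items():
            value = row.get(field)
            # Only text values are checked; non-strings and missing fields are skipped.
            if isinstance(value, str) and len(value) > width:
                hits.append((i, field, len(value), width))
    return hits


FIELD_WIDTHS = {"NAME": 10, "CODE": 3}  # assumed target field lengths
rows = [
    {"NAME": "Short", "CODE": "AB"},
    {"NAME": "A much longer name", "CODE": "ABCD"},
]

for i, field, length, width in find_truncations(rows, FIELD_WIDTHS):
    print(f"row {i}: {field} has {length} chars, field width is {width}")
```

Listing the offending row and field this way makes it easier to decide whether to widen the field in the FGDB or shorten the values before writing.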