Hey FME'ers. I haven't been online much recently (I've been busy updating the FME training materials for 2017), but I wanted to throw out a new idea that is part challenge, part crowdsourcing.

I came up with the idea of an FME-driven service for assessing workspaces against best practices. I've just discovered I wasn't the first to think of this, which goes to show there are no new ideas any more!

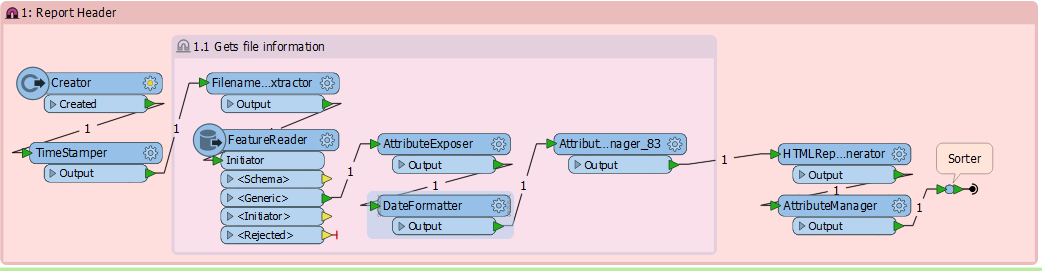

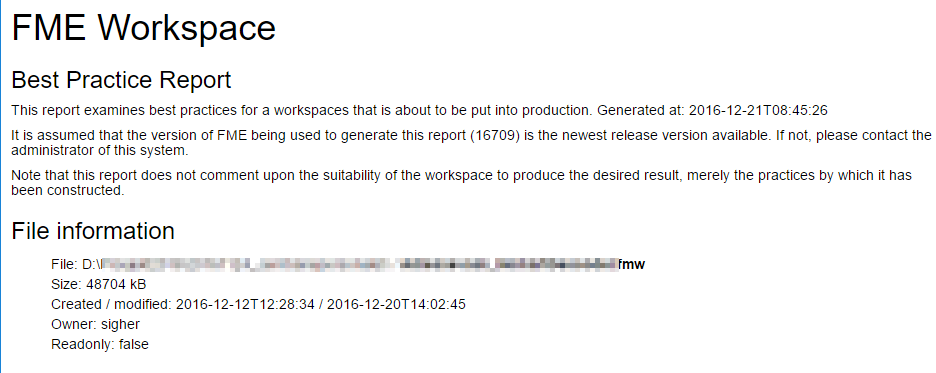

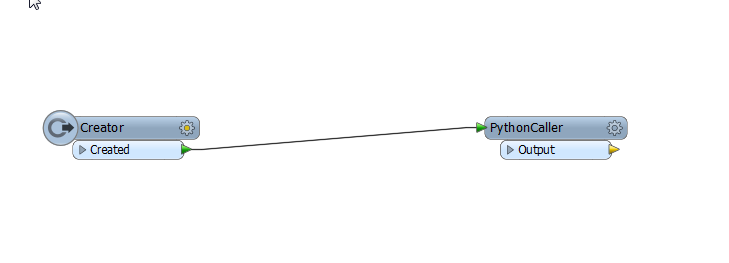

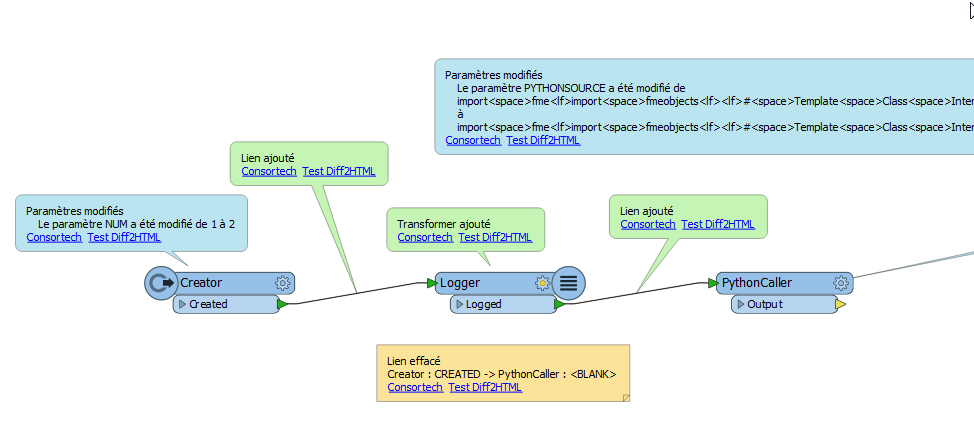

Anyway, in my spare time I've been putting together a workspace that uses the FMW reader to test other workspaces against best practices. I've built about 20 different tests so far and can think of quite a few more. But I thought it would be great if we, the FME community, could work together to see this idea through to completion.

So, this is an open invite to take part in this project. I think there are a number of different ways you could contribute:

- Develop a best practices test and add it to the project workspace

- Test the project workspace to look for faults or possible enhancements

- Improve the output style (it's currently fairly basic HTML)

- Suggest some ideas for tests that we haven't yet thought of or implemented

- Use the project to assess your own workspaces - and let us know what you think

I originally shared all the files in a folder on Dropbox (update: they are now on GitHub instead). Everyone in the FME community is welcome to access them and use the contents however you like.

If you want to contribute a test, get a feel for the workspace style first, pick a test that hasn't been done yet, and go for it. I haven't done any tests around transformers yet, so there is still a lot to do, and there may be reader/writer tests I haven't thought of. Please make a copy of the workspace rather than editing the original, since we don't have proper revision control (yet). I'd like to keep the workspace in FME 2016.1 or earlier for the moment, so that no-one has to install a beta version.

I'm also open to any other ideas about how to run this project and how best to collaborate on it. As far as I know, there's never been a crowdsourced FME project before!

My end goal is to get this online and hosted in FME Cloud, so we can make a proper web service out of it. My idea is that everyone who contributes would get recognition on the web page (and a custom KnowledgeCentre badge of course)!

So, let me know what you think, and if you'd like to contribute, please jump in.

Mark