Hi.

I just had a job on our FME Server 2022.2.2 go haywire.

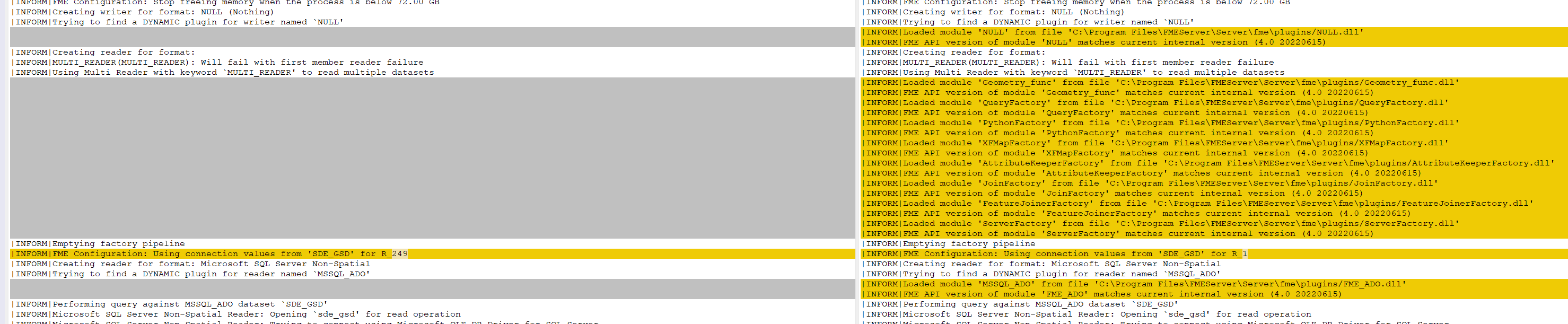

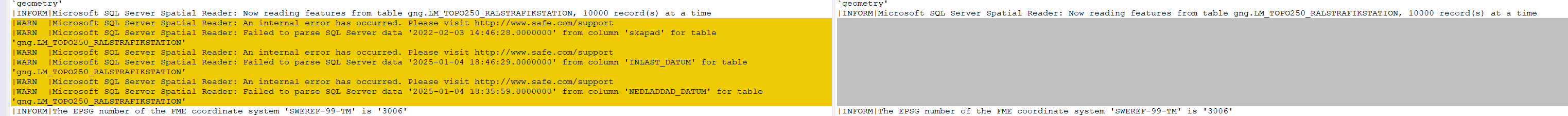

When reading from one or more database tables, it suddenly spews out a _lot_ of error messages, repeating the same two lines from line 319 to line 865997 (on page 1734):

Microsoft SQL Server Spatial Reader: An internal error has occurred. Please visit http://www.safe.com/support

Microsoft SQL Server Spatial Reader: Failed to parse datetime, attribute set as null

What gives? Should I be concerned?

I've occasionally encountered huge log files before, but haven't had any idea why. Next time I'll see if it's the same issue.
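To compare logs next time, something like the following could tally which messages repeat most in a downloaded log file. It is only a minimal sketch: `tally_messages` is a made-up helper name, and it assumes the log is plain text where any timestamp/severity prefix is separated from the message by `|` characters. Lines without a `|` are counted as-is.

```python
from collections import Counter


def tally_messages(lines, top=5):
    """Return the `top` most frequent log messages as (message, count) pairs."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        # Assumption: the message is whatever follows the last "|";
        # a line with no "|" is treated as the message itself.
        message = line.rsplit("|", 1)[-1].strip()
        counts[message] += 1
    return counts.most_common(top)


# Example usage (the file name is a placeholder):
# with open("job.log", encoding="utf-8", errors="replace") as f:
#     for message, count in tally_messages(f):
#         print(count, message)
```

Reading the file line by line keeps memory use low even when the log runs to hundreds of thousands of lines.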

The job took several minutes (as opposed to normally a handful of seconds) to finish, which is not good.

Cheers.