Does anyone know if it is possible to clip a point cloud to a boundary when reading it into a workspace? I often need to work with only part of a point cloud file, and my full files are frequently up to 10 GB (in LAS format), though usually smaller. I'm also reading up to 100 similarly sized files at once.

Basically, I would like to read only the section of a point cloud that falls inside a boundary, to save time, computing power, and memory. If this isn't possible, could I thin the data as it is read instead, so that less is loaded? I'd like to avoid having to use an external program to clip or thin first if at all possible, which is what I already do.

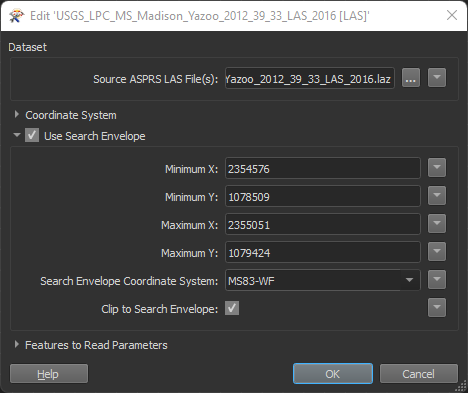

You can also choose to use a FeatureReader instead of a "classic" reader, so that the extents of the envelope can be set from attributes created beforehand.
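
To make the idea concrete, here is a minimal Python sketch of what clipping to an envelope (and optionally thinning) during a streamed read amounts to. It is not how FME implements this internally; the function name `clip_and_thin`, its parameters, and the plain `(x, y, z)` tuples are all assumptions made up for illustration. Points are tested one at a time against the envelope, so the full cloud never has to sit in memory.

```python
from typing import Iterable, Iterator, Tuple

Point = Tuple[float, float, float]  # hypothetical (x, y, z) record


def clip_and_thin(
    points: Iterable[Point],
    min_x: float,
    min_y: float,
    max_x: float,
    max_y: float,
    keep_every: int = 1,
) -> Iterator[Point]:
    """Yield only points inside the envelope, keeping every Nth of those.

    Illustrative only: `points` can be any iterable (for example a
    generator that reads a file in pieces), so nothing is loaded up front.
    """
    if keep_every < 1:
        raise ValueError("keep_every must be >= 1")

    inside = 0  # number of points seen so far that fall in the envelope
    for x, y, z in points:
        if min_x <= x <= max_x and min_y <= y <= max_y:
            # Thinning: keep the 1st, (N+1)th, (2N+1)th ... point inside.
            if inside % keep_every == 0:
                yield (x, y, z)
            inside += 1


if __name__ == "__main__":
    sample = [(0.0, 0.0, 1.0), (5.0, 5.0, 2.0), (11.0, 5.0, 3.0), (6.0, 6.0, 4.0)]
    for point in clip_and_thin(sample, 0.0, 0.0, 10.0, 10.0):
        print(point)
```

With a FeatureReader, the four envelope values would come from attributes on the initiating feature rather than from hard-coded numbers as in this sketch.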
