Hi, I asked about the Parquet format yesterday and got great feedback on my problem (https://community.safe.com/s/feed/0D54Q00008VE8CZSA1)

Right now I have a bigger problem, and I think my knowledge of this topic is too limited to solve it.

I have to connect to Azure Blob Storage (a data lake) using SAS authentication. The SAS key contains these permissions:

ss=bfqt

srt=sco

sp=rl

So I can list and read files, and the signed resource types are Service, Container, and Object.
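To double-check how I read the key, here is a minimal sketch that decodes the three fields above. It assumes the standard single-letter codes for an account SAS; `describe_sas` is just a helper name I made up for illustration, not part of FME or any Azure SDK.

```python
from urllib.parse import parse_qs

# Letter codes used in an Azure account SAS query string.
SERVICES = {"b": "Blob", "f": "File", "q": "Queue", "t": "Table"}
RESOURCE_TYPES = {"s": "Service", "c": "Container", "o": "Object"}
PERMISSIONS = {
    "r": "Read", "w": "Write", "d": "Delete", "l": "List",
    "a": "Add", "c": "Create", "u": "Update", "p": "Process",
}


def describe_sas(token: str) -> dict:
    """Decode the ss, srt and sp fields of a SAS token (hypothetical helper)."""
    params = parse_qs(token.lstrip("?"))

    def decode(key: str, table: dict) -> list:
        letters = params.get(key, [""])[0]
        # Unknown letters are reported rather than silently dropped.
        return [table.get(ch, f"unknown({ch})") for ch in letters]

    return {
        "services": decode("ss", SERVICES),
        "resource_types": decode("srt", RESOURCE_TYPES),
        "permissions": decode("sp", PERMISSIONS),
    }


info = describe_sas("ss=bfqt&srt=sco&sp=rl")
print(info)
```

For my key this reports Read and List only, on all four services and all three resource types.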

With these parameters I can see the db and the Parquet files, and I can also download files via Chrome (link + SAS).

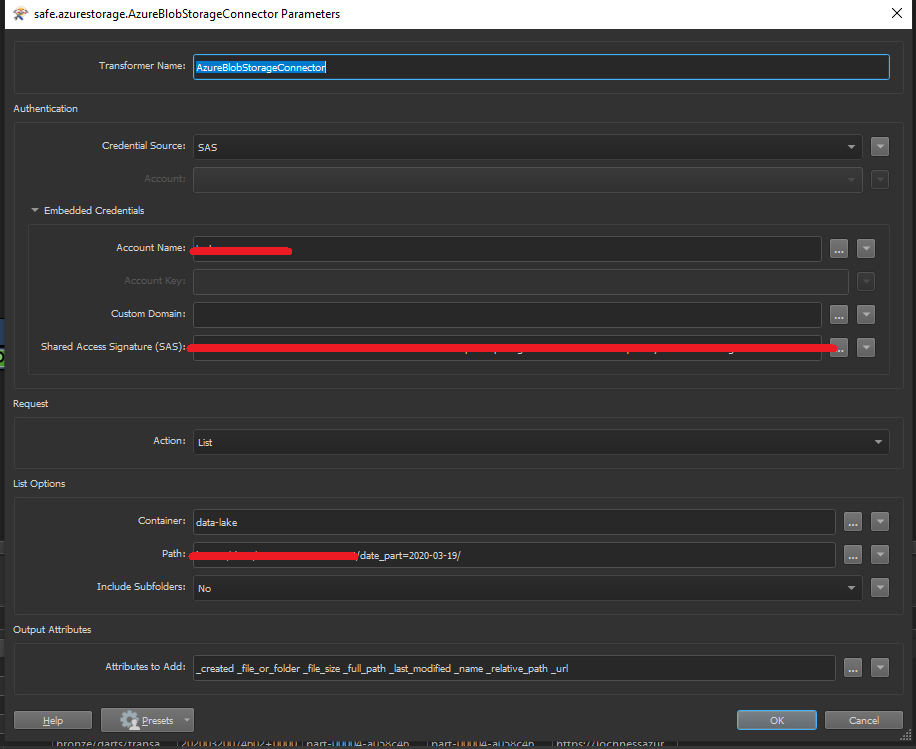

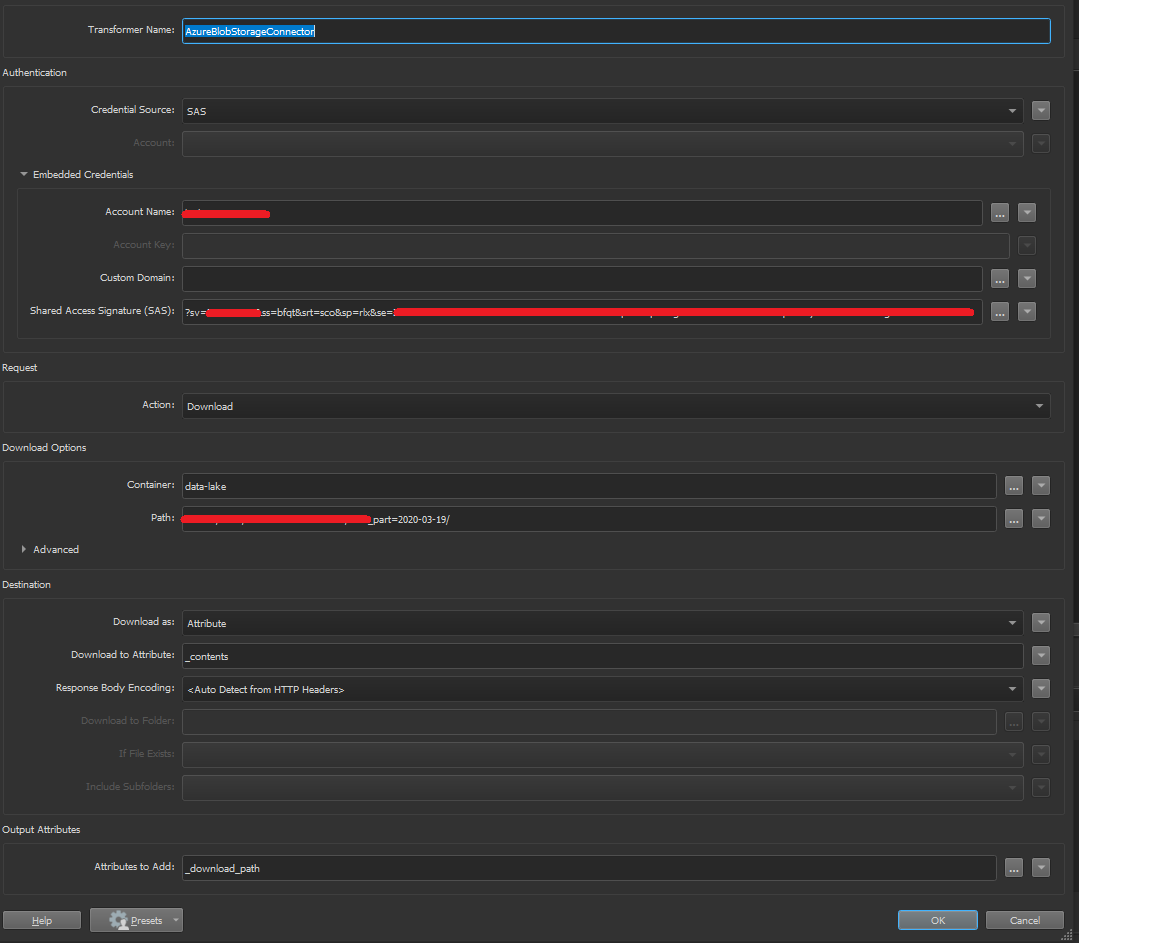

But when I want to get only the attributes (without downloading the files, because the data is too big), I use parameters like these:

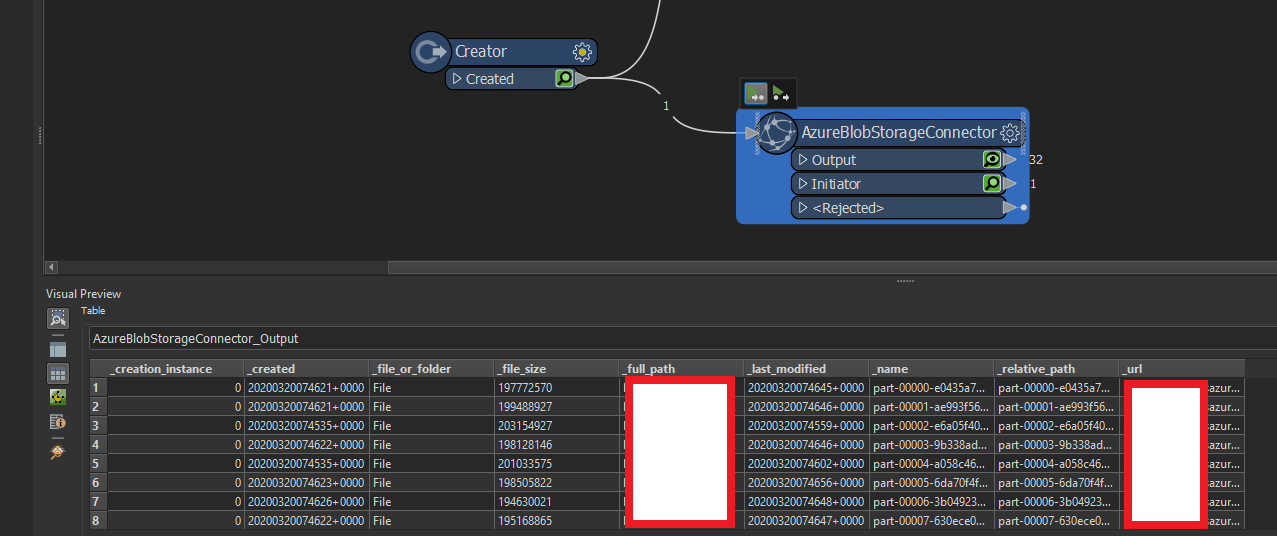

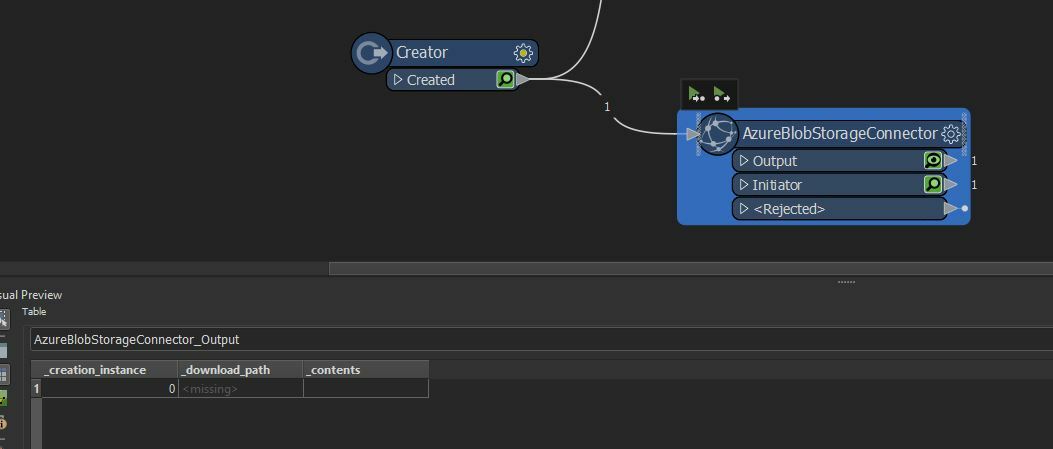

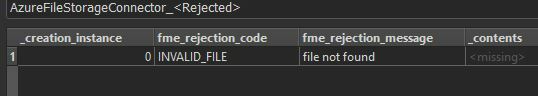

and I get empty results:

My question:

Is it possible to get only the attributes of each Parquet file that exists in Azure?

Or is downloading the data required?
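As background for this question: a Parquet file keeps its schema in a footer at the end of the file, and the last 8 bytes are a 4-byte little-endian footer length followed by the magic `PAR1`. So in principle only the tail of each file is needed. The sketch below only locates the footer; `footer_range` and `http_read_range` are hypothetical helper names, and I am assuming the blob URL (with SAS appended) answers HTTP `Range` requests. I do not know whether the FME reader works this way.

```python
import struct
import urllib.request
from typing import Callable, Tuple

PARQUET_MAGIC = b"PAR1"


def footer_range(file_size: int,
                 read_range: Callable[[int, int], bytes]) -> Tuple[int, int]:
    """Return (offset, length) of the footer metadata of a Parquet file.

    read_range(offset, length) must return exactly those bytes of the file.
    """
    tail = read_range(file_size - 8, 8)
    if len(tail) != 8 or tail[4:] != PARQUET_MAGIC:
        raise ValueError("not a Parquet file (missing PAR1 trailer)")
    (footer_len,) = struct.unpack("<I", tail[:4])
    return file_size - 8 - footer_len, footer_len


def http_read_range(url: str) -> Callable[[int, int], bytes]:
    """Build a read_range callable that uses HTTP Range requests (assumption)."""
    def read_range(offset: int, length: int) -> bytes:
        req = urllib.request.Request(
            url, headers={"Range": f"bytes={offset}-{offset + length - 1}"}
        )
        with urllib.request.urlopen(req) as resp:
            return resp.read()
    return read_range


# Local demonstration with fake bytes shaped like a Parquet file.
fake_footer = b"F" * 20
fake_file = b"PAR1" + b"x" * 100 + fake_footer + struct.pack("<I", 20) + b"PAR1"
offset, length = footer_range(len(fake_file), lambda o, n: fake_file[o:o + n])
print(offset, length)
```

The footer bytes themselves are Thrift-encoded, so a Parquet library is still needed to turn them into column names; for example, `pyarrow.parquet.read_schema` reads the schema without reading the row data.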

What I have:

- a SAS key, which I can "modify", but as I mentioned, I think I already have the required permissions

- the container name

- the account name for SAS auth
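These three pieces are what the "link + SAS" URL that works in Chrome is made of. A minimal sketch, assuming the usual `https://<account>.blob.core.windows.net/<container>/<blob>?<sas>` layout; the function name and the example values are placeholders, not my real account.

```python
from urllib.parse import quote


def build_blob_url(account: str, container: str, blob_path: str, sas: str) -> str:
    """Compose a blob URL with the SAS token appended (hypothetical helper)."""
    # Percent-encode the blob path but keep "/" so virtual folders survive.
    path = quote(blob_path, safe="/")
    return (
        f"https://{account}.blob.core.windows.net/"
        f"{container}/{path}?{sas.lstrip('?')}"
    )


# Placeholder values for illustration only.
url = build_blob_url("myaccount", "mycontainer", "folder/data file.parquet",
                     "?ss=bfqt&srt=sco&sp=rl")
print(url)
```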

What I want:

- the attributes from Azure, aggregated by filename (like the FME basename in a new column).

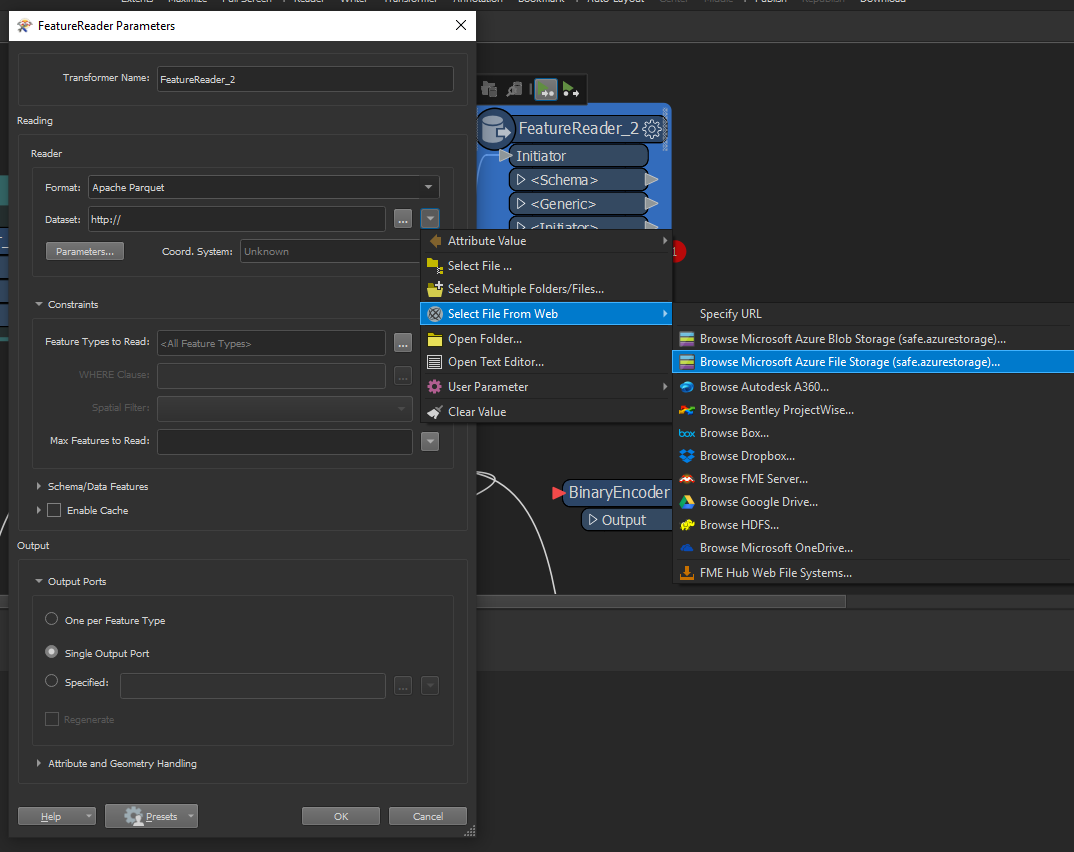

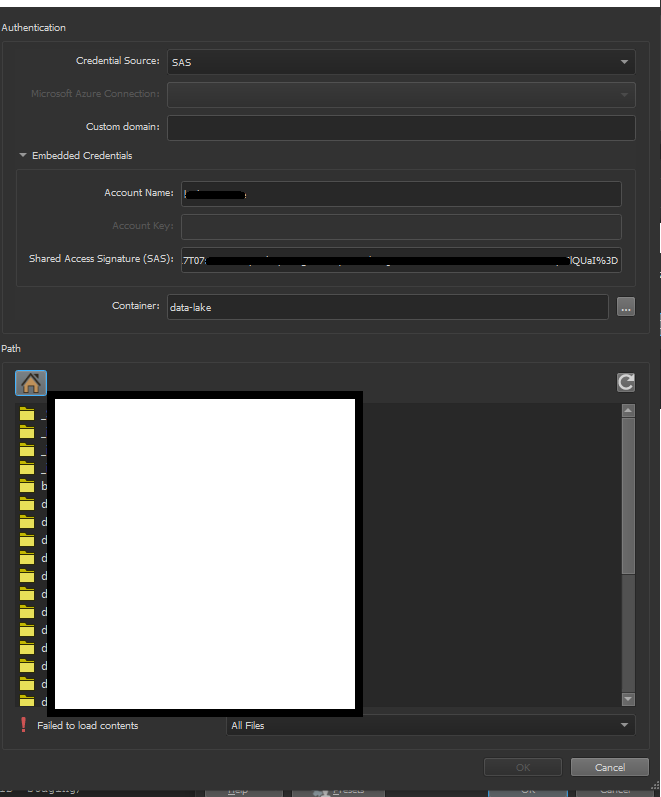

After configuration I see a "tree" with folders:

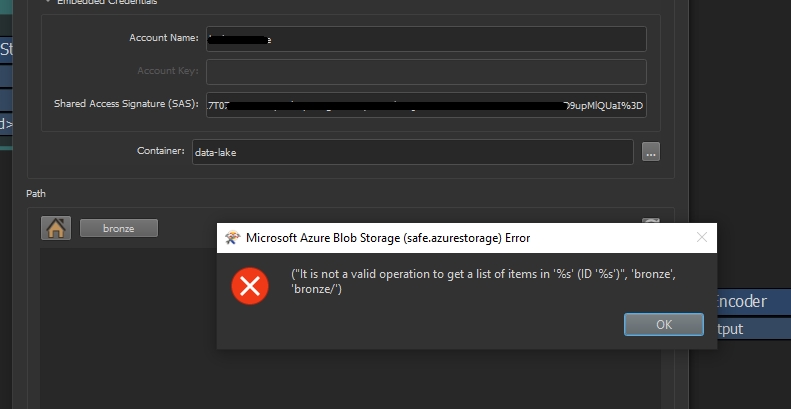

But if I try to open the folder I need, I get an error like this:

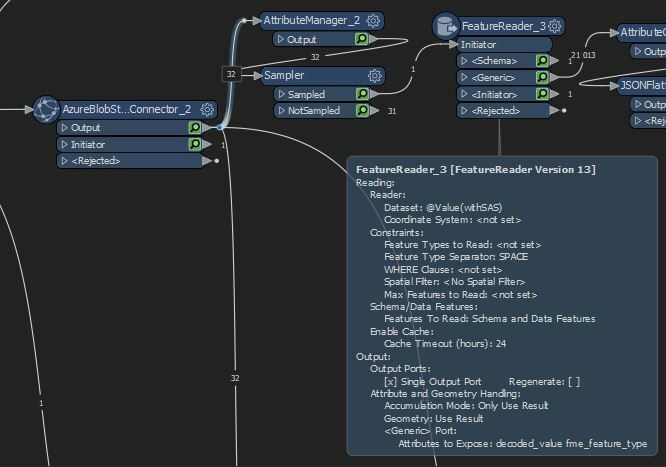

Question: could the problem be with the way I set this up?

The AzureBlob Connector gives me the link, and then in the reader I add that Azure Blob link plus the SAS key. If I understand the logic correctly, I don't download the files to my local drive; they are read dynamically.