Hi,

I’m attempting to run a workspace on FME Flow Hosted from within another workspace with an HTTPCaller using a webhook URL.

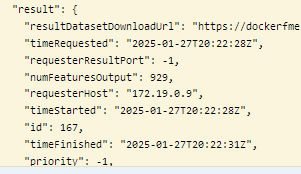

The child workspace will eventually return a URL link to a zip file as it uses the DataDownload service.

The child workspace can take more than 5 minutes to run, as the data can be quite large.

My issue is that I want to run the job synchronously so that I can retrieve the URL in the parent workspace and send it in an email. However, the HTTPCaller always times out if the child workspace takes longer than 300s to run, even if I set the timeout values to anything over 300s or to 0 (wait indefinitely). The call succeeds if the child workspace runs quickly (<300s).

This is a problem as most instances will take longer than 300s.

I don’t understand why it times out if it goes over 300s even if I tell it to wait longer.
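
One way to narrow this down might be to time the same request outside FME, to see whether the 300s cut-off comes from the HTTPCaller itself or from something sitting between the two workspaces. Below is a minimal Python sketch of that idea, not something I have confirmed against my setup. The host, repository, workspace and token are placeholders, and the query parameters (`opt_servicemode`, `opt_responseformat`, `opt_showresult`, `token`) are my assumption of what the generated webhook URL contains. `build_webhook_url` and `timed_call` are hypothetical helper names.

```python
import json
import time
import urllib.parse
import urllib.request


def build_webhook_url(host, repository, workspace, token):
    """Build a Data Download webhook URL asking for a synchronous JSON reply.

    Assumption: the parameter names mirror those in the webhook URL that
    FME Flow generates; check them against your own URL.
    """
    query = urllib.parse.urlencode({
        "opt_servicemode": "sync",
        "opt_responseformat": "json",
        "opt_showresult": "false",
        "token": token,
    })
    path = urllib.parse.quote(f"/fmedatadownload/{repository}/{workspace}")
    return f"https://{host}{path}?{query}"


def timed_call(url, timeout_seconds=900, opener=urllib.request.urlopen):
    """Call the URL once and report the outcome, elapsed seconds and JSON body."""
    start = time.monotonic()
    try:
        with opener(url, timeout=timeout_seconds) as response:
            body = json.loads(response.read().decode("utf-8"))
        outcome = "ok"
    except OSError as exc:  # covers URLError, HTTPError and socket timeouts
        body, outcome = None, f"failed: {exc}"
    elapsed = time.monotonic() - start
    return outcome, elapsed, body


if __name__ == "__main__":
    # Placeholder values; replace with your own host, repository and token.
    url = build_webhook_url("example.fmecloud.com", "MyRepo", "child.fmw", "TOKEN")
    outcome, elapsed, body = timed_call(url, timeout_seconds=900)
    print(outcome, round(elapsed), body)
```

If this also fails at roughly 300s despite the 900s client timeout, the limit is presumably being applied somewhere other than the HTTPCaller.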

Is this a bug? If so, is there a workaround, or is a fix planned?

Thanks