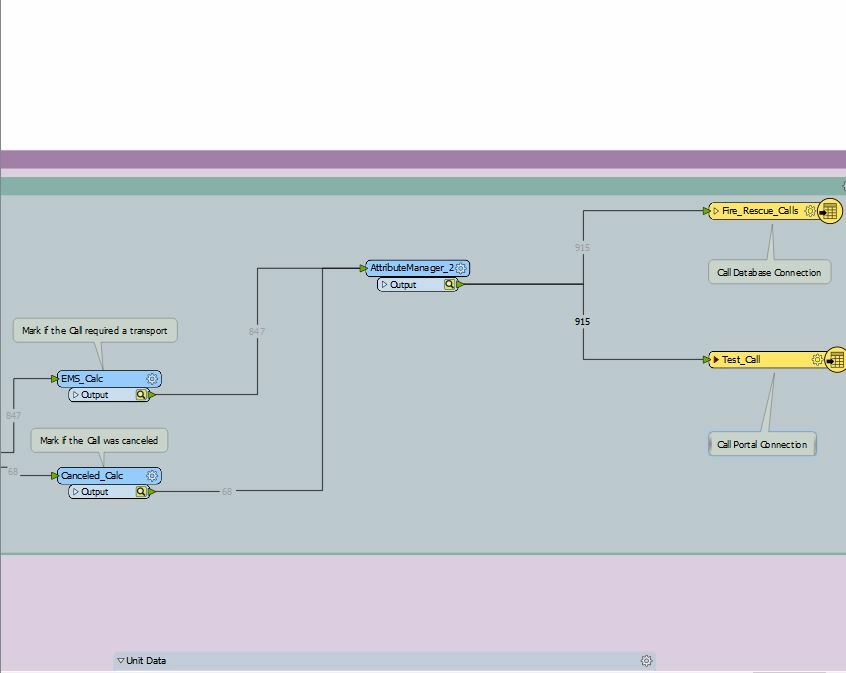

I am designing a workflow that will streamline sending our 911 data to ESRI ArcPortal as well as to a local geodatabase. The input comes from a SQL Server database and holds apparatus information for each call. The workflow runs it through some calculations for metrics such as response time and time on call. I've gotten to the point where the data splits into two streams: one for apparatus data, and another for unique call data, which is compiled by merging the apparatus information and aggregating it by Call Report number. Everything works fine up to this point.
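To make the two streams concrete, here is a minimal Python sketch of the per-apparatus calculations and the aggregation by Call Report number. The field names (`call_number`, `unit`, `dispatched`, `on_scene`, `cleared`) and the metric definitions (response time = on scene minus dispatched, time on call = cleared minus dispatched) are hypothetical assumptions, not the actual SQL Server schema or the real workflow's formulas.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ApparatusRecord:
    call_number: str      # hypothetical field: the Call Report number
    unit: str             # hypothetical field: apparatus identifier
    dispatched: datetime  # hypothetical timestamp fields
    on_scene: datetime
    cleared: datetime


def apparatus_metrics(rec: ApparatusRecord) -> dict:
    """Apparatus stream: per-unit metrics in seconds (assumed definitions)."""
    return {
        "call_number": rec.call_number,
        "unit": rec.unit,
        "response_s": (rec.on_scene - rec.dispatched).total_seconds(),
        "on_call_s": (rec.cleared - rec.dispatched).total_seconds(),
    }


def aggregate_by_call(records: list[ApparatusRecord]) -> dict[str, dict]:
    """Call stream: one summary row per Call Report number."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for rec in records:
        groups[rec.call_number].append(apparatus_metrics(rec))
    return {
        call: {
            "call_number": call,
            "unit_count": len(rows),
            # first-arriving unit's response time
            "first_response_s": min(r["response_s"] for r in rows),
            # longest time any single unit spent on the call
            "max_on_call_s": max(r["on_call_s"] for r in rows),
        }
        for call, rows in groups.items()
    }


# Example with made-up data: two units on call C1, one unit on call C2.
t0 = datetime(2024, 1, 1, 12, 0)
records = [
    ApparatusRecord("C1", "E1", t0, t0 + timedelta(minutes=5), t0 + timedelta(minutes=30)),
    ApparatusRecord("C1", "L1", t0 + timedelta(minutes=1), t0 + timedelta(minutes=8), t0 + timedelta(minutes=45)),
    ApparatusRecord("C2", "M1", t0, t0 + timedelta(minutes=4), t0 + timedelta(minutes=20)),
]
calls = aggregate_by_call(records)
```

Which aggregates to keep per call (first arrival, maximum, sum, and so on) depends on how the call-level layer is meant to be reported on; the ones above are only illustrative.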

The initial run yielded over 600,000 features to be written. Since no dataset of call information existed beforehand, this was expected. This initial dataset was then uploaded to our Portal service, since the Portal writer appears to support only insert, update, or delete operations. I run the script daily to pull the previous day's call information, and when it reaches the step where the data is pushed to our Portal, I get the error "Input feature does not have a global id". That is understandable, since the new data would not have one assigned to it.
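One common pattern for this kind of error is to look up each incoming call against the features already in the Portal layer. Rows that match get the existing GlobalID attached and are sent as updates; rows that don't match are sent as inserts. The Python sketch below shows only that routing logic. It assumes that inserts can be sent without a GlobalID while updates must carry the GlobalID of the feature they target, which is what the error message suggests but is not confirmed here. The `existing_globalids` lookup (Call Report number to GlobalID, e.g. built from a prior read of the Portal layer), the `call_number` key, and the `GlobalID` field name are hypothetical.

```python
def route_edits(incoming: list[dict], existing_globalids: dict[str, str],
                key: str = "call_number") -> tuple[list[dict], list[dict]]:
    """Split incoming rows into inserts and updates.

    existing_globalids: hypothetical mapping of key value -> GlobalID,
    assumed to come from reading the existing Portal layer first.
    """
    inserts: list[dict] = []
    updates: list[dict] = []
    for row in incoming:
        gid = existing_globalids.get(row[key])
        if gid is None:
            # New call: send as an insert and (assumption) let the
            # service assign the GlobalID.
            inserts.append(dict(row))
        else:
            # Existing call: carry its GlobalID so the update can
            # target the right feature.
            updated = dict(row)
            updated["GlobalID"] = gid
            updates.append(updated)
    return inserts, updates


# Example with placeholder values: C1 already exists in Portal, C3 is new.
existing = {"C1": "{00000000-0000-0000-0000-000000000001}"}
incoming = [
    {"call_number": "C1", "unit_count": 3},
    {"call_number": "C3", "unit_count": 1},
]
inserts, updates = route_edits(incoming, existing)
```

If the daily pull only ever contains calls that are not yet in Portal, the update branch would stay empty and every row would go through as an insert.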

In short, I am looking for help getting the data posted to our Portal, and I'm not sure what the best approach to tackle this is.