I am trying to update approximately 10 attribute fields in a point layer in ArcGIS Online (AGOL) using FME. When I run the script it keeps failing, and the warning and error messages don't make it clear to me where the problem is. Below is what I get once the script has failed; the ones in red are the error messages (the last two bullet points):

- Feature Caching is ON

- The workspace may run slower because features are being output and recorded on all output ports regardless of whether or not there is a connection.

- ArcGIS Online Feature Service Reader: Reading from from feature service 'https://services1.arcgis.com/9yy6msODkIBzkUXU/arcgis/rest/services/HII_2020_2022/FeatureServer' using filters defined in 'HII_2020_2022'

- ArcGIS Online Feature Service Writer: Feature Service 'HII_2020_2022' does not support geometry updates. If features contain geometry, the geometry will be ignored

- ArcGIS Online Feature Service Writer: Attribute 'OBJECTID' on feature type 'HII_2020_2022' is marked as read-only in the corresponding layer/table. Attribute values will be ignored

- ArcGIS Online Feature Service Writer: 3000 features successfully written to 'HII_2020_2022', but the server rejected the 1000 features in the last request due to errors. See warnings above. Ending translation

- ARCGISONLINEFEATURES writer: An error has occurred. Check the logfile above for details

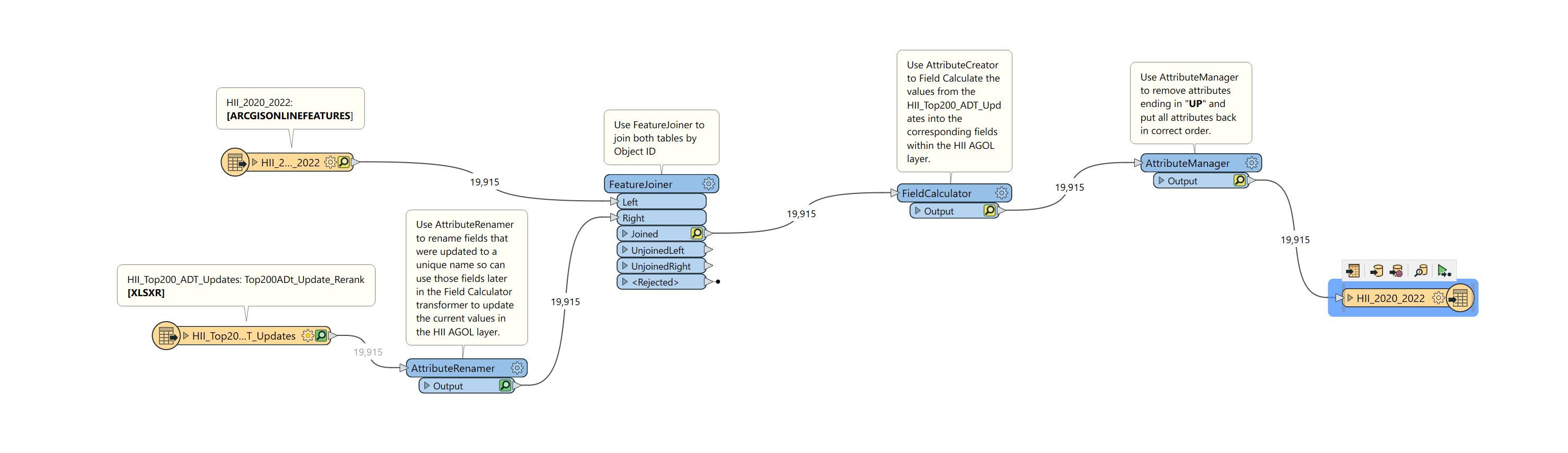

I’m new to FME and have never tried to use it to update an AGOL layer. This is what my FME script looks like:

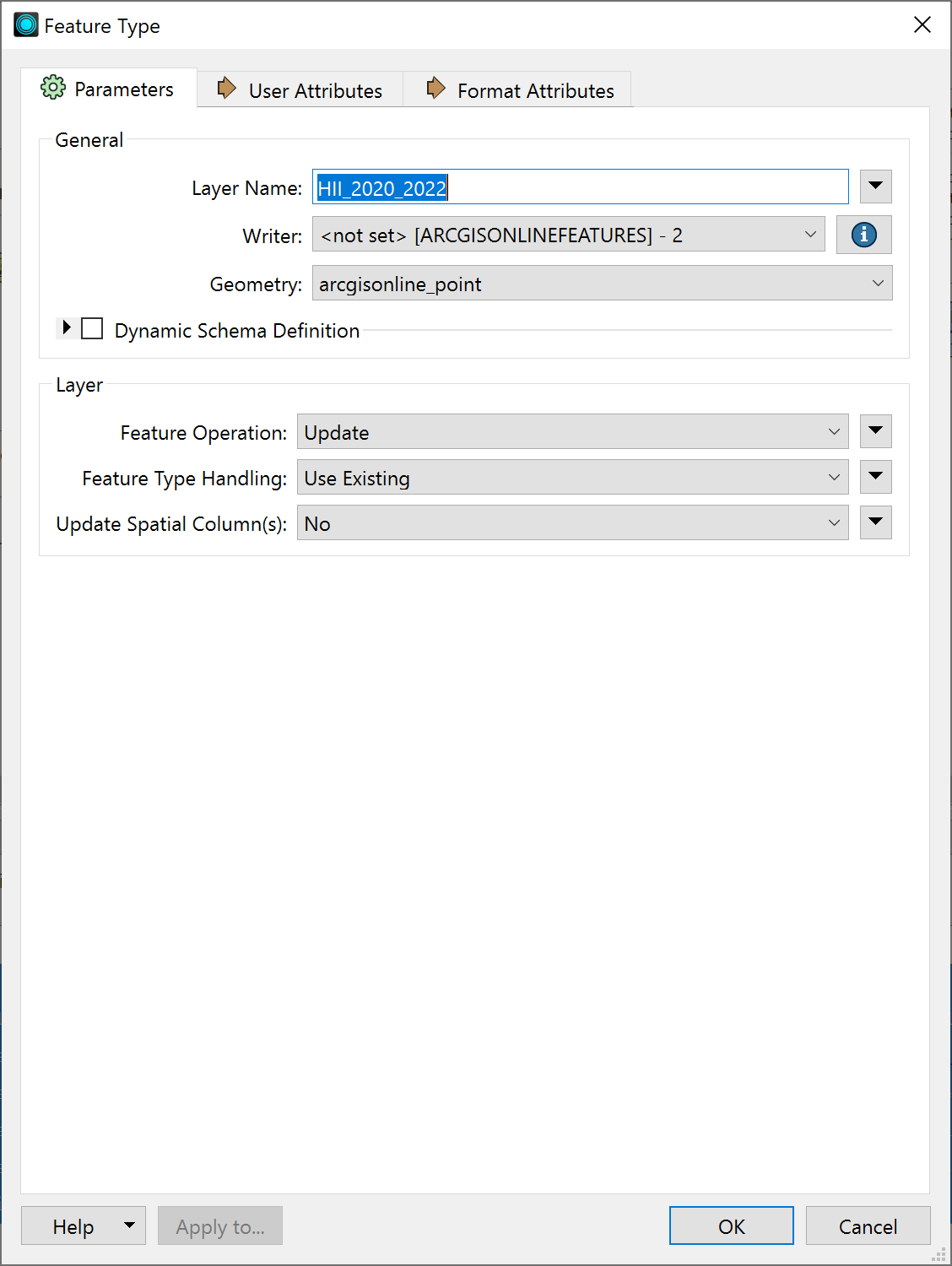

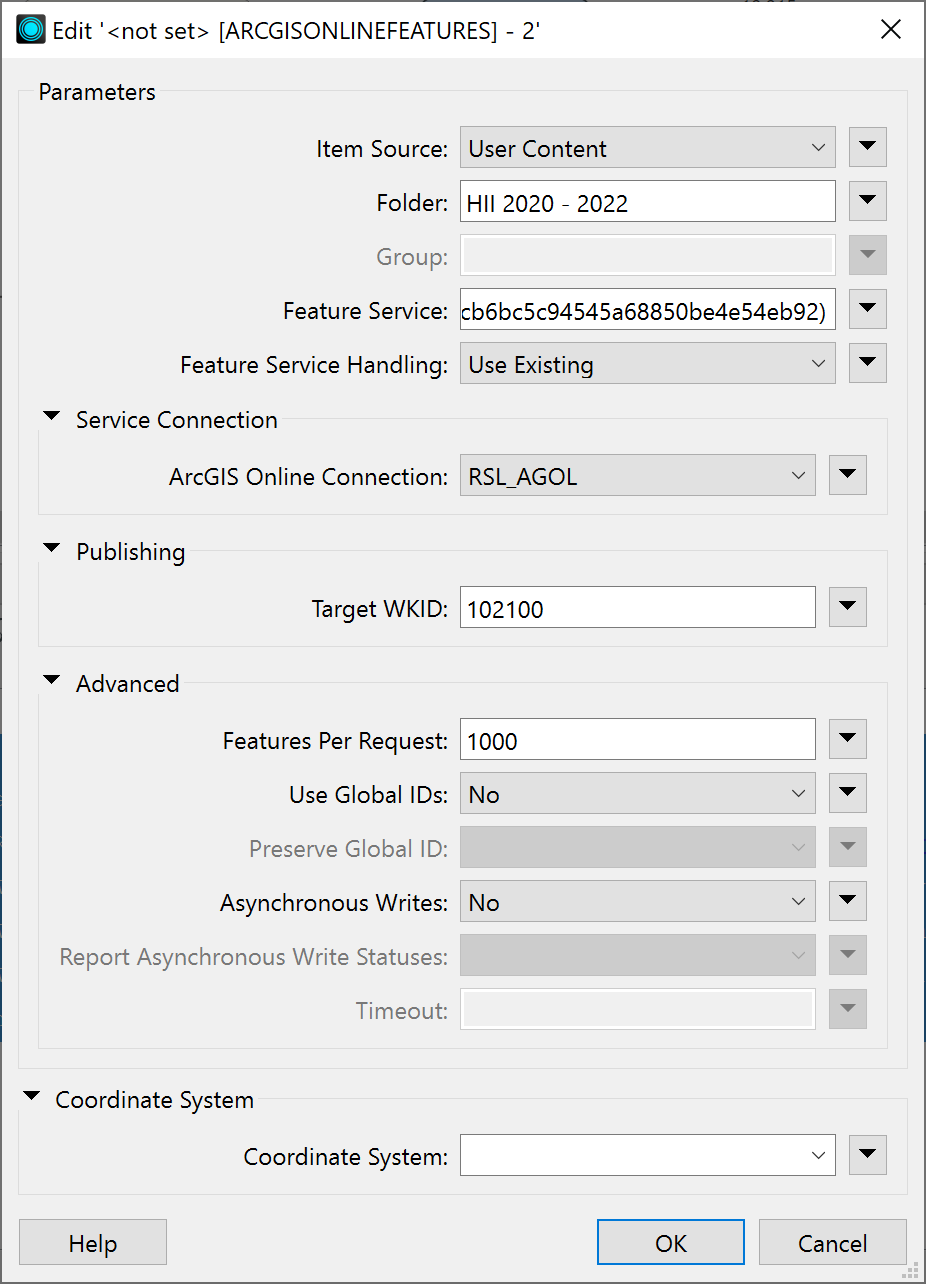

It basically reads in the original AGOL layer and an Excel sheet that has the updated information. I then merge the two and carry the updated values from the Excel sheet over onto the AGOL features. From there I clean things up and write the updates back to my AGOL layer. Below is what my AGOL writer currently looks like:

I have made sure my AGOL layer is editable by changing that layer's settings in AGOL. I don't understand FME's error messages well enough to act on them, since they seem rather vague, and I haven't found much about them online either. Any help would be greatly appreciated!
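For illustration only (this is not the actual FME workspace), the merge step described above amounts to something like the Python sketch below. It assumes both inputs share a key field, called `site_id` here as a purely hypothetical name, and that `update_fields` lists the fields being updated. Each merged feature keeps its original `OBJECTID`, because in the ArcGIS REST API's applyEdits operation an update is matched to an existing feature by its object ID. Excel rows that match no feature are reported rather than silently dropped.

```python
from typing import Any

Record = dict[str, Any]


def merge_updates(
    agol_features: list[Record],
    excel_rows: list[Record],
    key: str,
    update_fields: list[str],
) -> tuple[list[Record], list[Any]]:
    """Copy updated values from Excel rows onto matching AGOL features.

    `key` is a hypothetical ID field present in both inputs. Only the
    fields in `update_fields` are overwritten; OBJECTID is never touched
    because it identifies which existing feature gets updated.
    Returns (merged features, Excel keys with no matching feature).
    """
    if "OBJECTID" in update_fields:
        raise ValueError("OBJECTID is read-only and must not be overwritten")

    # Index Excel rows by key; if a key appears twice, the last row wins.
    updates_by_key = {row[key]: row for row in excel_rows}

    merged: list[Record] = []
    matched_keys = set()
    for feature in agol_features:
        row = updates_by_key.get(feature[key])
        if row is None:
            continue  # no update for this feature, so it is not sent
        matched_keys.add(feature[key])
        updated = dict(feature)  # copy so the input feature is unchanged
        for field in update_fields:
            updated[field] = row[field]
        merged.append(updated)

    unmatched = [k for k in updates_by_key if k not in matched_keys]
    return merged, unmatched


if __name__ == "__main__":
    features = [
        {"OBJECTID": 1, "site_id": "A", "status": "old"},
        {"OBJECTID": 2, "site_id": "B", "status": "old"},
    ]
    rows = [
        {"site_id": "A", "status": "new"},
        {"site_id": "C", "status": "new"},
    ]
    merged, unmatched = merge_updates(features, rows, "site_id", ["status"])
    print(merged)     # [{'OBJECTID': 1, 'site_id': 'A', 'status': 'new'}]
    print(unmatched)  # ['C']
```

Returning the unmatched keys separately makes it easy to spot Excel rows whose IDs don't line up with anything in the layer, which would otherwise disappear without a trace.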

Bec