Hi FME folks,

My first post so go easy on me please :)

We use FME at work for various geo-spatial projects and I've been asked to look into it's C.Vision capabilities. Previously I had a crack at anaconda and some GPU accelerated approaches but the amount of back-end stuff necessary to get started go a little too involved.

I've gone through your video tute @dmitribagh as well as all the workspaces provided and with some solid hrs. of practice I managed to get all of them working. I am somewhat of an an FME newb with less then 50hrs. of usage at a rough guess.

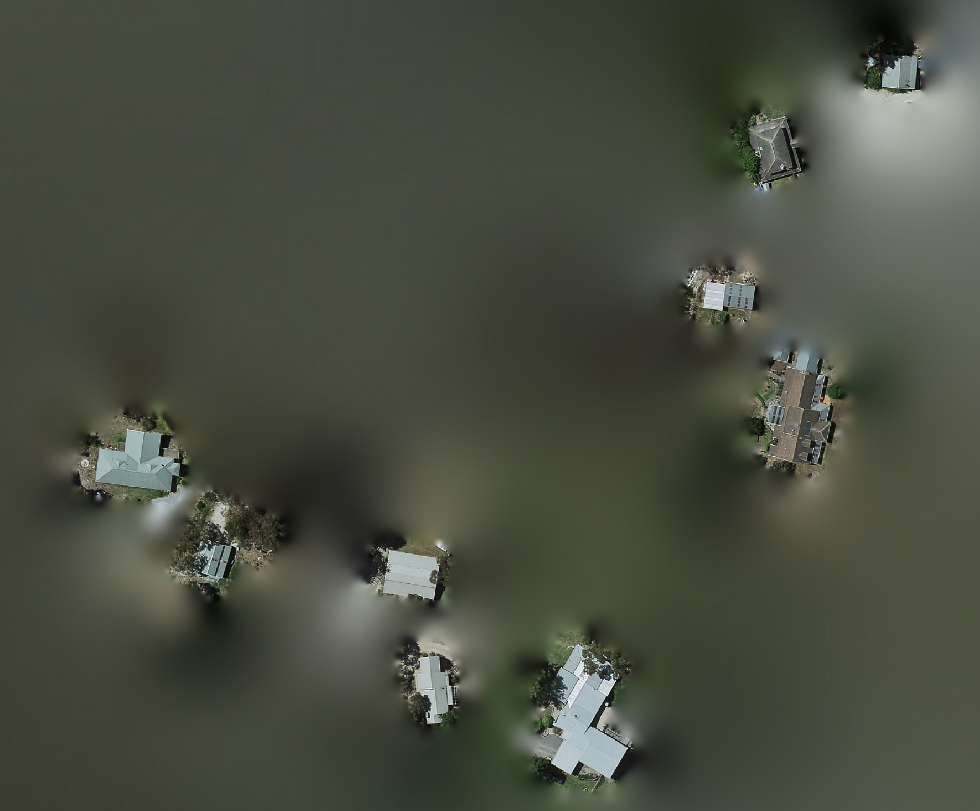

I'm trying to build a model for recognizing houses (roofs) in aerial photography. I've managed to create auto annotations using some footprint data which we have.

Idea being that I need a "clean" image of a single house rather than a house surrounded by other houses, and annotations being rectangles sort of forces you to improvise.

I have a bunch of negatives of empty lots which I manually created ...

... and these are some positives that were auto generated with FME for my initial anaconda tests.

My issue is that I can get the entire process to work, generate the VEC , background TXT and annotations BUT when I output the XML / knowledge files they are always 17kb in size and all have the same content no matter which model I hook up / train.

So I know I'm doing something wrong somewhere and hopefully someone here can point me in the right direction.

Cheers.