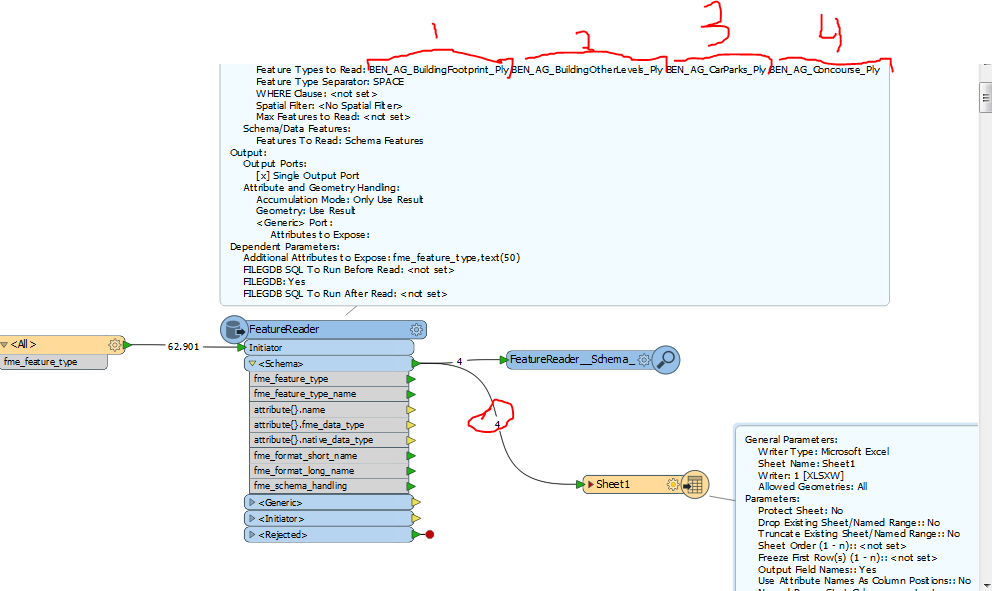

I can build a list of the layers in a geodatabase, but if some of the layers are empty, I don't know how to read the names of those layers and export them to a writer.

This post is closed to further activity.

It may be an old question, an answered question, an implemented idea, or a notification-only post.

Please check post dates before relying on any information in a question or answer.

For follow-up or related questions, please post a new question or idea.

If there is a genuine update to be made, please contact us and request that the post is reopened.