Hi all, I'm having an issue writing dynamically to SDE with large datasets. I'm unsure of the exact threshold, but anything above roughly 5,000 features seems to cause a failure.

My flow reads a dataset name from an SQL table and matches it to a dataset that was downloaded by a previous flow. It then checks whether that dataset has been updated and, if so, drops and recreates the feature class using a name given in the SQL table.

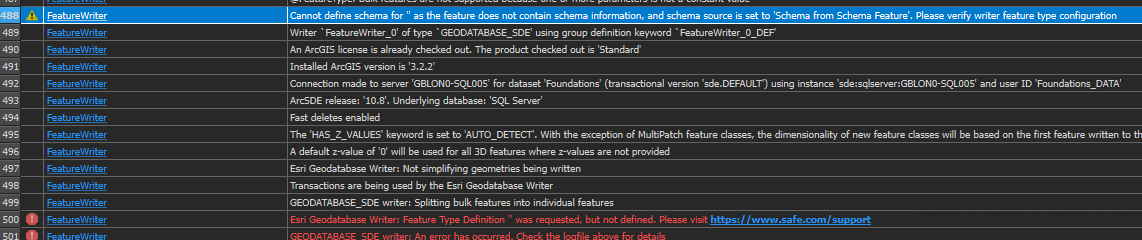

When I run the flow in batch using a WorkspaceRunner, about half of my datasets update successfully and about half fail to update in SDE. I have looked into this in some depth, and the only pattern I can see is that the failing datasets are the larger ones. The error message I am getting is below.

As a test, I added a Sampler just before the FeatureWriter to reduce the feature count, and the flow then works correctly, which supports this finding. What could be causing this behaviour? Has anyone else experienced something similar? Any suggestions for settings I could change would also be appreciated!

FME version: 2023.2.1 (Build 23774)