So I learned that *.fts files are part of the ongoing tuning efforts in FME and are written to FMETable subfolder in FME_TEMP directory.

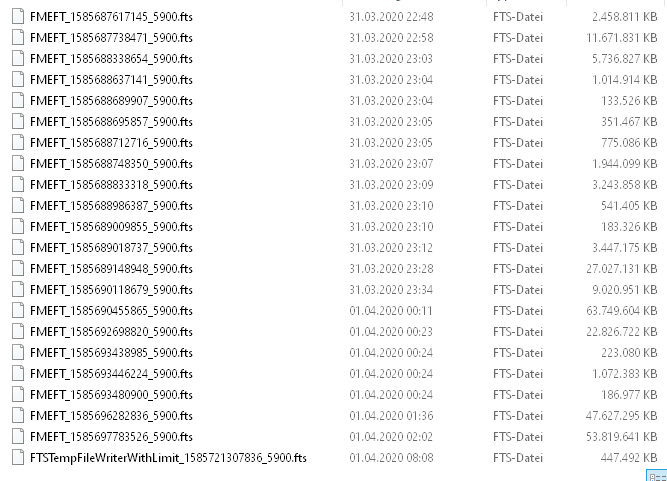

I was literally shocked yesterday when my FME_TEMP drive (256Gb SSD) suddenly ran full because FMETable seemingly "exploded" without limit from reading and processing 5Gb of ffs files.

My impression is that this is a first in FME 2020 (maybe 2019 too ?) as the FME processing active at the time was little more than reading 5Gb (190 different ffs files) and writing them out as a single set of ffs. This process up to now never was in any way critical but now is a blocking point in my environment.

Is it in any way explainable why 60 million features consume 250Gb+ as *.fts files - when they only need 5Gb as ffs files ?

I expect not more than 40-50Gb in the FileGeoDB which will receive this whole dataset !

B.T.W.: Memory use was next to nothing during this whole adventure ...

I came across excessive disk space use when FME writes raster data to disk in the internal ffs (+raster) format.

With all patience and understanding but this behaviour is blowing the roof off my 16-years FME experience ...

Anybody with similar experiences out there ?