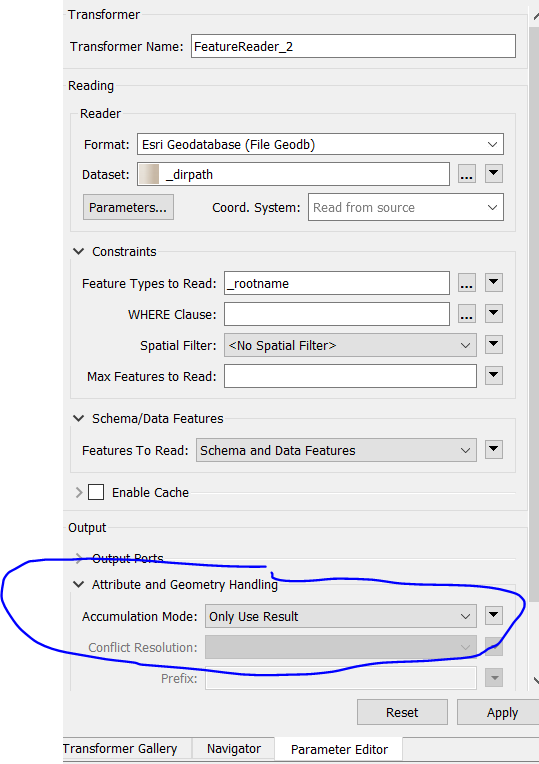

I have set up a file geodatabase reader (ArcObjects) and pointed it at a single feature class in a file geodatabase. The feature class has 50 features, but when I run the process, the reader reads 100. I deleted one feature from the feature class, and ArcCatalog now shows 49 features, yet the reader reads 99.

The behavior is not consistent. Sometimes, if I change the settings of another file geodatabase reader in the workspace (one pointed at a different file geodatabase), the problematic reader reads only the expected 50 features. The inputs are dynamic: the dataset is passed to the reader from a scripted parameter. Why is this happening, and is there an easy fix?

I am using FME through the ArcGIS Data Interoperability extension, version 10.5, which runs FME 2017.0.0.1 (20170316 - Build 17271 - WIN32). Upgrading is not an option for me at this time.