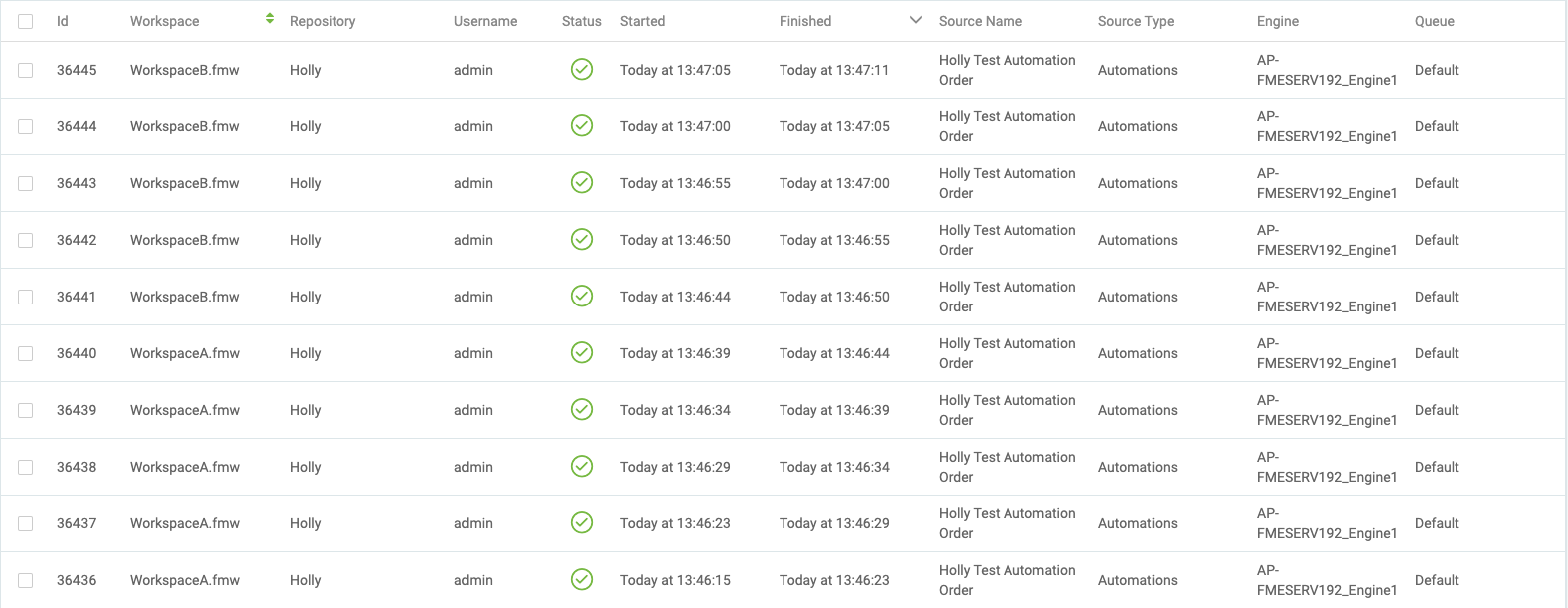

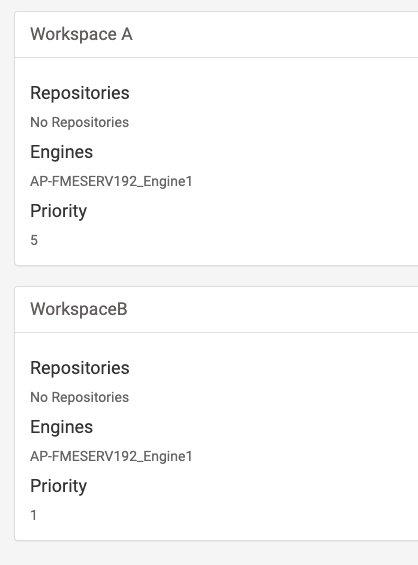

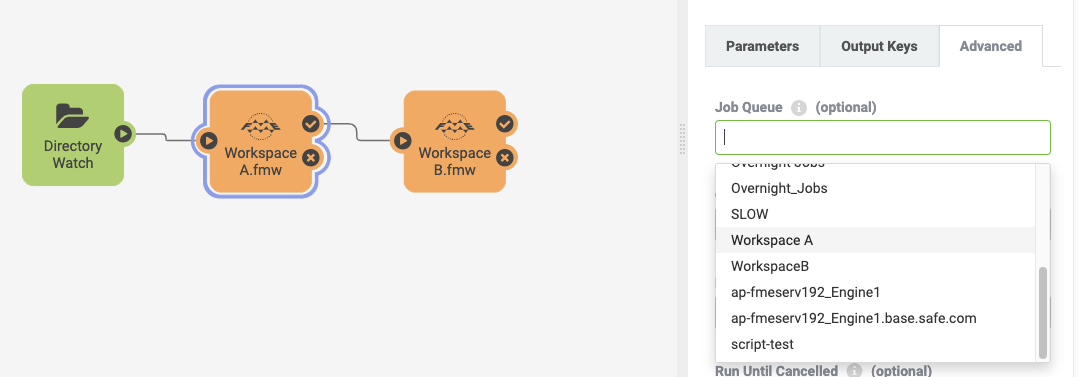

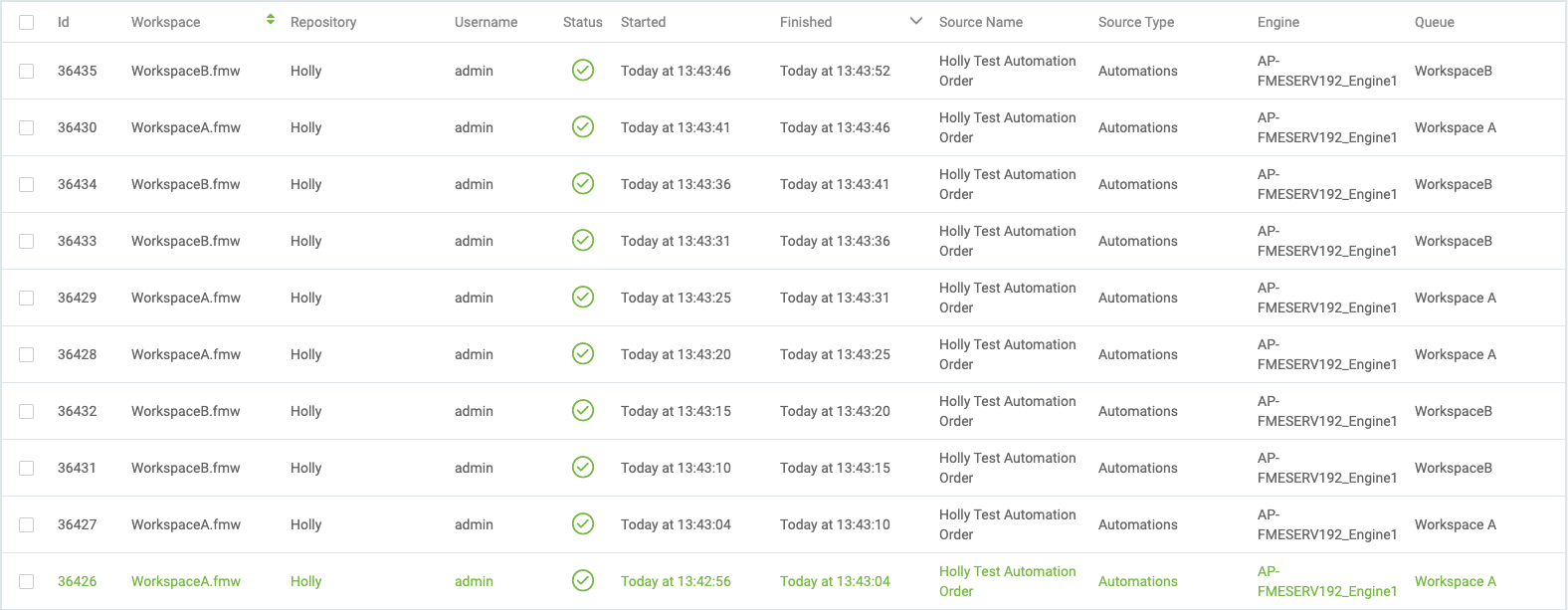

I have an automation workflow (FME Server 2019) built from a number of workspaces that process an input file. The automation is triggered by a file landing in a directory (directory watch). The data passes through the workspaces and is loaded into the database at the end if it follows the happy path; otherwise someone is notified of the failure. This works great for one file end-to-end. But when multiple files land in the directory, the first file runs through workspace A, then the second file runs through workspace A, and so on, rather than the first file running through workspace A, then workspace B, and so on until it has completed every workspace before the second file starts. Is there a way to enforce this dependency?

I've tried adding a merge action, but the automation doesn't accept it: everything is in sequence, so there are no parallel streams to merge, and I'm asking it to continue regardless of whether the workspace action passes or fails.

Is this possible in the latest version?

I need each file to be processed end-to-end because some of the workspaces load data into a staging database, and later workspaces expect 'their' data to be in the staging database, not the data of a different file.
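To make the ordering concrete, here is a minimal Python sketch of what I see versus what I want. This is only an illustration, not FME code: the function names, file names and the two-workspace chain are made up, and the "observed" order is a simplification of how the jobs appear to interleave.

```python
def run_per_stage(files, stages):
    """Observed order (simplified): every file passes through one
    workspace before any file moves on to the next workspace."""
    return [(f, s) for s in stages for f in files]


def run_per_file(files, stages):
    """Desired order: each file completes every workspace before
    the next file starts."""
    return [(f, s) for f in files for s in stages]


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    files = ["file1", "file2"]
    stages = ["A", "B"]
    print("observed:", run_per_stage(files, stages))
    print("desired: ", run_per_file(files, stages))
```

In the first ordering, file2's workspace A overwrites the staging data before file1's workspace B has read it, which is exactly the problem.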

Any help is much appreciated.

Thanks

Mary