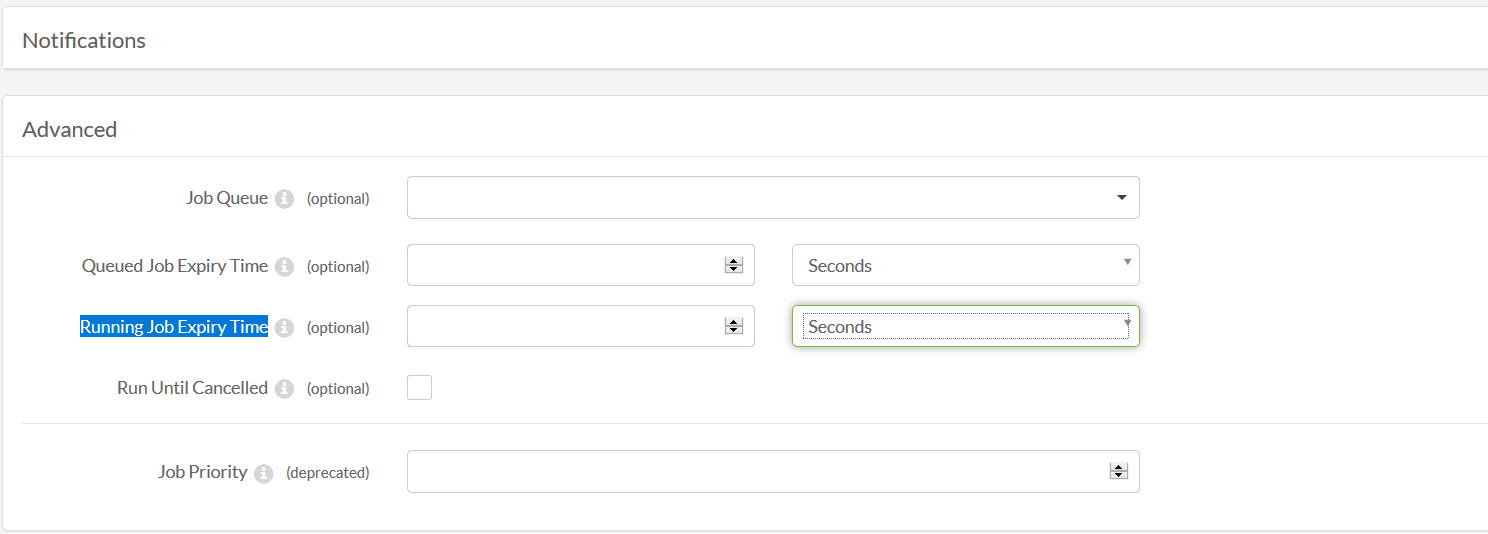

Is it possible to set up an FME scheduler so that it runs at a given interval, but only if the previous job submitted by that scheduler has already finished?

For example, let's say we have a scheduler set up to run once every hour, and for some reason a job takes more than 1 hour. I would like the next job to run as soon as the previous one has finished, and probably shift the subsequent submission times accordingly.
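
To make the desired behaviour concrete, here is a minimal sketch in plain Python. It does not use any FME API; `simulate_start_times`, `durations`, and `interval` are hypothetical names used only for illustration, and it assumes that "shifting" means the interval is counted from the most recent actual start.

```python
def simulate_start_times(durations, interval):
    """Return the start time of each job, in minutes from time 0.

    Hypothetical model of the behaviour asked about, not FME code:
    a job starts `interval` minutes after the previous start, unless the
    previous job is still running, in which case it starts when that job
    finishes and later start times shift accordingly.
    """
    starts = []
    next_start = 0
    for duration in durations:
        starts.append(next_start)
        finish = next_start + duration
        # Never start before the previous job has finished.
        next_start = max(next_start + interval, finish)
    return starts


# Hourly schedule; the second job overruns and takes 90 minutes.
print(simulate_start_times([30, 90, 20], 60))  # [0, 60, 150]
```

In this model the third job starts at minute 150 instead of 120, and any later job would be scheduled relative to that shifted start.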