Hello Esteemed Community,

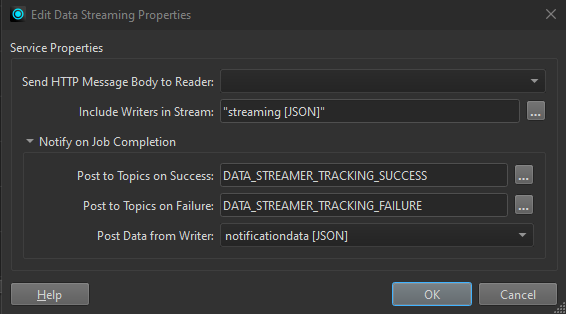

I have been banging my head against this issue. I have tried the help documentation, this forum, and the FME Academy training to find an answer, and I am still stumped. I am struggling to access, inside my automation, the data that I submit with a topic through the data streaming service, using the FME Flow Topic Trigger. I have successfully used the FMEFlowNotifier transformer, just to see if I could get that working, but it does not fit with what I am trying to do, which is to post to topics on success or failure at the end.

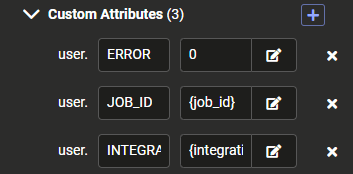

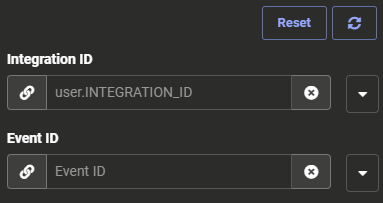

This is what the service properties look like:

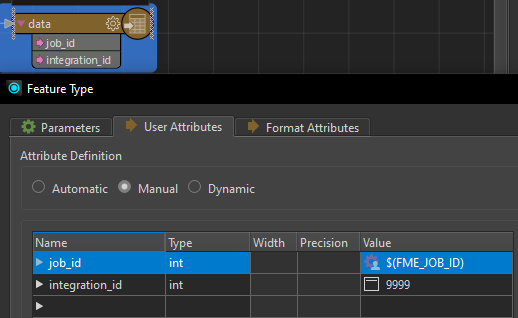

And the JSON writer:

I have tried accessing the properties using both {job_id} and {data.job_id}, but the translation log shows that these are used as is instead of being replaced with the actual values.

One of the key issues I have is that I need to set the values to custom attributes, because the parameters I am using them for are of number type, and they won't let me type anything non-numeric.

I am fairly new to FME, and so I am sure I am either missing something obvious or going about this in the wrong way. Looking forward to hearing back from you veterans. :)

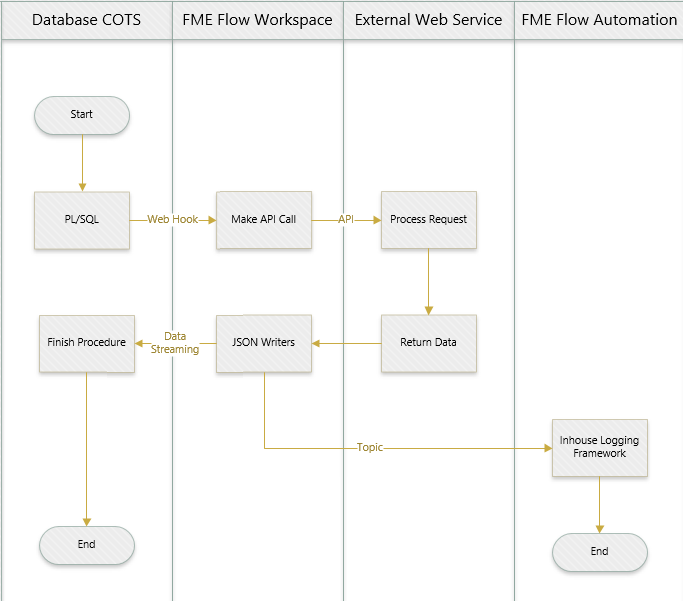

Oh, and this is a proof-of-concept workspace. I am trying to solve an issue where it seems you cannot use the data streaming service as part of an automation. My use case is that I want an application to call an automation in FME Flow with parameters and receive JSON output once it is done, but I don't think that is possible. So my workaround is to call a workspace using a webhook, have the data streaming service return the data, and then execute the rest of the automation based on its success or failure. Am I right or wrong that automations cannot respond directly to an application that initiated them through a webhook?
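For reference, the client side of that workaround (the application calling the workspace directly and reading back the JSON it streams) might look roughly like the Python sketch below. It is a minimal sketch under assumptions, not a confirmed recipe: it assumes the data streaming service URL pattern `/fmedatastreaming/<repository>/<workspace>`, published parameters passed as query-string values, and token authentication via an `Authorization: fmetoken token=...` header. The host, repository, workspace name, token, and the function names are hypothetical placeholders, so please check them against your FME Flow version.

```python
import json
import urllib.parse
import urllib.request


def build_streaming_request(host, repository, workspace, params, token):
    """Build (but do not send) a GET request to the data streaming service.

    ASSUMPTION: the /fmedatastreaming/<repository>/<workspace> URL pattern,
    published parameters as query-string values, and the
    'Authorization: fmetoken token=...' header format.
    """
    path = "/fmedatastreaming/{}/{}".format(
        urllib.parse.quote(repository), urllib.parse.quote(workspace)
    )
    # Published parameters (e.g. job_id) are URL-encoded into the query string.
    query = urllib.parse.urlencode(params)
    url = "{}{}?{}".format(host.rstrip("/"), path, query)
    return urllib.request.Request(
        url,
        headers={
            "Authorization": "fmetoken token=" + token,
            "Accept": "application/json",
        },
        method="GET",
    )


def call_workspace(request, timeout=60):
    """Send the request and decode the JSON body the workspace streams back."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to inspect the URL first, e.g. `call_workspace(build_streaming_request("https://fme.example.com", "Samples", "poc.fmw", {"job_id": 42}, "my-token"))`, where every argument is a hypothetical placeholder.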

Greetings,

Ken