Hello,

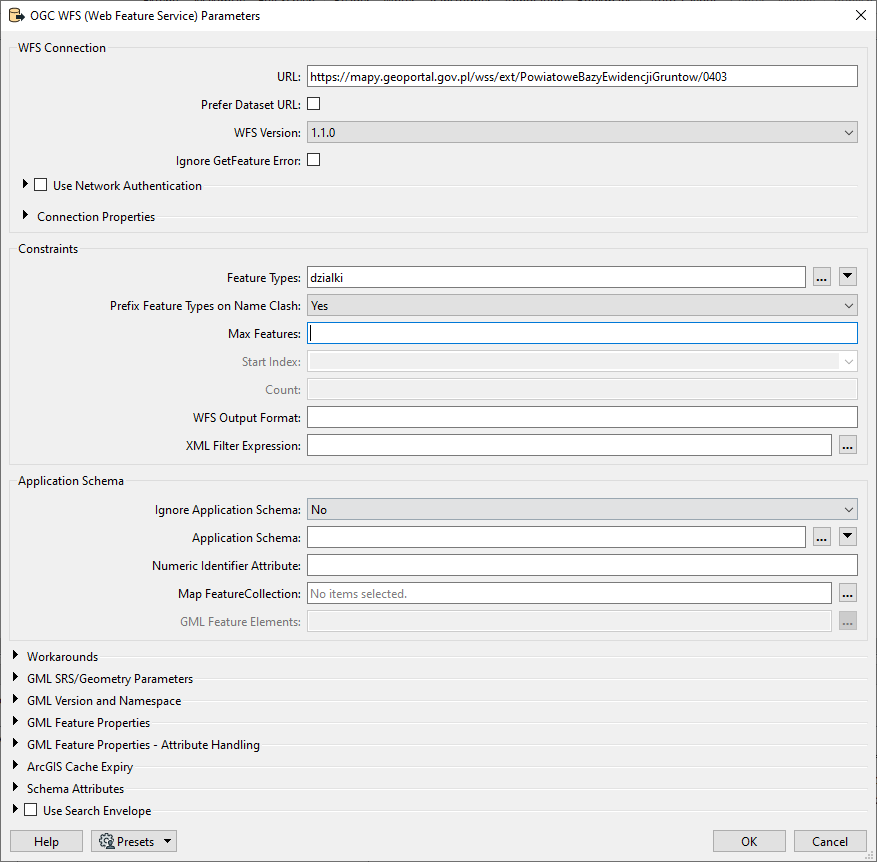

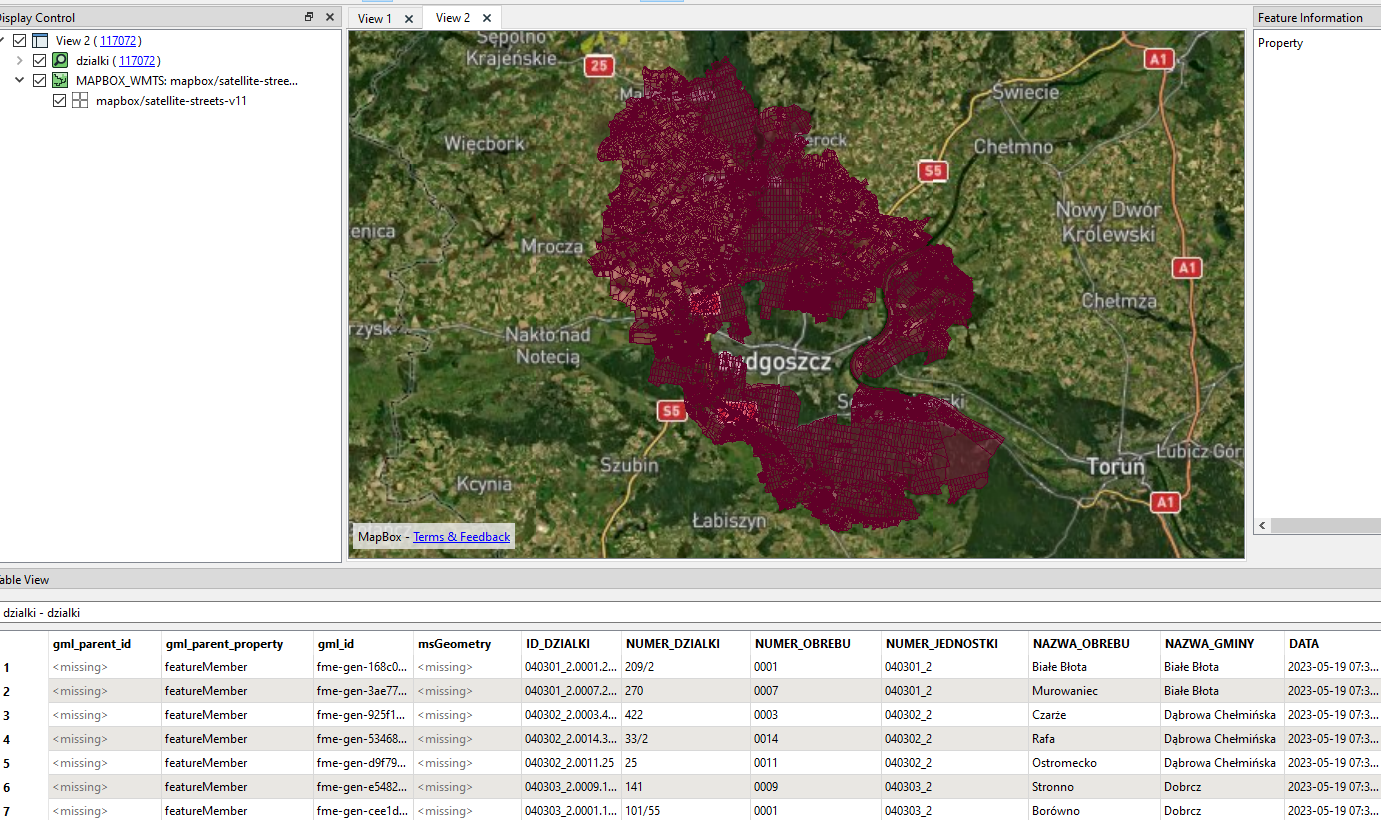

I need to download data from 300 WFS servers (I have the list of servers and can provide it in any format) for 2000 objects, using a 3 km buffer around each point, as shown in the images above.

Is it possible to load a list of server addresses into FME so that it checks the spatial extent of each server's data against the buffer polygons and then downloads only the data within that range?
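To make the intended logic concrete, here is a minimal sketch in plain Python rather than FME, so it illustrates the idea, not an FME workflow. For each server, it skips any point whose 3 km buffer does not overlap the server's extent. Otherwise it builds a WFS 2.0.0 `GetFeature` request restricted to the buffer's bounding box. Several assumptions apply: the points and server extents are all in the same metric projected CRS, and each server's extent has already been read (for example from its GetCapabilities document) and reprojected into that CRS. The `extent`, `type_name`, and example URLs are hypothetical placeholders, and UTM 33N (EPSG:32633) was picked arbitrarily.

```python
from urllib.parse import urlencode

# Assumption: points and server extents share one metric projected CRS,
# so a 3 km buffer is +/- 3000 coordinate units. EPSG:32633 is a hypothetical choice.
CRS = "urn:ogc:def:crs:EPSG::32633"
RADIUS_M = 3000.0


def buffer_bbox(x, y, radius=RADIUS_M):
    """Envelope (minx, miny, maxx, maxy) of a circular buffer around a point."""
    return (x - radius, y - radius, x + radius, y + radius)


def intersects(a, b):
    """True if two (minx, miny, maxx, maxy) boxes overlap or touch."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def getfeature_url(base_url, type_name, bbox, crs=CRS):
    """Build a WFS 2.0.0 GetFeature URL limited to a bounding box.

    Note: check the axis order the server expects for the given CRS.
    """
    params = {
        "service": "WFS",
        "version": "2.0.0",
        "request": "GetFeature",
        "typeNames": type_name,
        "bbox": ",".join(str(v) for v in bbox) + "," + crs,
    }
    return base_url + "?" + urlencode(params)


def plan_requests(servers, points):
    """Return (server_name, point_id, url) only where a buffer overlaps a server extent."""
    requests = []
    for srv in servers:
        for pid, x, y in points:
            box = buffer_bbox(x, y)
            if intersects(box, srv["extent"]):
                url = getfeature_url(srv["url"], srv["type_name"], box)
                requests.append((srv["name"], pid, url))
    return requests


# Hypothetical example data: one server and two points, one of them outside its extent.
SERVERS = [
    {
        "name": "example",
        "url": "https://example.com/wfs",
        "type_name": "ns:layer",
        "extent": (500000.0, 5500000.0, 600000.0, 5600000.0),
    },
]
POINTS = [("p1", 550000.0, 5550000.0), ("p2", 900000.0, 5550000.0)]

if __name__ == "__main__":
    for name, pid, url in plan_requests(SERVERS, POINTS):
        print(name, pid, url)
```

The square envelope is slightly larger than the circular buffer, so features returned near its corners would still need to be clipped to the actual 3 km circle afterwards. Only requests whose envelope overlaps a server's extent are generated, which avoids sending most of the 300 × 2000 possible queries.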