I'm working on a workflow that runs on my FME Cloud instance and is started by a REST call. The idea is to build a database-driven PDF creator that generates x PDF pages and bundles them into a zip file.

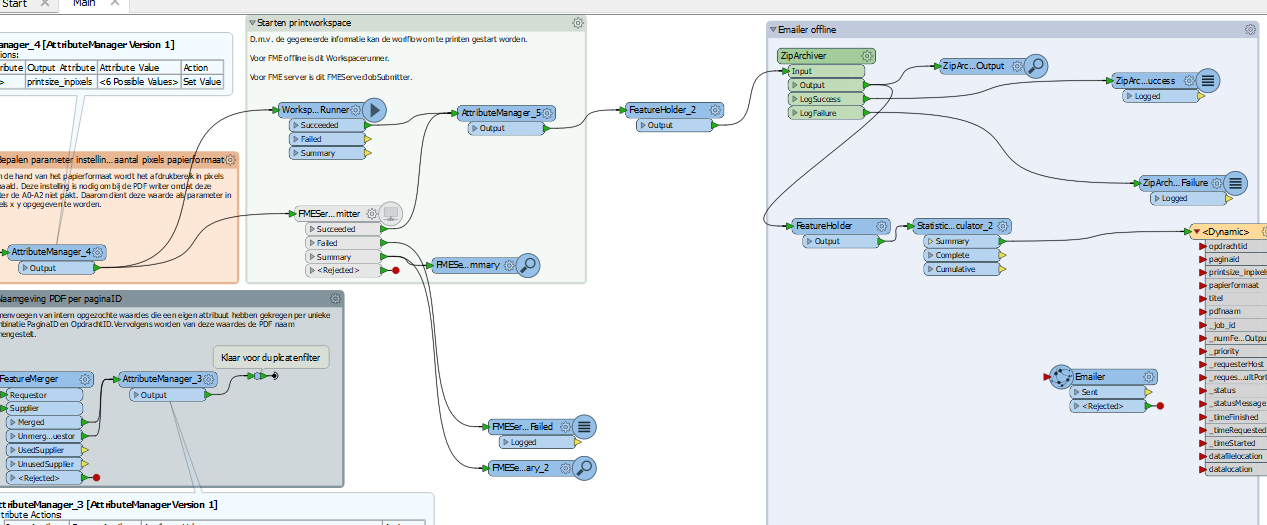

My workflow is set up as follows (or at least it should work like this):

Step 1: A REST command containing a TaskID is sent to FME Cloud and starts workflow 1.
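For illustration, the REST call in step 1 could be built like the sketch below. It assumes the FME Server REST API v3 `transformations/submit/{repository}/{workspace}` endpoint and token authorization; check the API documentation for your FME Cloud version. The host, repository, workspace, token and the published parameter names `TaskID` and `Email` are hypothetical placeholders. The request is only built, not sent.

```python
import json
import urllib.request


def build_submit_request(host, repository, workspace, token, task_id, email):
    """Build (but do not send) a POST request that starts workflow 1.

    Assumes FME Server REST API v3; TaskID and Email are hypothetical
    published parameter names on workflow 1.
    """
    url = f"https://{host}/fmerest/v3/transformations/submit/{repository}/{workspace}"
    body = {
        "publishedParameters": [
            {"name": "TaskID", "value": task_id},
            {"name": "Email", "value": email},
        ]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"fmetoken token={token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


req = build_submit_request(
    "myinstance.fmecloud.com", "PdfCreator", "workflow1.fmw",
    "MY_TOKEN", "42", "user@example.com",
)
# urllib.request.urlopen(req) would actually submit the job.
```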

Step 2: Workflow 1 fetches the information in our database that is linked to the TaskID. The fetched table contains x PageIDs (depending on how many pages are needed), and each PageID holds the information needed to set the paper size, geometry layers, WMS settings, graphs and tables, the PDF name, and anything else the page requires.
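The lookup in step 2 is essentially "all rows for one TaskID, one row per PageID". A minimal sketch with an in-memory SQLite database, assuming a hypothetical `pages` table; the real schema and database will differ:

```python
import sqlite3


def fetch_pages(conn, task_id):
    """Return one dict per PageID for the given TaskID, ordered by PageID.

    The table and column names are hypothetical stand-ins for the real schema.
    """
    conn.row_factory = sqlite3.Row
    rows = conn.execute(
        "SELECT page_id, paper_size, wms_url, pdf_name "
        "FROM pages WHERE task_id = ? ORDER BY page_id",
        (task_id,),
    ).fetchall()
    return [dict(r) for r in rows]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE pages (task_id TEXT, page_id INTEGER, paper_size TEXT, "
    "wms_url TEXT, pdf_name TEXT)"
)
conn.executemany(
    "INSERT INTO pages VALUES (?, ?, ?, ?, ?)",
    [
        ("42", 1, "A4", "https://example.com/wms", "overview.pdf"),
        ("42", 2, "A3", "https://example.com/wms", "detail.pdf"),
        ("99", 1, "A4", "https://example.com/wms", "other.pdf"),
    ],
)
pages = fetch_pages(conn, "42")  # only the two rows belonging to TaskID 42
```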

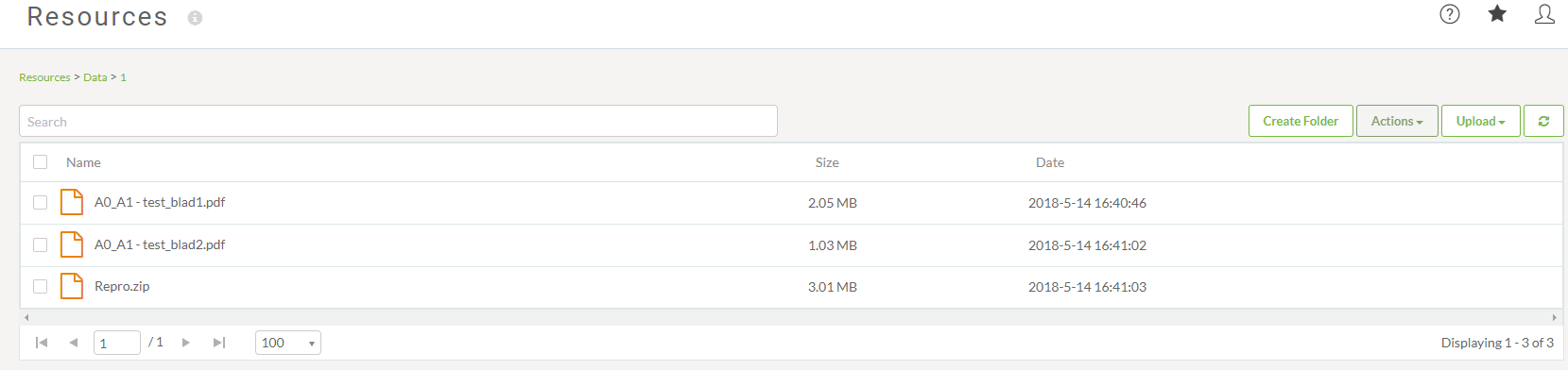

Step 3: For each PageID, one row is submitted to the FMEServerJobSubmitter (in sequence, waiting for each job to complete) with the information needed to start workflow 2, which builds the PDF from the table generated in step 2. Each PageID produces a single PDF (workflow 2 has been tested and works both offline and online).
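The sequential loop in step 3 can be sketched as below. The assumption here is that workflow 1 decides the output path of each PDF and passes it to workflow 2 (for example as a published parameter), so once a job has completed, workflow 1 knows exactly where its PDF is. `submit_and_wait` is a hypothetical callable standing in for the FMEServerJobSubmitter; the fake version just writes a dummy file.

```python
import os
import tempfile


def run_pages_sequentially(pages, submit_and_wait, output_dir):
    """Submit one job per page, in order, and return the PDF paths produced.

    submit_and_wait is hypothetical: it runs workflow 2 for one page,
    writes the PDF to `target`, and blocks until the job has finished.
    """
    pdf_paths = []
    for page in pages:
        target = os.path.join(output_dir, page["pdf_name"])
        ok = submit_and_wait(page, target)
        # Fail early if the job reported failure or left no file behind.
        if not ok or not os.path.exists(target):
            raise RuntimeError(f"Job for PageID {page['page_id']} produced no PDF")
        pdf_paths.append(target)
    return pdf_paths


def fake_submit_and_wait(page, target):
    # Stand-in for workflow 2: writes a dummy file where the PDF would go.
    with open(target, "wb") as f:
        f.write(b"%PDF-1.4 dummy for page " + str(page["page_id"]).encode())
    return True


out_dir = tempfile.mkdtemp()
pages = [
    {"page_id": 1, "pdf_name": "overview.pdf"},
    {"page_id": 2, "pdf_name": "detail.pdf"},
]
paths = run_pages_sequentially(pages, fake_submit_and_wait, out_dir)
```

Because the paths are chosen up front, nothing has to be "returned" from workflow 2 except a success or failure status.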

Step 4: The finished PDF from workflow 2 should be returned to workflow 1. At the end of workflow 1, all PDFs (if there is more than one PageID) should be bundled into a zip file and sent by email to the address provided in the REST command.
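The email at the end of step 4 (in FME most likely the Emailer transformer's job) boils down to a message with the zip attached. A sketch using Python's standard `email` package; the addresses, subject and file name are hypothetical, and the message is only built, not sent:

```python
from email.message import EmailMessage


def build_result_email(sender, recipient, task_id, zip_bytes, zip_name):
    """Build an email with the zip of generated PDFs attached."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient  # the address passed in with the REST command
    msg["Subject"] = f"PDFs for task {task_id}"
    msg.set_content(f"Attached are the generated PDFs for task {task_id}.")
    msg.add_attachment(
        zip_bytes, maintype="application", subtype="zip", filename=zip_name
    )
    return msg


# An empty zip archive is just the 22-byte end-of-central-directory record.
empty_zip = b"PK\x05\x06" + b"\x00" * 18
msg = build_result_email(
    "fme@example.com", "user@example.com", "42", empty_zip, "task_42.zip"
)
```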

With this flow I still have one problem:

Can I take the PDFs generated by the jobs submitted through the FMEServerJobSubmitter and combine those files into a zip file, and if so, how?
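Assuming each job writes its PDF to a folder that workflow 1 can also read, the bundling itself only needs the list of PDF paths once all jobs have completed. A minimal sketch with Python's `zipfile`; the folder and file names are hypothetical:

```python
import os
import tempfile
import zipfile


def bundle_pdfs(pdf_paths, zip_path):
    """Write every PDF into a single zip, stored under its base name."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in pdf_paths:
            zf.write(path, arcname=os.path.basename(path))
    return zip_path


# Create two placeholder "PDFs" to stand in for the output of workflow 2.
work = tempfile.mkdtemp()
pdfs = []
for name in ("overview.pdf", "detail.pdf"):
    p = os.path.join(work, name)
    with open(p, "wb") as f:
        f.write(b"%PDF-1.4 placeholder")
    pdfs.append(p)

zip_file = bundle_pdfs(pdfs, os.path.join(work, "task_42.zip"))
```

Storing entries under their base name keeps the server's folder structure out of the archive the recipient opens.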