Hi list.

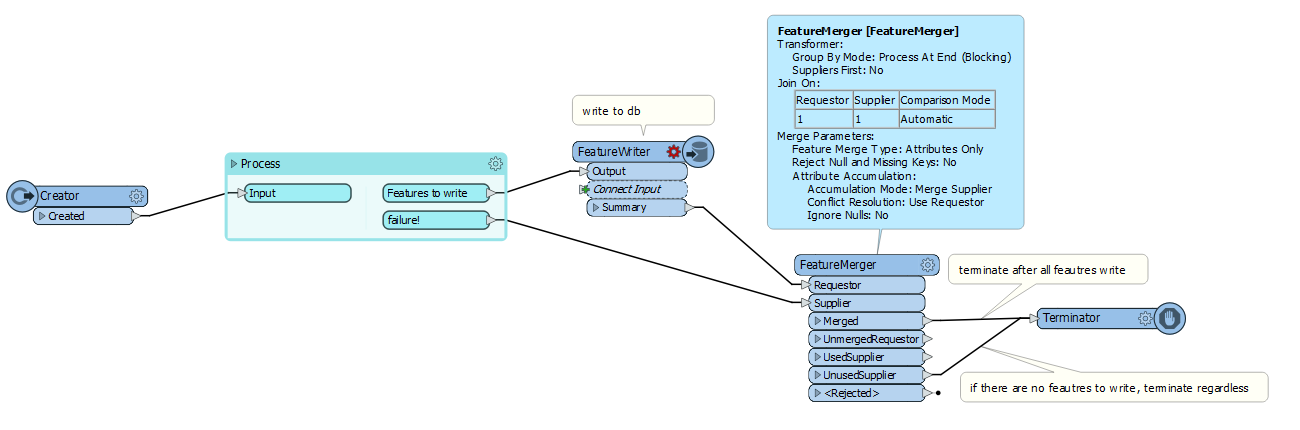

I've built a workspace with a loop that looks for information whose exact end I don't know in advance. So I loop with a margin: I save the ID of the last instance found and check whether there have been too many consecutive failures. If there have, I redirect to a Terminator transformer.
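To make the logic concrete, here is roughly what the loop does, written as a plain Python sketch rather than as the actual workspace. The names `scan_until_gap`, `lookup` and `max_misses` are made up for illustration and are not FME functions or parameters. The sample data is invented too.

```python
from typing import Callable, List, Optional, Tuple


def scan_until_gap(
    lookup: Callable[[int], Optional[str]],
    start_id: int = 1,
    max_misses: int = 5,
) -> Tuple[List[str], Optional[int]]:
    """Probe consecutive IDs and stop quietly after max_misses misses in a row.

    `lookup` is a hypothetical callable that returns a record for an ID,
    or None when nothing exists for that ID.
    """
    found: List[str] = []
    last_found_id: Optional[int] = None
    misses = 0
    current = start_id

    while misses < max_misses:
        record = lookup(current)
        if record is None:
            misses += 1              # one more consecutive failure
        else:
            found.append(record)
            last_found_id = current  # remember the last ID that existed
            misses = 0               # a hit resets the failure counter
        current += 1

    # Normal return: everything found so far is kept, nothing is discarded.
    return found, last_found_id


# Invented sample source with a gap at ID 3 and nothing from ID 6 onward.
data = {1: "a", 2: "b", 4: "c", 5: "d"}
records, last_id = scan_until_gap(data.get, start_id=1, max_misses=3)
```

The behaviour I'm after is the normal return at the end: the loop stops, but whatever was already found still gets written.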

However, when I ran the workspace, expecting some output to a database, no output was produced.

It seems that the Terminator transformer rolls back the previous database inserts. When I disabled the Terminator, everything worked as expected.

Is there a way to halt a workspace peacefully, rather than the "pull-the-plug" kind of behaviour that the Terminator seems to perform?

I.e., is there an option to make the Terminator transformer less "Terminator"-like?

Cheers