The PythonCreator has been improved in FME 2017.1. An 'input' method has been added, so the user can now choose between creating features before any reader runs (via the 'input' method) and creating features after the readers (via the 'close' method).

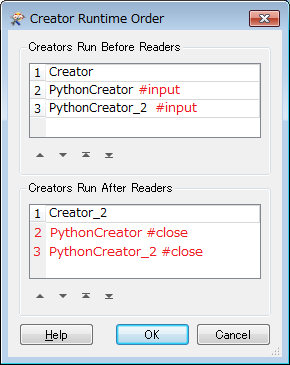

It's a good enhancement, but I noticed that the runtime order of PythonCreators that create features in the 'close' method cannot be controlled. That is, PythonCreators don't appear in the Creators Run After Readers table in the Creator Runtime Order dialog.

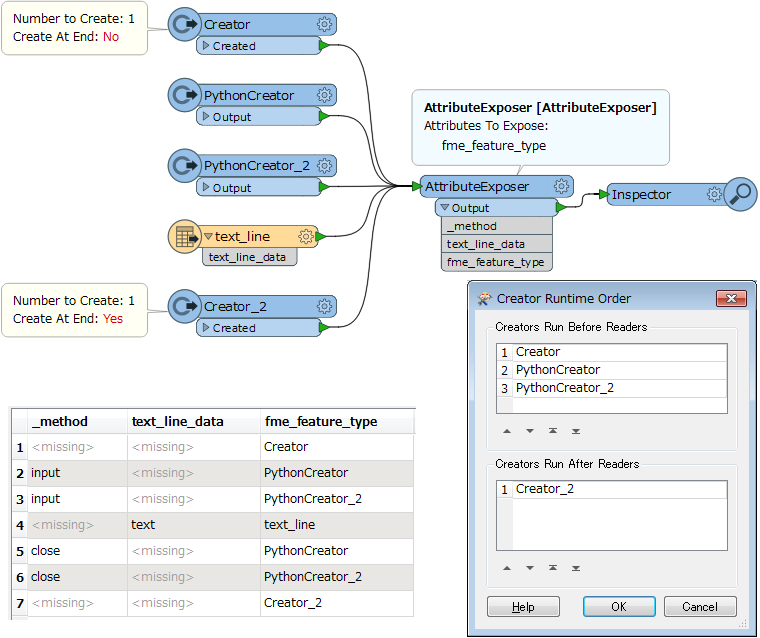

See also the attached demo (FME 2017.1.2):

    import fmeobjects

    class FeatureCreator1(object):
        def __init__(self):
            pass

        # Creates a feature before any reader runs.
        def input(self, feature):
            newFeature = fmeobjects.FMEFeature()
            newFeature.setAttribute('_method', 'input')
            self.pyoutput(newFeature)

        # Creates a feature after the readers.
        def close(self):
            newFeature = fmeobjects.FMEFeature()
            newFeature.setAttribute('_method', 'close')
            self.pyoutput(newFeature)

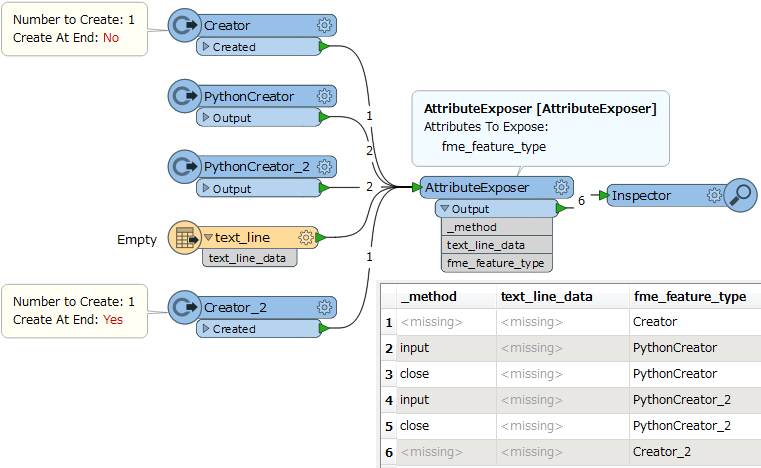

In addition, if the source dataset is empty (i.e. the reader feature type outputs no features), the order of the features output from the PythonCreators changes.

- When features are output from the reader: input - input - close - close.

- When no features are output from the reader: input - close - input - close.
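
To make the difference between the two orders listed above easier to see, here is a small sketch in plain Python that runs outside FME. It is only a model of the observed output, not how FME actually schedules the PythonCreators: `StubCreator`, `run_grouped`, `run_per_creator` and `observed_order` are hypothetical names, and the stub records method names instead of emitting `FMEFeature` objects.

```python
class StubCreator(object):
    """Hypothetical stand-in for a PythonCreator class; logs calls only."""

    def __init__(self, log):
        self.log = log

    def input(self, feature):
        self.log.append('input')

    def close(self):
        self.log.append('close')


def run_grouped(creators):
    # Assumed call sequence: every input() first, then every close().
    for creator in creators:
        creator.input(None)
    for creator in creators:
        creator.close()


def run_per_creator(creators):
    # Assumed call sequence: each creator finishes before the next starts.
    for creator in creators:
        creator.input(None)
        creator.close()


def observed_order(runner):
    # Drive two stub creators with the given runner and format the call log.
    log = []
    runner([StubCreator(log), StubCreator(log)])
    return ' - '.join(log)


print(observed_order(run_grouped))      # input - input - close - close
print(observed_order(run_per_creator))  # input - close - input - close
```

The first sequence matches what I see when the reader outputs features, and the second matches the empty-dataset case, so it looks as if the 'close' calls are grouped only when the reader produces something.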

There still seems to be room for improvement.