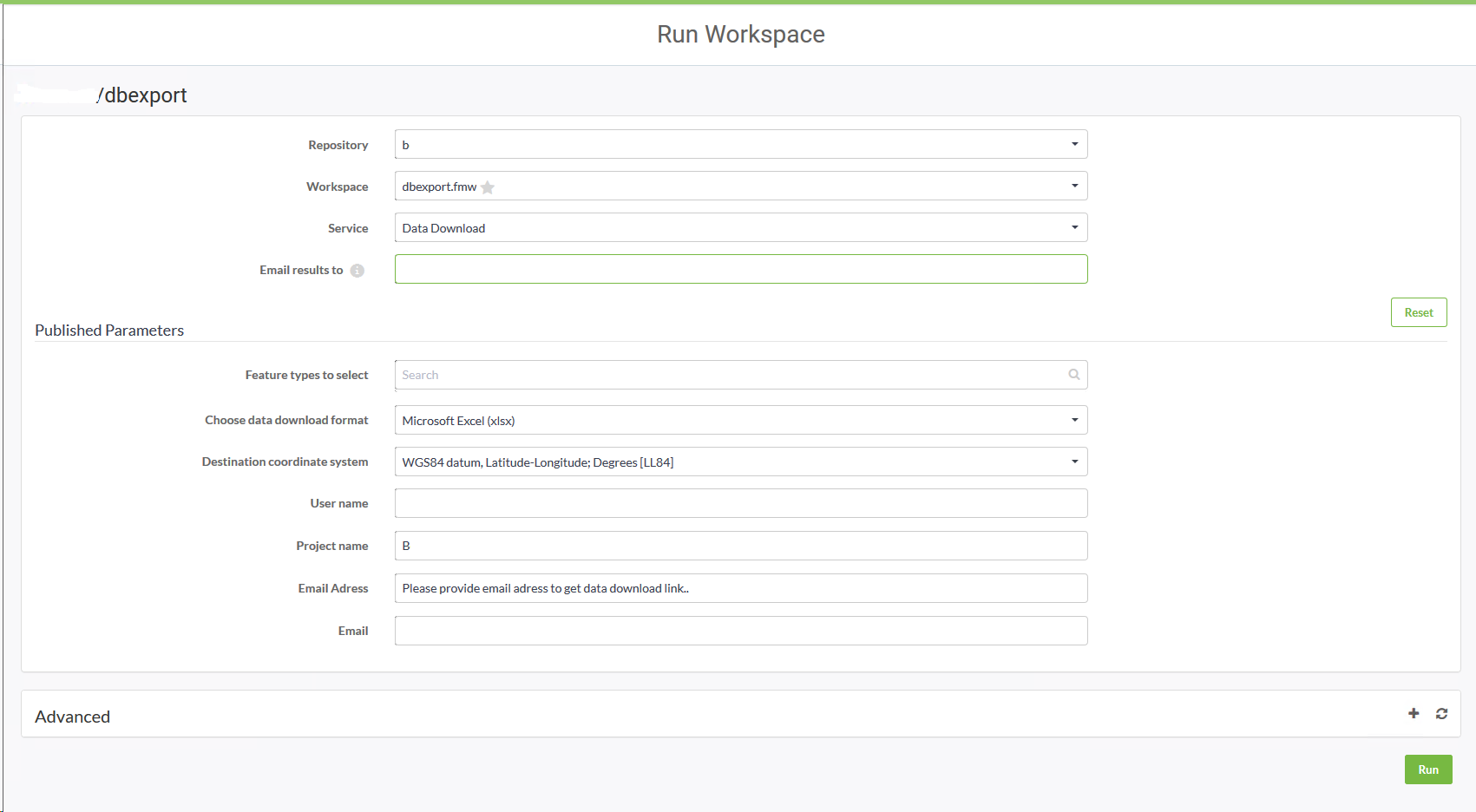

Hello everyone, I hope you are all doing well. I have the following workflow published on FME Server, which uses several published parameters.

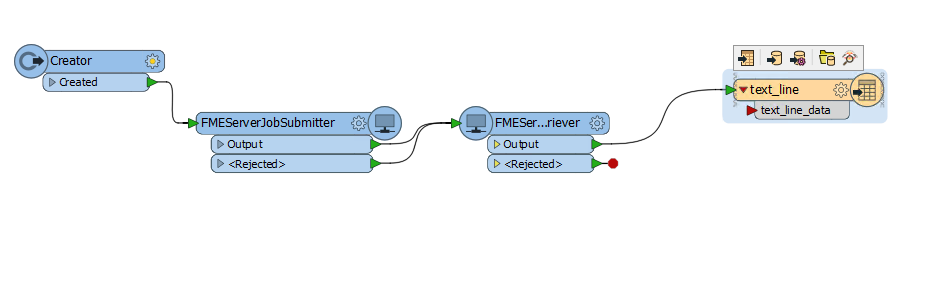

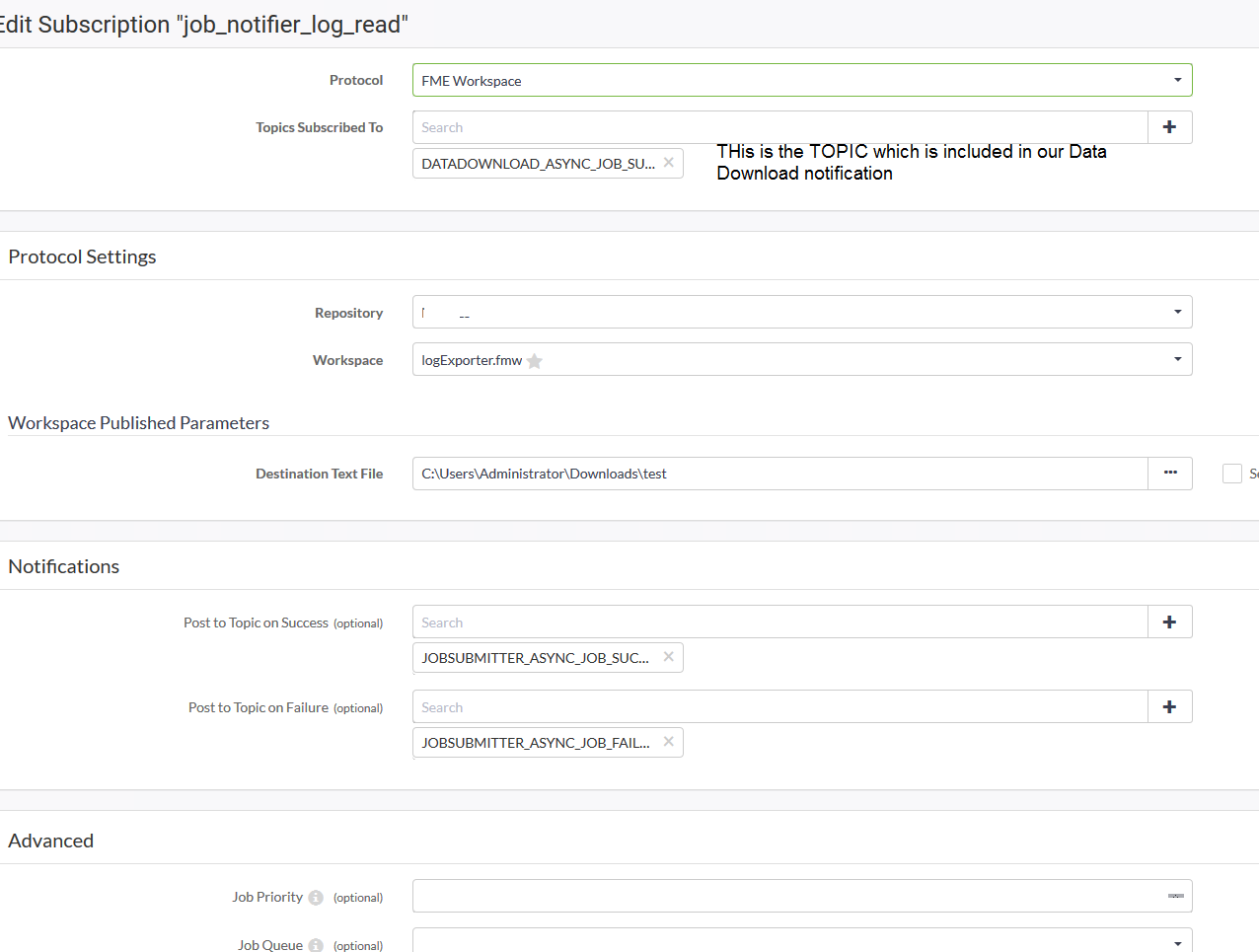

This data download service is triggered from a web application: after logging in, users can download the data in different formats. We now want to store the complete download history in a database table. I noticed that the information we need is contained in each job's log file. Can we set up an automated process so that, whenever a job finishes, the user's email, the date and time, the data title and the download format are stored in a table? This should happen automatically; we don't want to start any process manually.
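To make the request concrete, here is a minimal sketch of the kind of record we would like to end up with. It takes a different route from log parsing: it writes the values straight from the job's published parameters when the job ends. As far as we understand, an FME shutdown Python script can read published parameter values through `fme.macroValues`, so a function like this could be called from there. The parameter names (`USER_EMAIL`, `DATA_TITLE`, `OUTPUT_FORMAT`), the table layout and the use of SQLite are all hypothetical placeholders for our real database.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical published parameter names; the real workspace may use others.
PARAM_EMAIL = "USER_EMAIL"
PARAM_TITLE = "DATA_TITLE"
PARAM_FORMAT = "OUTPUT_FORMAT"


def ensure_table(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) download-history table if it is missing."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS download_history (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            user_email TEXT NOT NULL,
            downloaded_at TEXT NOT NULL,
            data_title TEXT NOT NULL,
            download_format TEXT NOT NULL
        )
        """
    )


def record_download(conn: sqlite3.Connection, params: dict, finished_at=None) -> None:
    """Insert one row describing a finished download job.

    `params` maps parameter names to values, e.g. a copy of the job's
    published parameters. `finished_at` defaults to the current UTC time.
    """
    if finished_at is None:
        finished_at = datetime.now(timezone.utc)
    ensure_table(conn)
    conn.execute(
        "INSERT INTO download_history "
        "(user_email, downloaded_at, data_title, download_format) "
        "VALUES (?, ?, ?, ?)",
        (
            params[PARAM_EMAIL],
            finished_at.isoformat(),
            params[PARAM_TITLE],
            params[PARAM_FORMAT],
        ),
    )
    conn.commit()


if __name__ == "__main__":
    # In-memory database only for trying the sketch out.
    demo = sqlite3.connect(":memory:")
    record_download(
        demo,
        {PARAM_EMAIL: "user@example.com", PARAM_TITLE: "Roads", PARAM_FORMAT: "Shape"},
    )
    print(demo.execute(
        "SELECT user_email, data_title, download_format FROM download_history"
    ).fetchall())
```

Recording at job completion like this would avoid depending on the exact wording of the log file, but we are not sure whether this is the recommended approach on FME Server.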

A detailed explanation would be much appreciated. Please help us with this.

With kind regards

Muqit