Hello,

I’m having a hard time figuring out why a JSON file created with a Text Writer is not accepted by the GoogleBigQueryConnector.

I have an original JSON Key File downloaded from Google Cloud for the Service Account we use to authenticate to BigQuery. This JSON file works fine: I can authenticate and select the Dataset I want to upload data to, as well as the table in that Dataset.

The problem is that, for the GoogleBigQueryConnector (GBQC) to use a Service Account instead of a Web Connection, I have to set a system environment variable that points to the service account key file. The GBQC then seems to take the project_id from inside that JSON file and use it as the default project to log you in. This means that even if you have access to several projects in BigQuery, you can only access one project per Service Account Key File.
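The traceback further down passes through `google.auth.default()` and `_get_explicit_environ_credentials`, which in google-auth read the key-file path from the `GOOGLE_APPLICATION_CREDENTIALS` environment variable (presumably the variable being set here). The stdlib-only sketch below approximates that lookup to show where the default project comes from. It is a simplification, not the library's code, and `default_project_from_env` is a hypothetical helper.

```python
import json
import os
from typing import Optional


def default_project_from_env(var_name: str = "GOOGLE_APPLICATION_CREDENTIALS") -> Optional[str]:
    """Hypothetical, simplified stand-in for how google-auth derives a default
    project from the key file named by an environment variable."""
    key_path = os.environ.get(var_name)
    if not key_path:
        return None  # no explicit key file configured
    # Open the key file in text mode and parse it, as load_credentials_from_file does.
    with open(key_path, "r") as file_obj:
        info = json.load(file_obj)
    # The key file's "project_id" becomes the default project.
    return info.get("project_id")


print(default_project_from_env())
```

The traceback shows this lookup happening inside `bigquery.Client.__init__` (via `_determine_default_project`), which suggests the client was created without an explicit project. In the client library itself, passing `project=` to `bigquery.Client` is the usual way to pick a different project; whether the connector offers an equivalent setting I can't say.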

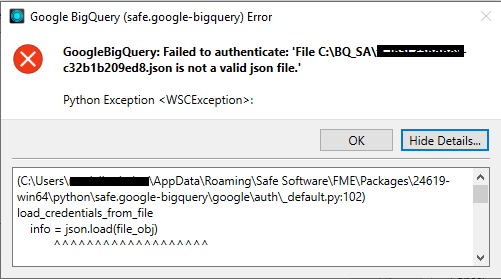

Here is an image of the error I get when the GBQC tries to use the JSON file to authenticate to BigQuery.

Here is the text from the error Details (I can’t make head or tail of why it is not accepting the file as a JSON file):

```
(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\auth\_default.py:102)
load_credentials_from_file
    info = json.load(file_obj)
           ^^^^^^^^^^^^^^^^^^^

(json\__init__.py:293)
load

(json\__init__.py:346)
loads

(json\decoder.py:337)
decode

(json\decoder.py:355)
raw_decode

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\fmepy_google_bigquery\auth.py:33)
get_client
    return client(client_info=get_client_info(), _http=session, **credentials)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\cloud\bigquery\client.py:174)
__init__
    super(Client, self).__init__(

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\cloud\client.py:249)
__init__
    _ClientProjectMixin.__init__(self, project=project)

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\cloud\client.py:201)
__init__
    project = self._determine_default(project)
              ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\cloud\client.py:216)
_determine_default
    return _determine_default_project(project)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\cloud\_helpers.py:186)
_determine_default_project
    _, project = google.auth.default()
                 ^^^^^^^^^^^^^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\auth\_default.py:338)
default
    credentials, project_id = checker()
                              ^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\auth\_default.py:185)
_get_explicit_environ_credentials
    credentials, project_id = load_credentials_from_file(
                              ^^^^^^^^^^^^^^^^^^^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\google\auth\_default.py:107)
load_credentials_from_file
    six.raise_from(new_exc, caught_exc)

(<string>:3)
raise_from

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\fmepy_google_bigquery\resource_selector.py:70)
getContainerContents
    api = GoogleBigQueryApi(get_client_credentials_dict(options), LOG_NAME)
          ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\fmepy_google_bigquery\api.py:45)
__init__
    self.client = get_client(bigquery.Client, credentials) # type: bigquery.Client
                  ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\fmepy_google_bigquery\auth.py:35)
get_client
    raise GoogleBigQueryApiError(

(C:\Users\r.z\AppData\Roaming\Safe Software\FME\Packages\24619-win64\python\safe.google-bigquery\fmepy_google_bigquery\resource_selector.py:72)
getContainerContents
    raise WSCException(e.message_number, e.message_parameters)
```

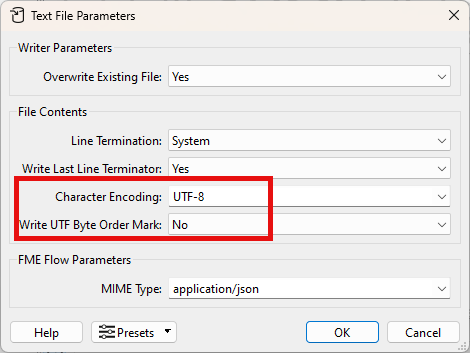
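The first frames of the traceback bottom out in `json.decoder.raw_decode`, i.e. `json.load()` could not parse the file's text at all, and google-auth then re-raises that failure (`six.raise_from` in `load_credentials_from_file`). One way to get exactly that chain from a file that looks identical in an editor is an invisible UTF-8 byte order mark (BOM) decoded with a Windows code page: as far as I can tell, google-auth opens the key file in text mode without an explicit encoding, so on Windows the locale code page is used. The sketch below reproduces that hypothesis; `cp1252` as the locale code page and the helper name `parse_like_windows_default` are assumptions, and this is not a confirmed diagnosis.

```python
import json


def parse_like_windows_default(raw: bytes, encoding: str = "cp1252"):
    """Hypothetical helper: decode raw file bytes with a Windows code page
    (cp1252 is an assumption) and parse the result as JSON."""
    text = raw.decode(encoding)
    return json.loads(text)


original = b'{"type": "service_account", "project_id": "demo-project"}'
with_bom = b"\xef\xbb\xbf" + original  # same content, prefixed with a UTF-8 BOM

print(parse_like_windows_default(original)["project_id"])  # demo-project

try:
    parse_like_windows_default(with_bom)  # BOM bytes become "ï»¿" in cp1252
except json.JSONDecodeError as exc:
    print("JSONDecodeError:", exc)  # Expecting value: line 1 column 1 (char 0)

# Decoding with "utf-8-sig" strips the BOM, and the same bytes parse fine.
print(json.loads(with_bom.decode("utf-8-sig"))["project_id"])  # demo-project
```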

I’ve tried all the different formats for writing the text file (which I deliberately save with a .json extension instead of .txt), but I can never get the GBQC to accept the file written by the Text Writer as a valid JSON file.

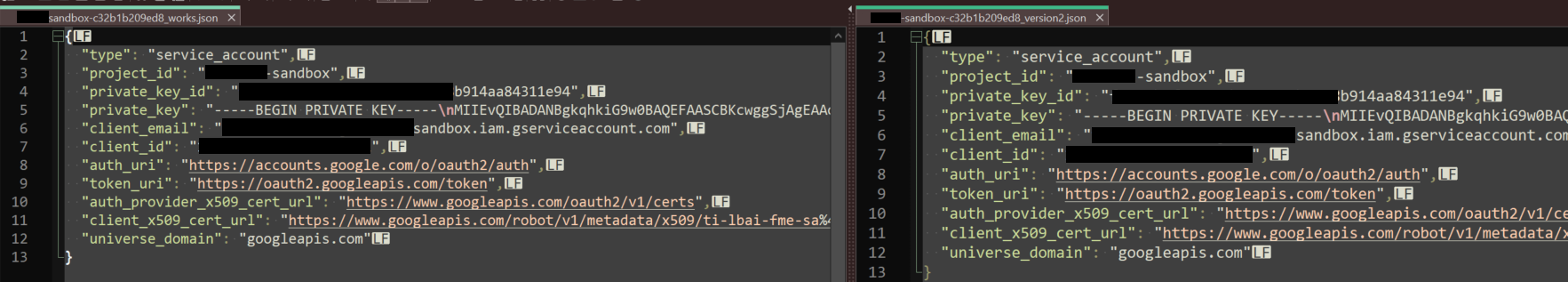

When I compare the original JSON file with the one created by the Text Writer in Notepad++, with all characters shown, I cannot find any difference between the two.
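Notepad++'s "Show All Characters" view displays whitespace and line endings but, as far as I know, not a byte order mark or the file's encoding (those are shown separately, e.g. in the status bar). A byte-level comparison can catch differences the editor view hides. A minimal sketch; the function names and the example paths are hypothetical.

```python
from pathlib import Path
from typing import Optional

UTF8_BOM = b"\xef\xbb\xbf"


def describe_bytes(data: bytes) -> dict:
    """Summarise byte-level details that text editors often hide."""
    return {
        "size": len(data),
        "utf8_bom": data.startswith(UTF8_BOM),
        "utf16_bom": data[:2] in (b"\xff\xfe", b"\xfe\xff"),
        "crlf_count": data.count(b"\r\n"),
        "non_ascii_bytes": sum(1 for byte in data if byte > 0x7F),
    }


def first_difference(a: bytes, b: bytes) -> Optional[int]:
    """Return the offset of the first differing byte, or None if identical."""
    for i, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return i
    # One file is a prefix of the other (or both are equal).
    return None if len(a) == len(b) else min(len(a), len(b))


def compare_files(original_path: str, written_path: str) -> None:
    a = Path(original_path).read_bytes()
    b = Path(written_path).read_bytes()
    print("original:", describe_bytes(a))
    print("written: ", describe_bytes(b))
    offset = first_difference(a, b)
    if offset is None:
        print("files are byte-for-byte identical")
    else:
        print(f"first difference at byte {offset}: "
              f"{a[offset:offset + 8]!r} vs {b[offset:offset + 8]!r}")
```

Usage, with placeholder paths: `compare_files(r"C:\path\to\original.json", r"C:\path\to\written.json")`. A result such as `utf8_bom: True` for only the written file, or a first difference at byte 0, would point at the encoding rather than the JSON content.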

I’ve also tried using a JSONTemplater with a JSONFormatter and then writing the JSON file with a Text Writer, but the resulting file is still not accepted as a valid JSON file by the GBQC.
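If the written file does turn out to differ at the byte level (for example a leading BOM or a different encoding), one way to take the writer's encoding settings out of the picture is to write the file from code that controls the encoding explicitly, e.g. logic adapted into a PythonCaller or run as an external script. A minimal sketch, assuming the JSON text is already available as a string (such as the JSONTemplater/JSONFormatter output); `write_json_no_bom` is a hypothetical helper, not an FME API.

```python
import json


def write_json_no_bom(json_text: str, path: str) -> None:
    """Validate JSON text and write it as UTF-8 without a byte order mark."""
    # Drop a leading BOM character if one slipped into the text itself,
    # then parse to make sure the content really is valid JSON.
    data = json.loads(json_text.lstrip("\ufeff"))
    # Python's "utf-8" codec never emits a BOM (unlike "utf-8-sig");
    # newline="\n" keeps line endings as-is on Windows.
    with open(path, "w", encoding="utf-8", newline="\n") as fh:
        json.dump(data, fh, indent=2)  # "\n" inside private_key stays escaped
        fh.write("\n")
```

Because the key file content is plain ASCII, the output reads back identically whether it is decoded as UTF-8 or as a Windows code page.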

Any idea what I might be doing wrong?

Thanks in advance.

Regards,

RZ.-