Hello,

I have a workspace that reads a large amount of data from a database using the PostGIS reader. I then try to write it to separate files using a fanout expression such as: output\\@Value(myAttribute).gml

The database holds about 20 GB of data, so at some point I get an out-of-memory exception.

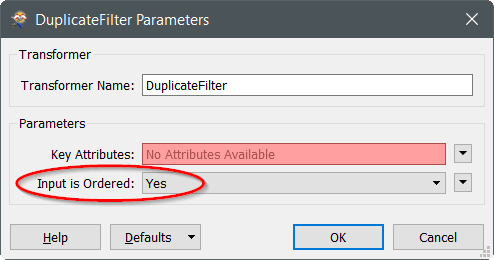

My idea was to first use a FeatureReader to read only myAttribute, then a DuplicateFilter to keep the unique values, and then a second FeatureReader with the WHERE clause: myAttribute = @Value(myAttribute)

However, the result is written to file only after all the data has been read, so I get the same out-of-memory exception.

Is there any way to:

First, read all unique myAttribute values from the given table and then close the connection (because a connection timeout may also be a problem),

and next, for each of those unique myAttribute values, read the matching data from the table and save it to a separate file, one value at a time? This way I think I would avoid both the out-of-memory and the connection-timeout exceptions.
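
To make the idea concrete, here is a rough sketch of the two-step pattern in plain Python, outside FME. It is only an illustration of what I am after, not a working FME solution: `sqlite3` stands in for PostGIS, CSV stands in for GML, and the function names, the table name `my_table`, and `batch_size` are all made up for the example.

```python
import csv
import os
import sqlite3
import tempfile


def distinct_values(conn, table, column):
    """Step 1: fetch only the unique (non-NULL) values of one column."""
    sql = f'SELECT DISTINCT "{column}" FROM "{table}" WHERE "{column}" IS NOT NULL'
    return [row[0] for row in conn.execute(sql)]


def export_per_value(conn, table, column, out_dir, batch_size=1000):
    """Step 2: for each unique value, stream its rows into a separate file.

    Only one batch of rows is held in memory at a time, and each file is
    closed before the next value is queried.
    """
    written = []
    for value in distinct_values(conn, table, column):
        # Assumes the value is safe to use as a file name.
        path = os.path.join(out_dir, f"{value}.csv")
        cur = conn.execute(
            f'SELECT * FROM "{table}" WHERE "{column}" = ?', (value,)
        )
        with open(path, "w", newline="") as fh:
            writer = csv.writer(fh)
            writer.writerow([d[0] for d in cur.description])  # header row
            while True:
                batch = cur.fetchmany(batch_size)
                if not batch:
                    break
                writer.writerows(batch)
        written.append(path)
    return written


if __name__ == "__main__":
    # Tiny in-memory table standing in for the real 20 GB table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE my_table (id INTEGER, myAttribute TEXT)")
    conn.executemany(
        "INSERT INTO my_table VALUES (?, ?)", [(1, "a"), (2, "b"), (3, "a")]
    )
    out_dir = tempfile.mkdtemp()
    for path in export_per_value(conn, "my_table", "myAttribute", out_dir):
        print(path)
    conn.close()
```

In this sketch the list of unique values is small and fully read before any data query starts, so the first query is finished early, and each later query only touches the rows for one value. I am looking for the equivalent behaviour inside an FME workspace.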

Thanks for any hints on that!