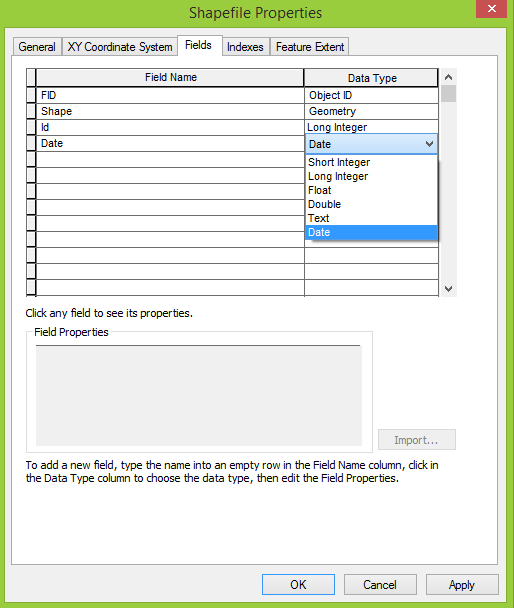

I have the following issue in a dynamic workflow. When converting from GDB to Shapefile, all the date fields (fme_datetime) become strings. I need those fields to stay fme_datetime. How can I solve this issue?

This post is closed to further activity.

It may be an old question, an answered question, an implemented idea, or a notification-only post.

Please check post dates before relying on any information in a question or answer.

For follow-up or related questions, please post a new question or idea.

If there is a genuine update to be made, please contact us and request that the post is reopened.
