Hi all,

At the moment I have the following situation.

I have a regular 6*6 grid whose grid size depends on the outcome of a formula that includes the following variables:

1. The volume of the underlying pile of sand

2. The surface area (in the xy-plane only) of the pile of sand.

In this case, both can be treated as constants, since the pile of sand stays the same.

The model now calculates the maximum grid size based on those constants. The grid is then projected over the pile of sand, resulting in the 6*6 overlying grid mentioned above.

Next step: calculating the thickness of the sand at the grid points. These are the locations where holes should be drilled until the surface underneath the pile of sand is reached; the depth of each hole is called the drill depth. The total drill depth must be 100 m or more (by law), but should be as small as possible to reduce labour costs.
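To make this step concrete, here is a minimal Python sketch of how I think about the total drill depth. It is not my actual model: the rectangular extent, the `grid_points` and `total_drill_depth` helpers and the constant 2 m thickness function are all assumptions made up for illustration.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def grid_points(x_extent: float, y_extent: float, grid_size: float) -> List[Point]:
    """Regular grid points covering a rectangle, starting at the origin (hypothetical layout)."""
    # The small epsilon guards against float rounding when the extent is an exact multiple.
    nx = math.floor(x_extent / grid_size + 1e-9) + 1
    ny = math.floor(y_extent / grid_size + 1e-9) + 1
    return [(i * grid_size, j * grid_size) for i in range(nx) for j in range(ny)]


def total_drill_depth(points: List[Point], thickness_at: Callable[[float, float], float]) -> float:
    """Sum the sand thickness (the drill depth) over all grid points."""
    return sum(thickness_at(x, y) for x, y in points)


# Toy data: a 25 m x 25 m footprint with a 5 m grid gives 6*6 points;
# the constant thickness of 2 m is a stand-in for the real surface model.
points = grid_points(25.0, 25.0, 5.0)
total = total_drill_depth(points, lambda x, y: 2.0)
print(len(points), total)
```

A smaller grid size gives more points and therefore a larger total, which is the lever I want to adjust automatically.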

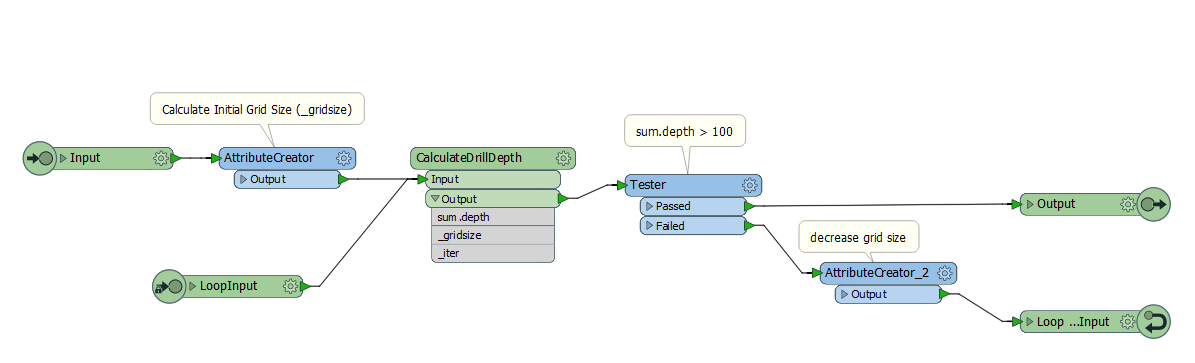

Problem: when I run the model and the total drill depth is, e.g., 96 m (as in this example), I want to rerun the whole process with a smaller grid size, resulting in more drill points and a higher total drill depth. This can be done simply by adjusting the grid size formula to [initial]-[0.1], for example.

Current situation: I now have multiple long transformer chains that differ only at the start ([initial]-[0.2], [initial]-[0.3], etc.). They all run correctly, and their results are tested, merged and filtered at the end to keep only the right grid points: the ones with the smallest total depth that still reaches 100 m. These points are the outcome of just one of the "loops", yet all of them are run. There must be a more elegant way to loop this process, since for now I am using brute force to determine the right grid size from the outcome of multiple parallel transformer processes. My whole model works fine, but I would like to avoid copy-pasting long transformer chains that all run even when it is unnecessary.

Any suggestions would be appreciated on looping a process in which I only need to change an attribute formula at the beginning, according to the outcome of a simple test (if sum.depth < 100 then gridsize = [initial]-0.1; if sum.depth is still < 100 then gridsize = [initial]-0.2; etc.), and on ending the process once the right grid size is found.

An iterator that subtracts a fixed value on each pass of the loop would be great.

An example:

1st run: use [initial] grid size, as calculated by the original formula

If the requirement is not met:

2nd run: use [initial] - [0.1]

If the requirement is still not met:

3rd run: use [initial] - [0.2]

And so on, terminating the process as soon as the requirement is met (drill.depth >=100).
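In plain Python, the loop I have in mind would look roughly like the sketch below. The function name `find_grid_size`, the `total_depth_for` callback and the toy formula `2400 / grid_size**2` are assumptions made up for illustration; in my real model the callback would be the whole transformer chain.

```python
from typing import Callable, Optional, Tuple


def find_grid_size(
    initial: float,
    total_depth_for: Callable[[float], float],
    required_depth: float = 100.0,
    step: float = 0.1,
) -> Optional[Tuple[float, float, int]]:
    """Shrink the grid size by `step` per run until the total drill depth is large enough.

    Returns (grid_size, total_depth, number_of_runs), or None if the grid size
    reaches zero before the requirement is met.
    """
    run = 0
    while True:
        # Recompute from the initial value so rounding errors do not accumulate.
        grid_size = round(initial - run * step, 10)
        if grid_size <= 0:
            return None
        total = total_depth_for(grid_size)
        if total >= required_depth:
            # Stop at the first (largest) grid size that meets the requirement.
            return grid_size, total, run + 1
        run += 1


# Toy stand-in for the model: 96 m at the initial grid size of 5.0.
result = find_grid_size(5.0, lambda g: 2400.0 / g**2)
print(result)  # stops on the third run, at grid size 4.8
```

Because the loop stops at the first grid size that passes the test, none of the smaller grid sizes are ever evaluated, which is exactly the saving I am after.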

In case my problem is too complex: general information about looping a process after a test, and ending it when the tester's requirement is met, would also be helpful; that is actually all I need to know. The model works fine, but its speed and efficiency could be improved if I didn't have to build the loop manually as I'm doing now.

Thank you in advance,

Martin